Hello everyone,

We recently completed a migration from ESXi to Proxmox. The migration went smoothly, and everything worked perfectly.

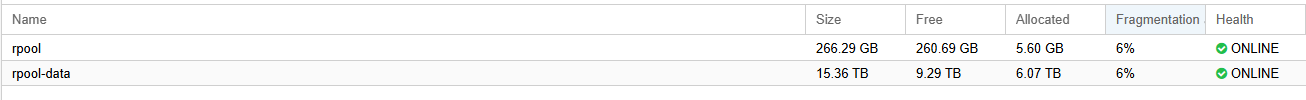

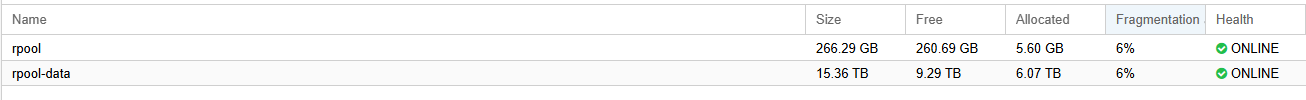

However, after one week, we noticed two different storage views in Proxmox, and we're unsure which one is correct. One view shows only 900 GB free, while the other shows 9.29 TB free.

In the shell, we found the following:

root@lkppve0001:~# zfs list -r rpool-data

NAME USED AVAIL REFER MOUNTPOINT

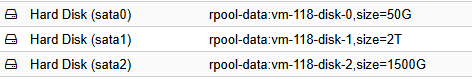

rpool-data/vm-118-disk-0 101G 779G 37.1G -

rpool-data/vm-118-disk-1 3.82T 2.74T 1.78T -

rpool-data/vm-118-disk-2 2.23T 2.20T 758G -

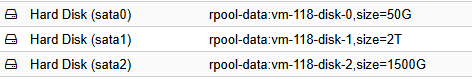

What do these values mean? When I check the disk sizes inside the VM, they don't seem to match this output.
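To make the mismatch concrete, here is a rough Python sketch that takes only the three rows pasted above and computes how far USED exceeds REFER for each zvol. The function names are made up for illustration, and the script assumes the `G`/`T` suffixes printed by `zfs list` are powers of 1024. It does not explain where the gap comes from; it only does the subtraction.

```python
# Rows copied verbatim from the `zfs list -r rpool-data` output above.
ZFS_LIST_ROWS = """\
rpool-data/vm-118-disk-0 101G 779G 37.1G -
rpool-data/vm-118-disk-1 3.82T 2.74T 1.78T -
rpool-data/vm-118-disk-2 2.23T 2.20T 758G -
"""

# Assumption: zfs prints sizes with power-of-1024 suffixes (G = GiB, T = TiB).
UNIT_TO_GIB = {"G": 1.0, "T": 1024.0}


def to_gib(size: str) -> float:
    """Convert a zfs-style size such as '3.82T' or '758G' to GiB."""
    return float(size[:-1]) * UNIT_TO_GIB[size[-1]]


def used_minus_refer(rows: str) -> dict[str, float]:
    """Return, per dataset, how many GiB USED exceeds REFER."""
    gaps = {}
    for line in rows.strip().splitlines():
        # Columns: NAME USED AVAIL REFER MOUNTPOINT
        name, used, _avail, refer, _mountpoint = line.split()
        gaps[name] = to_gib(used) - to_gib(refer)
    return gaps


if __name__ == "__main__":
    for name, gap in used_minus_refer(ZFS_LIST_ROWS).items():
        print(f"{name}: USED exceeds REFER by about {gap:.0f} GiB")
```

For all three disks USED is well above REFER, which is the part I cannot reconcile with the sizes shown inside the VM.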

Thank you all in advance.
