I am unable to get reasonable transfer speeds to and from VMs over SMB (both Win 10 and TrueNAS).

Setup:

3xDL380 G8 cluster w Ceph

each DL380 G8

H220 HBA w 3 Samsung 830 SSDs

H221 HBA dual links to D2700 shelf w 25 10k SAS drives

128GB Memory

10Gb 561T Ethernet (2 port)

Mellanox ConnectX-3 40GbE

40Gb connections are used for Ceph only.

1 10Gb link is used for accessing VMs

1 10Gb link is for Corosync

1 SSD pool w 9 SSDs

1 HDD pool w 75 10k SAS drives.

When testing with rados bench, both pools yield good results, with the HDD pool showing slightly better throughput:

rados bench -p hdd_pool 60 write --no-cleanup

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

60 16 6874 6858 457.116 460 0.132968 0.139785

Total time run: 60.1512

Total writes made: 6874

Write size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 457.115

Stddev Bandwidth: 26.6482

Max bandwidth (MB/sec): 500

Min bandwidth (MB/sec): 376

Average IOPS: 114

Stddev IOPS: 6.66206

Max IOPS: 125

Min IOPS: 94

Average Latency(s): 0.139961

Stddev Latency(s): 0.0559417

Max latency(s): 0.596267

Min latency(s): 0.0584686
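As a sanity check on the numbers above, here is a small sketch that recomputes the reported bandwidth and IOPS from the totals in the output. The constant names are my own, and I am assuming rados bench counts 1 MB as 1024*1024 bytes here.

```python
# Values copied from the rados bench output above.
WRITE_SIZE_BYTES = 4194304   # "Write size"
TOTAL_WRITES = 6874          # "Total writes made"
TOTAL_TIME_S = 60.1512       # "Total time run"

# Assumption: "MB" in the bench output means 1024 * 1024 bytes.
BYTES_PER_MB = 1024 * 1024

# Bandwidth is total bytes written divided by elapsed time.
bandwidth_mb_s = TOTAL_WRITES * WRITE_SIZE_BYTES / TOTAL_TIME_S / BYTES_PER_MB

# IOPS is simply completed writes per second.
avg_iops = TOTAL_WRITES / TOTAL_TIME_S

print(f"bandwidth: {bandwidth_mb_s:.1f} MB/s, IOPS: {avg_iops:.0f}")
```

Both values line up with the "Bandwidth (MB/sec)" and "Average IOPS" lines, so the benchmark output is at least internally consistent.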

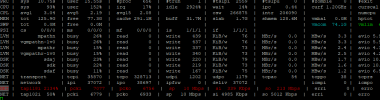

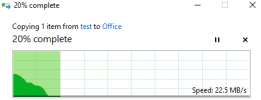

Given this benchmark I would expect good transfer rates, but I am seeing only 2-35 MB/s to these pools when they are mounted in VMs (both TrueNAS and Win 10). Transfers between physical machines with NVMe drives over the same network reach 800-900 MB/s. Looking at atop on the PVE node where the VM is running, I see that the tap interface is reported as a 10 Mb/s device rather than a 10 Gb/s device. It also shows ~2000% utilization. Is this the cause of my issues? If so, how do I correct it? If not, where should I look next?
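To put the atop reading in perspective, here is a rough sketch of what ~2000% utilization would mean in real throughput. It assumes that atop computes utilization against the link speed the interface reports, and that the tap device reports a nominal 10 Mbit/s; the function name is just for illustration.

```python
# Assumption: atop derives utilization from the reported link speed,
# and the tap interface reports a nominal 10 Mbit/s.
REPORTED_SPEED_MBIT = 10      # speed atop shows for the tap interface
UTILIZATION_PERCENT = 2000    # approximate utilization atop shows


def implied_throughput_mbyte_s(speed_mbit, utilization_percent):
    """Convert a utilization percentage of a nominal link speed to MB/s."""
    mbit_per_s = speed_mbit * utilization_percent / 100
    return mbit_per_s / 8  # 8 bits per byte


throughput = implied_throughput_mbyte_s(REPORTED_SPEED_MBIT, UTILIZATION_PERCENT)
print(f"{throughput:.1f} MB/s")  # prints "25.0 MB/s"
```

If that assumption holds, the ~2000% figure corresponds to about 25 MB/s, which is in the same range as the transfer rates I am seeing. That would make the utilization number a reflection of the slow transfers rather than proof that the interface is capped at 10 Mbit/s.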

