Hello,

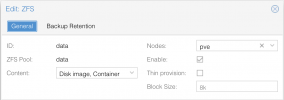

I have a 5TB ZFS RAID pool that I use as data storage for several VMs.

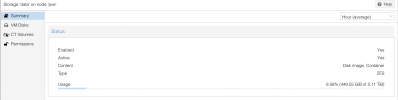

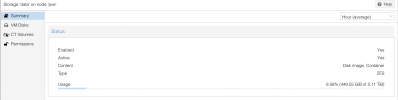

When I go to data > Summary, it shows 8.58% used: 449GB of 5TB (see the attached screenshots).

Yet I am not using 449GB.

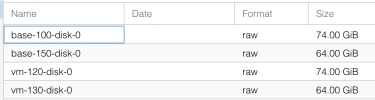

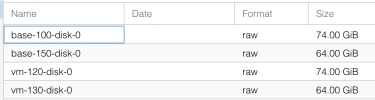

When I look at my VM disks, the total is 276GB, which is correct.

Where does the 173GB difference come from?
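To spell out the arithmetic behind that number, here is a small sketch using only the two figures from the screenshots. The variable names are just for illustration, and I am assuming both values are in the same unit as displayed in the GUI.

```python
# Figures read from the Proxmox GUI screenshots (GB as displayed; assumed same unit).
reported_used_gb = 449   # data > Summary
vm_disks_total_gb = 276  # sum of the VM disks

# Space the summary reports beyond what the VM disks account for.
unexplained_gb = reported_used_gb - vm_disks_total_gb
share = unexplained_gb / reported_used_gb

print(f"unexplained: {unexplained_gb} GB ({share:.1%} of reported usage)")
```

So more than a third of the reported usage is not accounted for by the VM disks.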

Note also that the Proxmox system itself is installed on a separate, dedicated hard drive.

Thanks
