Hi,

I just installed Proxmox and had to destroy my mdraid array (unsupported in Proxmox) from a previous Ubuntu install.

After wiping my 3 disks, I went to the ZFS section and created a ZFS pool with them:

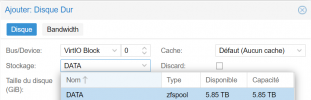

When I try to add a disk to my VM, I select the pool and can see the RAID5 made of three 3 TB disks. It shows a little less than 6 TB, which is expected:

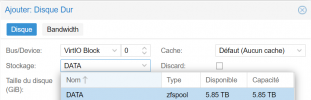

But when I try to add it, I get an "out of space" error.

The pool is shown as 5.85 TB, so I entered 5850. I also tried 5700, 5500, and even 5000; every time it displays the same message.
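To make the arithmetic behind those numbers explicit, here is a small sketch of the conversion I did. Whether the size field expects decimal GB or binary GiB is an assumption on my part, so it computes both; the function name `tb_to_size_field` is just something I made up for illustration.

```python
def tb_to_size_field(tb, binary=False):
    """Convert a size in TB to the whole number typed into the size field.

    Assumption: the field takes GB (factor 1000) or GiB (factor 1024).
    Which one the dialog really uses is not confirmed here.
    """
    factor = 1024 if binary else 1000
    return round(tb * factor)


pool_tb = 5.85  # size shown for the pool in the dialog

decimal_value = tb_to_size_field(pool_tb)               # what I entered first
binary_value = tb_to_size_field(pool_tb, binary=True)   # if the unit is binary

print(decimal_value, binary_value)

# Smallest value I tried, as a share of the displayed pool size
print(round(5000 / decimal_value * 100, 1), "%")
```

So even the smallest value I tried is only about 85% of what the dialog shows as available, and it is still rejected.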

How can I solve this? And more generally, how can I add a disk to a VM that uses 100% of the size of the pool or LVM?

Thanks in advance.
