Hello, I have apparently the same issue reported here https://forum.proxmox.com/threads/e...failed-command-error-protocol-canceled.88675/

I think that the backup is interrupted by a WAN link down caused by a connection reset from the ISP, but that's not the point.

This `protocol canceled` error is erratic but each time I got it, it corrupted the VM and I had to run fsck in rescue mode to restart the VM OS.

Note that the backup is daily, other VMs backup woks perfectly.

I tried multiple things to avoid it without results:

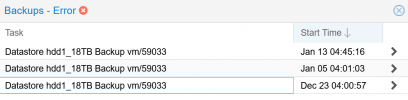

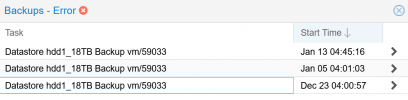

This is the ProxmoxVE backup task error report

This is the ProxmoxBS latest error report

This is the VM system log (https://pastebin.com/E0fPj6T7)

VM config file

Is there something that could explain that a fail backup corrupt the VM ?

Since I'm pretty sure that the corrupted VM state comes from a fail backup while running the VM, is there a way to wait that the backup has finished before restarting the VM in stop mode ?

I think that the backup is interrupted by a WAN link down caused by a connection reset from the ISP, but that's not the point.

This `protocol canceled` error is erratic but each time I got it, it corrupted the VM and I had to run fsck in rescue mode to restart the VM OS.

Note that the backup is daily, other VMs backup woks perfectly.

I tried multiple things to avoid it without results:

- Use a different disk storage for the VM

- Deploying the VM on another server (rented a new baremetal)

- Backup in snapshot mode

- Backup in stop mode

- Rebuild the VM from scratch (new VM, new disks, new OS)

This is the ProxmoxVE backup task error report

Code:

INFO: starting new backup job: vzdump 59033 --storage thor_pbs_1 --mailnotification always --mode stop --notes-template '{{guestname}}/{{node}}' --quiet 1

INFO: Starting Backup of VM 59033 (qemu)

INFO: Backup started at 2023-01-13 03:45:02

INFO: status = running

INFO: backup mode: stop

INFO: ionice priority: 7

INFO: VM Name: dallas

INFO: include disk 'scsi0' 'local:59033/vm-59033-disk-2.qcow2' 24G

INFO: include disk 'scsi1' 'local:59033/vm-59033-disk-3.qcow2' 128G

INFO: stopping virtual guest

INFO: creating Proxmox Backup Server archive 'vm/59033/2023-01-13T03:45:02Z'

INFO: starting kvm to execute backup task

INFO: started backup task '5ba3c8a3-e0a6-4eaa-8a48-772b6310fdfc'

INFO: resuming VM again after 15 seconds

INFO: scsi0: dirty-bitmap status: created new

INFO: scsi1: dirty-bitmap status: created new

INFO: 3% (5.2 GiB of 152.0 GiB) in 3s, read: 1.7 GiB/s, write: 2.7 MiB/s

INFO: 6% (10.5 GiB of 152.0 GiB) in 6s, read: 1.8 GiB/s, write: 2.7 MiB/s

INFO: 10% (15.7 GiB of 152.0 GiB) in 9s, read: 1.7 GiB/s, write: 21.3 MiB/s

INFO: 12% (19.5 GiB of 152.0 GiB) in 12s, read: 1.3 GiB/s, write: 28.0 MiB/s

INFO: 13% (19.8 GiB of 152.0 GiB) in 19s, read: 41.1 MiB/s, write: 40.0 MiB/s

INFO: 14% (21.3 GiB of 152.0 GiB) in 42s, read: 69.0 MiB/s, write: 48.5 MiB/s

INFO: 15% (22.8 GiB of 152.0 GiB) in 1m 8s, read: 58.2 MiB/s, write: 42.6 MiB/s

INFO: 15% (24.2 GiB of 152.0 GiB) in 17m 58s, read: 1.5 MiB/s, write: 1.4 MiB/s

ERROR: backup write data failed: command error: protocol canceled

INFO: aborting backup job

INFO: resuming VM again

ERROR: Backup of VM 59033 failed - backup write data failed: command error: protocol canceled

INFO: Failed at 2023-01-13 04:03:15

INFO: Backup job finished with errors

TASK ERROR: job errorsThis is the ProxmoxBS latest error report

Code:

2023-01-13T04:45:16+01:00: starting new backup on datastore 'hdd1_18TB': "vm/59033/2023-01-13T03:45:02Z"

2023-01-13T04:45:17+01:00: download 'index.json.blob' from previous backup.

2023-01-13T04:45:17+01:00: register chunks in 'drive-scsi0.img.fidx' from previous backup.

2023-01-13T04:45:17+01:00: download 'drive-scsi0.img.fidx' from previous backup.

2023-01-13T04:45:17+01:00: created new fixed index 1 ("vm/59033/2023-01-13T03:45:02Z/drive-scsi0.img.fidx")

2023-01-13T04:45:17+01:00: register chunks in 'drive-scsi1.img.fidx' from previous backup.

2023-01-13T04:45:17+01:00: download 'drive-scsi1.img.fidx' from previous backup.

2023-01-13T04:45:17+01:00: created new fixed index 2 ("vm/59033/2023-01-13T03:45:02Z/drive-scsi1.img.fidx")

2023-01-13T04:45:17+01:00: add blob "/mnt/datastore/hdd1_18TB/pbs/vm/59033/2023-01-13T03:45:02Z/qemu-server.conf.blob" (473 bytes, comp: 473)

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: backup failed: connection error: timed out

2023-01-13T05:00:24+01:00: removing failed backup

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed out

2023-01-13T05:00:24+01:00: TASK ERROR: connection error: timed out

2023-01-13T05:00:24+01:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: timed outThis is the VM system log (https://pastebin.com/E0fPj6T7)

Code:

Jan 13 04:49:29 ip199 kernel: [ 242.707341] INFO: task kworker/u16:0:7 blocked for more than 120 seconds.

Jan 13 04:49:29 ip199 kernel: [ 242.707362] Not tainted 5.10.0-20-amd64 #1 Debian 5.10.158-2

Jan 13 04:49:29 ip199 kernel: [ 242.707377] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Jan 13 04:49:29 ip199 kernel: [ 242.707394] task:kworker/u16:0 state:D stack: 0 pid: 7 ppid: 2 flags:0x00004000

Jan 13 04:49:29 ip199 kernel: [ 242.707399] Workqueue: writeback wb_workfn (flush-8:16)

Jan 13 04:49:29 ip199 kernel: [ 242.707401] Call Trace:

Jan 13 04:49:29 ip199 kernel: [ 242.707407] __schedule+0x282/0x880

Jan 13 04:49:29 ip199 kernel: [ 242.707417] ? blk_mq_flush_plug_list+0x100/0x1a0

Jan 13 04:49:29 ip199 kernel: [ 242.707419] ? out_of_line_wait_on_bit_lock+0xb0/0xb0

Jan 13 04:49:29 ip199 kernel: [ 242.707420] schedule+0x46/0xb0

Jan 13 04:49:29 ip199 kernel: [ 242.707421] io_schedule+0x42/0x70

Jan 13 04:49:29 ip199 kernel: [ 242.707423] bit_wait_io+0xd/0x60

Jan 13 04:49:29 ip199 kernel: [ 242.707424] __wait_on_bit_lock+0x5d/0xb0

Jan 13 04:49:29 ip199 kernel: [ 242.707425] out_of_line_wait_on_bit_lock+0x92/0xb0

Jan 13 04:49:29 ip199 kernel: [ 242.707428] ? var_wake_function+0x30/0x30

Jan 13 04:49:29 ip199 kernel: [ 242.707433] do_get_write_access+0x1e7/0x3d0 [jbd2]

Jan 13 04:49:29 ip199 kernel: [ 242.707436] jbd2_journal_get_write_access+0x67/0x90 [jbd2]

Jan 13 04:49:29 ip199 kernel: [ 242.707447] __ext4_journal_get_write_access+0x77/0x130 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707459] ext4_mb_mark_diskspace_used+0x7a/0x420 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707469] ext4_mb_new_blocks+0x4bf/0xf40 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707478] ? ext4_find_extent+0x1af/0x450 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707487] ext4_ext_map_blocks+0x861/0x18c0 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707489] ? release_pages+0x3d8/0x460

Jan 13 04:49:29 ip199 kernel: [ 242.707490] ? __pagevec_release+0x1c/0x60

Jan 13 04:49:29 ip199 kernel: [ 242.707499] ext4_map_blocks+0x18e/0x5b0 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707509] ext4_writepages+0x735/0xfd0 [ext4]

Jan 13 04:49:29 ip199 kernel: [ 242.707510] ? update_load_avg+0x7a/0x5d0

Jan 13 04:49:29 ip199 kernel: [ 242.707513] ? update_sd_lb_stats.constprop.0+0x81b/0x8a0

Jan 13 04:49:29 ip199 kernel: [ 242.707515] do_writepages+0x34/0xc0

Jan 13 04:49:29 ip199 kernel: [ 242.707518] ? fprop_reflect_period_percpu.isra.0+0x7b/0xc0

Jan 13 04:49:29 ip199 kernel: [ 242.707519] __writeback_single_inode+0x39/0x2a0

Jan 13 04:49:29 ip199 kernel: [ 242.707521] writeback_sb_inodes+0x20d/0x4a0

Jan 13 04:49:29 ip199 kernel: [ 242.707523] __writeback_inodes_wb+0x4c/0xe0

Jan 13 04:49:29 ip199 kernel: [ 242.707524] wb_writeback+0x1d8/0x2a0

Jan 13 04:49:29 ip199 kernel: [ 242.707526] wb_workfn+0x296/0x4e0

Jan 13 04:49:29 ip199 kernel: [ 242.707528] process_one_work+0x1b6/0x350

Jan 13 04:49:29 ip199 kernel: [ 242.707529] worker_thread+0x53/0x3e0

Jan 13 04:49:29 ip199 kernel: [ 242.707530] ? process_one_work+0x350/0x350

Jan 13 04:49:29 ip199 kernel: [ 242.707531] kthread+0x11b/0x140

Jan 13 04:49:29 ip199 kernel: [ 242.707532] ? __kthread_bind_mask+0x60/0x60

Jan 13 04:49:29 ip199 kernel: [ 242.707534] ret_from_fork+0x22/0x30

Jan 13 04:49:29 ip199 kernel: [ 242.707540] INFO: task kworker/u16:1:52 blocked for more than 120 seconds.

Jan 13 04:49:29 ip199 kernel: [ 242.707556] Not tainted 5.10.0-20-amd64 #1 Debian 5.10.158-2

Jan 13 04:49:29 ip199 kernel: [ 242.707570] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Jan 13 04:49:29 ip199 kernel: [ 242.707587] task:kworker/u16:1 state:D stack: 0 pid: 52 ppid: 2 flags:0x00004000

Jan 13 04:49:29 ip199 kernel: [ 242.707598] Workqueue: ext4-rsv-conversion ext4_end_io_rsv_work [ext4]

...

Jan 13 04:49:29 ip199 kernel: [ 242.708237] RIP: 0033:0x7fa3c20eab4e

Jan 13 04:49:29 ip199 kernel: [ 242.708237] RSP: 002b:00007ffdd85faa78 EFLAGS: 00010246

Jan 13 04:49:29 ip199 kernel: [ 242.708238] RAX: 00007fa3b6d2c540 RBX: 00007ffdd85faba0 RCX: 303030302e373034

Jan 13 04:49:29 ip199 kernel: [ 242.708239] RDX: 0000000000000008 RSI: 303030302e373034 RDI: 00007fa3b6d2c540

Jan 13 04:49:29 ip199 kernel: [ 242.708239] RBP: 00007fa3c29abb21 R08: 00007fa3b6d2c540 R09: 0000000000000000

Jan 13 04:49:29 ip199 kernel: [ 242.708240] R10: 0000000000000004 R11: 00007fa3c2b11f78 R12: 0000000000000008

Jan 13 04:49:29 ip199 kernel: [ 242.708240] R13: 00007ffdd85fabd0 R14: 0000000000000000 R15: 00007fa3c29f164cVM config file

Code:

root@njord:/etc/pve/qemu-server# cat 59033.conf

agent: 1

boot: order=scsi0;sata2

cores: 8

cpu: host

memory: 16384

name: dallas

net0: virtio=00:50:56:09:c9:d3,bridge=vmbr0,firewall=1

net1: virtio=00:50:56:81:ab:38,bridge=vmbr1,firewall=1

numa: 0

onboot: 1

ostype: l26

sata2: local:iso/gparted-live-1.4.0-6-amd64.iso,media=cdrom,size=484M

scsi0: local:59033/vm-59033-disk-2.qcow2,aio=threads,discard=on,iothread=1,size=24G,ssd=1

scsi1: local:59033/vm-59033-disk-3.qcow2,aio=threads,discard=on,iothread=1,size=128G,ssd=1

scsihw: virtio-scsi-single

smbios1: uuid=82dc0b9b-e7cb-4472-93e4-67a77ee756b4

sockets: 1

spice_enhancements: videostreaming=filter

startup: order=33

tablet: 0

vga: memory=8

vmgenid: 8403709f-c344-450b-8d4c-1f39c30e5abcIs there something that could explain that a fail backup corrupt the VM ?

Since I'm pretty sure that the corrupted VM state comes from a fail backup while running the VM, is there a way to wait that the backup has finished before restarting the VM in stop mode ?