Looking for some guidance on how to correctly set the MTU for a cluster with a dedicated/separate network for Corosync traffic.

I have a (rather) simple setup of 3 pve nodes, each having 2 physical interfaces:

My question is how to correctly set the MTU on that mellanox fiber card (enp65s0) to 9000? I have seen reference to a "netmtu" setting that was available in Corosync.conf, but was deprecated? The current documentation doesn't mention it, so I am guessing that is the case.

I have tested the jumbo frames using ip link set mtu 9000 on each node before going any further,ensured that it's set on the Mikrotik switch, etc. Everything works nicely between nodes, iperf is successful, as are pings, etc.

Edit: Updated Solution - After help from fabian, the problem was indeed the switch's MTU settings not being properly set.

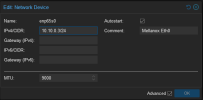

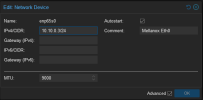

If I set it on the webui's config screen as shown below, everything will work with the exception of the webui, which won't correctly communicate with other nodes in the cluster (only the one directly connected to). Loading any page or interacting with any other node will fail. The specific error from the WebUI is a 596, also shown below.

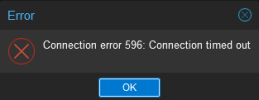

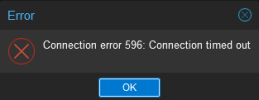

Example error when trying to access anything from another node aside from the one connected to:

I notice that:

Thank you in advance for pointing me in the right direction!

I have a (rather) simple setup of 3 pve nodes, each having 2 physical interfaces:

- enp65s0: A mellanox fiber nic. Used by corosync in ring config (see below) for migration/internode communication

- enp1s0: An onboard 10GBE nic. Used for the VMBR that all VM traffic goes into/out of. Working just fine, even with problem below.

My question is how to correctly set the MTU on that mellanox fiber card (enp65s0) to 9000? I have seen reference to a "netmtu" setting that was available in Corosync.conf, but was deprecated? The current documentation doesn't mention it, so I am guessing that is the case.

I have tested the jumbo frames using ip link set mtu 9000 on each node before going any further,

Edit: Updated Solution - After help from fabian, the problem was indeed the switch's MTU settings not being properly set.

If I set it on the webui's config screen as shown below, everything will work with the exception of the webui, which won't correctly communicate with other nodes in the cluster (only the one directly connected to). Loading any page or interacting with any other node will fail. The specific error from the WebUI is a 596, also shown below.
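
One quick way to confirm that an MTU change made in the web UI actually reached the kernel is to read it back from sysfs, which holds the same value `ip link show` prints. Below is a minimal Python sketch, assuming the standard Linux `/sys/class/net/<iface>/mtu` layout; the `EXPECTED_MTU` table and both function names are hypothetical, and the table only lists enp65s0 from this post. Note that a correct local MTU says nothing about whether the switch forwards jumbo frames.

```python
from __future__ import annotations

from pathlib import Path

SYSFS_NET = Path("/sys/class/net")

# Hypothetical table of expected MTUs. enp65s0 -> 9000 comes from the post;
# add other interfaces (e.g. the port under the VM bridge) as needed.
EXPECTED_MTU = {"enp65s0": 9000}


def read_mtu(iface: str, sysfs_root: Path = SYSFS_NET) -> int | None:
    """Kernel MTU for `iface` (the value `ip link show` prints), or None if the interface is absent."""
    mtu_file = sysfs_root / iface / "mtu"
    if not mtu_file.exists():
        return None
    return int(mtu_file.read_text().strip())


def mtu_mismatches(expected: dict[str, int], sysfs_root: Path = SYSFS_NET) -> dict[str, tuple[int, int | None]]:
    """Map each interface whose MTU differs from the expected value to (expected, actual)."""
    result = {}
    for iface, want in expected.items():
        have = read_mtu(iface, sysfs_root)
        if have != want:
            result[iface] = (want, have)
    return result


if __name__ == "__main__":
    problems = mtu_mismatches(EXPECTED_MTU)
    if not problems:
        print("all listed interfaces have the expected MTU")
    for iface, (want, have) in problems.items():
        print(f"{iface}: expected {want}, found {have}")
```

Run it on each node after applying the network config; an empty result only means the local interfaces agree with the table.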

Example error when trying to access anything on a node other than the one I'm connected to:

I notice that:

- `corosync-cfgtool -n` still shows the default MTU after the change above, even after a full cluster reboot.

- A CLI `qm migrate` of an offline test VM also fails, with error 255. It does pick the correct cluster network (10.10.x.x), which is strange, since the config tool reports the target node as connected and reachable.

- Additionally, `ip link show` reports the expected MTU for both the Corosync network (9000) and the vmbr adapter (1500).
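
Since `ip link show` only reflects local configuration, seeing 9000 on every node does not prove that every hop in between (here, the switch) forwards jumbo frames. A plain `ping` sends only 56 data bytes, so it succeeds either way. Below is a minimal Python sketch that builds an iputils `ping` command sending full-size packets with fragmentation prohibited (`-M do`). For IPv4 without options, the largest ICMP echo payload is the MTU minus 20 bytes of IP header and 8 bytes of ICMP header, i.e. 8972 for an MTU of 9000. The helper names and the `node2` hostname are hypothetical.

```python
from __future__ import annotations

import shlex

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo request header


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet of size `mtu`."""
    payload = mtu - IPV4_HEADER - ICMP_HEADER
    if payload < 0:
        raise ValueError(f"MTU {mtu} is too small for an IPv4 ICMP echo")
    return payload


def df_ping_command(host: str, mtu: int = 9000, count: int = 3) -> list[str]:
    """argv for iputils ping: full-size payload (-s), fragmentation prohibited (-M do), `count` probes (-c)."""
    return ["ping", "-M", "do", "-s", str(icmp_payload_for_mtu(mtu)), "-c", str(count), host]


if __name__ == "__main__":
    # "node2" is a hypothetical hostname; use a peer node's address on the cluster network.
    print(shlex.join(df_ping_command("node2")))
```

Running it prints `ping -M do -s 8972 -c 3 node2`. If that command fails between two nodes while a plain `ping` works, something on the path is most likely still at a smaller MTU, which would be consistent with the switch misconfiguration mentioned in the edit.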

Thank you in advance for pointing me in the right direction!
