I see plenty of these:

[TOTEM ] Retransmit List:................

And this is from last night, when the cluster was lost in the same way:

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6884 ms, flushing membership messages.

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6933 ms, flushing membership messages.

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6933 ms, flushing membership messages.

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6934 ms, flushing membership messages.

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6934 ms, flushing membership messages.

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6934 ms, flushing membership messages.

Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6983 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [QUORUM] Sync members[6]: 1 2 3 4 6 9

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] A new membership (1.1f4a5) was formed. Members

Jan 17 00:33:18 d3 corosync[20686]: [QUORUM] Members[6]: 1 2 3 4 6 9

Jan 17 00:33:18 d3 corosync[20686]: [MAIN ] Completed service synchronization, ready to provide service.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6634 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6635 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6635 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6635 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6635 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [QUORUM] Sync members[6]: 1 2 3 4 6 9

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] A new membership (1.1f4a9) was formed. Members

Jan 17 00:33:18 d3 corosync[20686]: [QUORUM] Members[6]: 1 2 3 4 6 9

Jan 17 00:33:18 d3 corosync[20686]: [MAIN ] Completed service synchronization, ready to provide service.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6684 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6684 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6684 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6685 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6685 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6728 ms, flushing membership messages.

Jan 17 00:33:18 d3 corosync[20686]: [TOTEM ] Process pause detected for 6729 ms, flushing membership messages.

This repeats all night until:

Jan 17 00:33:22 d3 corosync[20686]: [TOTEM ] A new membership (1.1f509) was formed. Members joined: 2 3 4 6 9 left: 2 3 4 6 9

Jan 17 00:33:22 d3 corosync[20686]: [TOTEM ] Failed to receive the leave message. failed: 2 3 4 6 9

Jan 17 00:33:22 d3 corosync[20686]: [QUORUM] Sync members[6]: 1 2 3 4 6 9

Jan 17 00:33:22 d3 corosync[20686]: [QUORUM] Sync joined[5]: 2 3 4 6 9

Jan 17 00:33:22 d3 corosync[20686]: [QUORUM] Sync left[5]: 2 3 4 6 9

Jan 17 00:33:27 d3 corosync[20686]: [TOTEM ] A new membership (1.1f975) was formed. Members joined: 4 6 9 left: 4 6 9

Jan 17 00:33:27 d3 corosync[20686]: [TOTEM ] Failed to receive the leave message. failed: 4 6 9

Jan 17 00:33:27 d3 corosync[20686]: [QUORUM] Sync members[7]: 1 2 3 4 5 6 9

Jan 17 00:33:27 d3 corosync[20686]: [QUORUM] Sync joined[1]: 5

Jan 17 00:33:27 d3 corosync[20686]: [TOTEM ] A new membership (1.1f979) was formed. Members joined: 5

This repeats all night until:

Jan 17 03:44:30 d3 corosync[20686]: [KNET ] link: host: 3 link: 0 is down

Jan 17 03:44:30 d3 corosync[20686]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)

Jan 17 03:44:30 d3 corosync[20686]: [KNET ] host: host: 3 has no active links

Jan 17 03:44:37 d3 corosync[20686]: [KNET ] rx: host: 3 link: 0 is up

Jan 17 03:44:37 d3 corosync[20686]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)

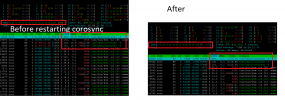

I restarted corosync because the cluster was lost and not working.

And this is what came after:

Jan 17 08:19:18 d3 corosync[17949]: [TOTEM ] A new membership (1.1f98b) was formed. Members joined: 5

Jan 17 08:19:18 d3 corosync[17949]: [QUORUM] Members[2]: 1 5

Jan 17 08:19:18 d3 corosync[17949]: [MAIN ] Completed service synchronization, ready to provide service.

Jan 17 08:19:18 d3 corosync[17949]: [QUORUM] Sync members[7]: 1 2 3 4 5 6 9

Jan 17 08:19:18 d3 corosync[17949]: [QUORUM] Sync joined[5]: 2 3 4 6 9

Jan 17 08:19:18 d3 corosync[17949]: [TOTEM ] A new membership (1.1f98f) was formed. Members joined: 2 3 4 6 9

Jan 17 08:19:18 d3 corosync[17949]: [QUORUM] This node is within the primary component and will provide service.

Jan 17 08:19:18 d3 corosync[17949]: [QUORUM] Members[7]: 1 2 3 4 5 6 9

Jan 17 08:19:18 d3 corosync[17949]: [MAIN ] Completed service synchronization, ready to provide service.

Jan 17 08:19:19 d3 corosync[17949]: [TOTEM ] Retransmit List: 82

Jan 17 08:19:20 d3 corosync[17949]: [TOTEM ] Retransmit List: 8c 8e 8f 91 92 94

Jan 17 08:19:20 d3 corosync[17949]: [TOTEM ] Retransmit List: 92 94

Jan 17 08:19:20 d3 corosync[17949]: [TOTEM ] Retransmit List: 89 8b 8c 8e 8f 91 92 94

And I also saw these two messages:

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [CPG ] *** 0x5586dd1bc1a0 can't mcast to group pve_kvstore_v1 state:1, error:12

Jan 17 12:21:30 d3 corosync[17949]: [MAIN ] qb_ipcs_event_send: Transport endpoint is not connected (107)

The log is full of these.
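Since the log is too large to read by eye, a rough way to see which messages dominate is to count lines per subsystem tag and collect the reported pause durations. This is only a sketch: the `summarize` function and both regular expressions are my own assumptions, written against the line format pasted above, and are not part of corosync or Proxmox.

```python
import re
from collections import Counter

# Assumed line format, taken from the excerpts above:
#   "Jan 17 00:33:09 d3 corosync[20686]: [TOTEM ] Process pause detected for 6884 ms, ..."
TAG_RE = re.compile(r"corosync\[\d+\]:\s+\[(\w+)\s*\]")
PAUSE_RE = re.compile(r"Process pause detected for (\d+) ms")


def summarize(lines):
    """Return (lines per subsystem tag, list of pause durations in ms)."""
    tags = Counter()
    pauses = []
    for line in lines:
        tag = TAG_RE.search(line)
        if tag:
            tags[tag.group(1)] += 1
        pause = PAUSE_RE.search(line)
        if pause:
            pauses.append(int(pause.group(1)))
    return tags, pauses
```

It accepts any iterable of lines, so an open file with the saved corosync log can be passed directly, and `tags.most_common()` then lists the noisiest subsystems first.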

● corosync.service - Corosync Cluster Engine

Loaded: loaded (/lib/systemd/system/corosync.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2022-01-16 22:10:49 EET; 16h ago

Docs: man:corosync

man:corosync.conf

man:corosync_overview

Main PID: 23303 (corosync)

Tasks: 9 (limit: 4915)

Memory: 4.1G

CGroup: /system.slice/corosync.service

└─23303 /usr/sbin/corosync -f

Jan 17 14:21:06 d4 corosync[23303]: [TOTEM ] Retransmit List: 5d51

Jan 17 14:28:46 d4 corosync[23303]: [TOTEM ] Retransmit List: 6ba1

Jan 17 14:43:16 d4 corosync[23303]: [TOTEM ] Retransmit List: 86ce

Jan 17 14:49:06 d4 corosync[23303]: [TOTEM ] Retransmit List: 9196

Jan 17 14:51:29 d4 corosync[23303]: [TOTEM ] Retransmit List: 9603

Jan 17 14:59:03 d4 corosync[23303]: [KNET ] link: host: 3 link: 0 is down

Jan 17 14:59:03 d4 corosync[23303]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)

Jan 17 14:59:03 d4 corosync[23303]: [KNET ] host: host: 3 has no active links

Jan 17 14:59:11 d4 corosync[23303]: [KNET ] rx: host: 3 link: 0 is up

Jan 17 14:59:11 d4 corosync[23303]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)

Memory is at 4.1G now; within a week it will be back at 30GB.