Hi,

I have an almost idle test cluster with 12 nodes (pve-7.4). It ran for a year or so without issues, but last week I noticed in the web GUI that only the local node's information showed up. I logged in to three randomly picked nodes and saw corosync at 100% CPU, apparently busy-looping on some network error; the log showed many "Retransmit List:" entries. Today I planned to take a look. The load is gone because the corosync processes have been killed by the out-of-memory (OOM) killer, and the nodes report "Flags: Quorate".
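To see when the "Retransmit List:" messages started and how dense they got, one option is to count them per minute. The following is a minimal sketch, assuming the log was exported with `journalctl -u corosync` in its default short format (each line starting with month, day and HH:MM:SS). The script name `retransmits.py`, the file name `corosync.log` and the function `retransmits_per_minute` are hypothetical.

```python
import sys
from collections import Counter


def retransmits_per_minute(lines):
    """Count log lines containing "Retransmit List:", grouped by minute.

    Assumes journalctl's default "short" output format, where each line
    starts with month, day and HH:MM:SS, e.g. "Jan 16 16:04:09 host ...".
    """
    counts = Counter()  # keeps insertion order, i.e. the chronological log order
    for line in lines:
        if "Retransmit List:" not in line:
            continue
        fields = line.split(maxsplit=3)
        if len(fields) < 3:
            continue  # not a line in the assumed format
        month, day, clock = fields[0], fields[1], fields[2]
        counts[f"{month} {day} {clock[:5]}"] += 1  # clock[:5] is "HH:MM"
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 retransmits.py corosync.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for minute, n in retransmits_per_minute(fh).items():
            print(f"{minute}  {n}")
```

Usage sketch: `journalctl -u corosync --since "2025-01-16" > corosync.log`, then `python3 retransmits.py corosync.log`. Reading from a file rather than stdin keeps the script from blocking when run without input.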

I have no clue what the best next steps would be.

I think I will start by upgrading to the latest PVE version and consider building corosync with debug symbols so that I can debug it, but I'm walking in the dark...

Any hints or recommendations?

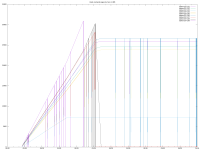


Current state of corosync.service on all nodes:

```
$ ansible all -m shell -a "systemctl status corosync.service" | grep Active
Active: inactive (dead)
Active: active (running) since Fri 2023-09-22 07:08:15 CEST; 1 years 3 months ago
Active: failed (Result: oom-kill) since Thu 2025-01-16 18:02:25 CET; 3 days ago
Active: active (running) since Mon 2023-08-14 19:45:28 CEST; 1 years 5 months ago
Active: active (running) since Mon 2023-08-14 19:45:25 CEST; 1 years 5 months ago
Active: failed (Result: oom-kill) since Thu 2025-01-16 16:04:09 CET; 3 days ago
Active: failed (Result: oom-kill) since Thu 2025-01-16 17:56:14 CET; 3 days ago
Active: active (running) since Thu 2025-01-16 16:15:10 CET; 3 days ago
Active: failed (Result: oom-kill) since Thu 2025-01-16 17:47:49 CET; 3 days ago
Active: active (running) since Fri 2023-09-22 07:08:23 CEST; 1 years 3 months ago
Active: active (running) since Tue 2023-11-21 19:44:39 CET; 1 years 1 months ago
Active: active (running) since Fri 2023-09-22 07:08:23 CEST; 1 years 3 months ago
Active: active (running) since Fri 2023-09-22 07:08:08 CEST; 1 years 3 months ago
Active: active (running) since Fri 2023-09-22 07:08:08 CEST; 1 years 3 months ago
```
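With more nodes, tallying the unit states is quicker than reading the list. A minimal sketch, assuming the `grep Active` output is saved to a file; the file name `corosync-active.txt`, the script name `tally_states.py` and the function `tally_states` are hypothetical.

```python
import sys
from collections import Counter


def tally_states(lines):
    """Tally systemd unit states from "Active: ..." lines.

    The state is the text between "Active:" and " since", e.g.
    "failed (Result: oom-kill)"; lines without "Active:" are skipped.
    """
    counts = Counter()
    for line in lines:
        _, found, rest = line.partition("Active:")
        if not found:
            continue
        state = rest.split(" since ")[0].strip()
        counts[state] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 tally_states.py corosync-active.txt
    with open(sys.argv[1], encoding="utf-8") as fh:
        for state, n in tally_states(fh).most_common():
            print(f"{n:3d}  {state}")
```

For the listing above this gives 9 active (running), 4 failed (Result: oom-kill) and 1 inactive (dead). Because `grep Active` also drops the per-host header lines that Ansible prints, mapping a state back to a node name needs the unfiltered output.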
