Every so often (like right now), I'll start seeing a lot of KNET logs about a link going down and coming back up. Sometimes rebooting one node will fix it, sometimes it won't. It seems to happen randomly after node reboots or some other event. How can I determine which node is causing this or what part of the infrastructure is causing it?

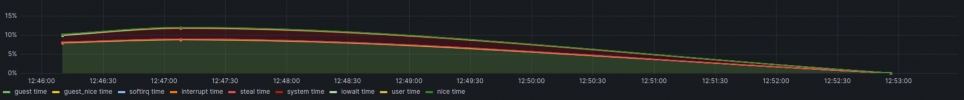

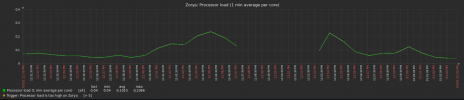

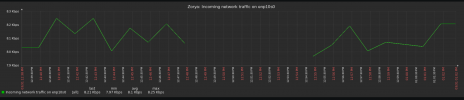

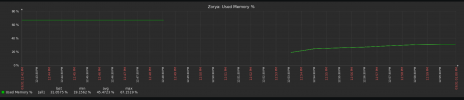

I have two 10GbE interfaces and two GbE interfaces LAG'd together on each host, except one, which also has two 10GbE but four GbE, all LAG'd together. Each logical interface trunks several VLANs.

One of the 10GbE VLANs carries the main corosync network as well as the Ceph front-side network. The other 10GbE VLAN carries the secondary corosync ring and the Ceph back-side network. Each 10GbE link is connected via DAC to a different switch. Here are the logs I see on each node:

```
Jan 24 20:23:31 pve2 corosync[6080]: [KNET ] link: host: 5 link: 1 is down
Jan 24 20:23:31 pve2 corosync[6080]: [KNET ] host: host: 5 (passive) best link: 0 (pri: 1)
Jan 24 20:23:34 pve2 corosync[6080]: [KNET ] rx: host: 5 link: 1 is up
Jan 24 20:23:34 pve2 corosync[6080]: [KNET ] link: Resetting MTU for link 1 because host 5 joined
Jan 24 20:23:34 pve2 corosync[6080]: [KNET ] host: host: 5 (passive) best link: 0 (pri: 1)
Jan 24 20:23:34 pve2 corosync[6080]: [KNET ] pmtud: Global data MTU changed to: 1397
```

Here's my corosync.conf:

```
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: pve1
    nodeid: 4
    quorum_votes: 1
    ring0_addr: 10.10.0.32
    ring1_addr: 10.13.1.4
  }
  node {
    name: pve2
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.10.0.1
    ring1_addr: 10.13.1.1
  }
  node {
    name: pve3
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 10.10.0.2
    ring1_addr: 10.13.1.2
  }
  node {
    name: pve4
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.10.0.3
    ring1_addr: 10.13.1.3
  }
  node {
    name: pve5
    nodeid: 5
    quorum_votes: 1
    ring0_addr: 10.10.0.20
    ring1_addr: 10.13.1.5
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: PVE
  config_version: 6
  interface {
    bindnetaddr: 10.10.0.1
    ringnumber: 0
  }
  interface {
    bindnetaddr: 10.13.1.1
    ringnumber: 1
  }
  ip_version: ipv4
  rrp_mode: passive
  secauth: on
  version: 2
}
```

Here's a graph of traffic from the switches over the last 15 minutes (ignore the vertical lines):

Ring 0 is the "Private" network and Ring 1 is the "Secondary" network, which shares a VLAN with the "Storage" network. It looks like it's Ring 1 that's flapping...but why?
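One rough way to narrow down which node or path is involved is to collect the corosync logs from every node and tally the "link ... is down" events by reporting node, remote node, and link. The sketch below assumes the log lines look like the ones quoted above; `tally_link_down` and `format_report` are hypothetical helper names, and the `NODE_NAMES` mapping is simply copied from the nodelist in my corosync.conf.

```python
import re
from collections import Counter

# nodeid -> node name, copied from the nodelist in the corosync.conf above.
NODE_NAMES = {1: "pve2", 2: "pve4", 3: "pve3", 4: "pve1", 5: "pve5"}

# Matches lines such as:
#   Jan 24 20:23:31 pve2 corosync[6080]: [KNET ] link: host: 5 link: 1 is down
LINK_DOWN = re.compile(
    r"(?P<reporter>\S+)\s+corosync\[\d+\]:\s+\[KNET\s*\]\s+"
    r"link:\s+host:\s+(?P<host>\d+)\s+link:\s+(?P<link>\d+)\s+is down"
)


def tally_link_down(lines):
    """Count 'link down' events per (reporting node, remote node, link)."""
    counts = Counter()
    for line in lines:
        match = LINK_DOWN.search(line)
        if match is None:
            continue  # not a link-down event
        nodeid = int(match.group("host"))
        remote = NODE_NAMES.get(nodeid, f"nodeid {nodeid}")
        key = (match.group("reporter"), remote, int(match.group("link")))
        counts[key] += 1
    return counts


def format_report(counts):
    """Return one summary line per (reporter, remote, link), most frequent first."""
    return [
        f"{reporter} lost link {link} to {remote}: {n} time(s)"
        for (reporter, remote, link), n in counts.most_common()
    ]


# Demo on the log excerpt quoted above.
SAMPLE = [
    "Jan 24 20:23:31 pve2 corosync[6080]: [KNET ] link: host: 5 link: 1 is down",
    "Jan 24 20:23:34 pve2 corosync[6080]: [KNET ] rx: host: 5 link: 1 is up",
]
for row in format_report(tally_link_down(SAMPLE)):
    print(row)
```

To use it on real data, the output of `journalctl -u corosync` from each node could be saved to a file and passed in line by line. If most nodes report losing link 1 to the same remote node, that node's Ring 1 path (NIC, DAC, switch port) is the likely suspect. If the events are spread evenly across many node pairs, a shared component such as a switch or the VLAN itself seems more likely.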
