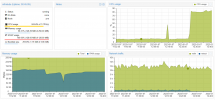

So I limited the cgroup2.memory.high to 60% to make the issue happen faster. As you can see, once it hit the high memory cap it started dumping things to swap, and once swap filled up the CPU usage spiked and the CT quit responding on the network. Any other suggestions on how to troubleshoot this? This CT was fine prior to the upgrade to Proxmox 7.3, and no other CTs appear to be having issues, so I am guessing it's something to do with the packages this CT uses.

https://github.com/nebulous/infinitude/wiki/Installing-Infinitude-on-Raspberry-PI-(raspbian)
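One way to narrow this down might be to log the container's cgroup v2 memory counters from the host while the problem builds up, so you can see when the `memory.high` threshold starts being hit and how fast swap grows. The following is only a minimal sketch: it assumes the CT's cgroup lives under `/sys/fs/cgroup/lxc/<CTID>` (check the actual path on your host), and the helper names `snapshot` and `watch` are made up for illustration.

```python
import time
from pathlib import Path

# Assumption: cgroup v2 host with the container's cgroup at /sys/fs/cgroup/lxc/<CTID>.
CGROUP_ROOT = Path("/sys/fs/cgroup/lxc")


def read_value(path):
    """Return the file content as an int; None if the file is missing or says 'max'."""
    try:
        text = path.read_text().strip()
    except OSError:
        return None
    return None if text == "max" else int(text)


def read_events(path):
    """Parse a flat keyed file such as memory.events into a dict of counters."""
    events = {}
    try:
        lines = path.read_text().splitlines()
    except OSError:
        return events
    for line in lines:
        key, _, value = line.partition(" ")
        if value:
            events[key] = int(value)
    return events


def snapshot(ctid, root=CGROUP_ROOT):
    """Collect the memory counters of one container (hypothetical helper)."""
    base = Path(root) / str(ctid)
    return {
        "current": read_value(base / "memory.current"),
        "high": read_value(base / "memory.high"),
        "swap": read_value(base / "memory.swap.current"),
        "events": read_events(base / "memory.events"),
    }


def watch(ctid, interval=10, count=6):
    """Print a timestamped snapshot every `interval` seconds, `count` times."""
    for _ in range(count):
        print(time.strftime("%H:%M:%S"), snapshot(ctid))
        time.sleep(interval)
```

Running something like `watch(101, interval=30, count=120)` as root on the host (101 being a placeholder CT ID) and comparing the `high` counter in `events` with the swap value over time should show whether the process inside the CT is leaking steadily or jumps at a particular moment.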


I got too annoyed with Proxmox 7.3 and reinstalled the whole system from a Proxmox 7.2-3 ISO. For me, all the issues are gone now.

I also had issues on 7.3 where some USB devices were no longer recognized inside a container or a VM after about half a day.

For me it's clearly not an issue with the kernel. I tried a whole bunch of kernels on 7.3 and the issue persisted.

Seems like an issue with some package.

At the moment the following packages are due for upgrade. Any of them could be the troublemaker:

proxmox-mail-forward proxmox-offline-mirror-docs
proxmox-offline-mirror-helper pve-kernel-5.15.83-1-pve

The following packages will be upgraded:

base-files bash bind9-dnsutils bind9-host bind9-libs cifs-utils corosync
dbus dirmngr distro-info-data dpkg e2fsprogs gnupg gnupg-l10n gnupg-utils
gnutls-bin gpg gpg-agent gpg-wks-client gpg-wks-server gpgconf gpgsm gpgv
grub-common grub-efi-amd64-bin grub-pc grub-pc-bin grub2-common
isc-dhcp-client isc-dhcp-common krb5-locales libavahi-client3
libavahi-common-data libavahi-common3 libc-bin libc-l10n libcfg7 libcmap4
libcom-err2 libcorosync-common4 libcpg4 libcups2 libcurl3-gnutls libdbus-1-3
libexpat1 libext2fs2 libfreetype6 libfribidi0 libgssapi-krb5-2
libhttp-daemon-perl libk5crypto3 libknet1 libkrb5-3 libkrb5support0 libksba8
libldap-2.4-2 libldb2 libnftables1 libnozzle1 libnss-systemd libnvpair3linux
libpam-systemd libpcre2-8-0 libpixman-1-0 libproxmox-acme-perl
libproxmox-acme-plugins libproxmox-backup-qemu0 libproxmox-rs-perl
libpve-access-control libpve-cluster-api-perl libpve-cluster-perl
libpve-common-perl libpve-guest-common-perl libpve-http-server-perl
libpve-rs-perl libpve-storage-perl libquorum5 librados2-perl libsmbclient
libss2 libssl1.1 libsystemd0 libtasn1-6 libtiff5 libtpms0 libudev1
libuutil3linux libvirglrenderer1 libvotequorum8 libwbclient0 libxml2
libxslt1.1 libzfs4linux libzpool5linux locales logrotate logsave lxc-pve
nano nftables openssh-client openssh-server openssh-sftp-server openssl
procmail proxmox-archive-keyring proxmox-backup-client
proxmox-backup-file-restore proxmox-ve proxmox-widget-toolkit pve-cluster
pve-container pve-docs pve-edk2-firmware pve-firewall pve-firmware
pve-ha-manager pve-i18n pve-kernel-5.15 pve-kernel-helper pve-lxc-syscalld
pve-manager pve-qemu-kvm python3-ldb qemu-server rsyslog samba-common
samba-libs smbclient spl ssh swtpm swtpm-libs swtpm-tools systemd
systemd-sysv tcpdump tzdata udev xsltproc zfs-initramfs zfs-zed
zfsutils-linux
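Since that list is long, it may help to shortlist the packages most likely to touch containers or device handling before upgrading them one group at a time. Below is a rough sketch of such a filter. The name fragments in `SUSPECT_PARTS` are purely my guess at what is relevant, not a confirmed cause, and `shortlist` is a made-up helper name.

```python
# Hypothetical guess: name fragments of packages that deal with containers,
# cgroups or device handling. Adjust to whatever you suspect.
SUSPECT_PARTS = ("lxc", "pve-container", "systemd", "udev")


def shortlist(apt_text, parts=SUSPECT_PARTS):
    """Return the sorted package names that contain any of the given fragments."""
    names = apt_text.split()
    return sorted(n for n in names if any(p in n for p in parts))


# Small sample taken from the upgrade list above.
sample = "lxc-pve nano pve-container udev libudev1 tzdata"
print(shortlist(sample))
```

Pasting the full upgrade list into `sample` gives a much shorter set to test first, for example by upgrading only those packages and checking whether the problem appears.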