Please forgive me if I'm missing something obvious here, but I'm new to ZFS storage on Proxmox. I installed PVE 4.4 on a small SSD, then added a 4 TB ZFS pool built on 4Kn SATA drives. I added the storage and assigned it roles, resulting in this:

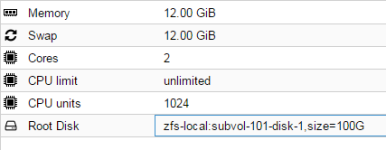

I used DAB to create a Debian 8.6 LXC template, then created CT101, which shows these resources:

Code:

Enabled Yes

Active Yes

Content Disk image, Container

Type ZFS

Usage 3.82% (137.36 GiB of 3.51 TiB)

Next, from the node command line, I started an rsync from an eSATA drive to /var/lib/lxc/101/var to restore ~71 GB of mail spool from another site. The result is that the data filled up the boot drive instead of landing on the ZFS pool:

Code:

rsync: write failed on "/var/lib/lxc/101/var/CommuniGate/Accounts/xyz.macnt/Di&-DomCrea.mbox": No space left on device (28)

rsync error: error in file IO (code 11) at receiver.c(393) [receiver=3.1.1]

root@pve1:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 10M 0 10M 0% /dev

tmpfs 3.2G 8.9M 3.2G 1% /run

/dev/dm-0 28G 28G 0 100% /

tmpfs 7.8G 25M 7.8G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup

/dev/sde2 253M 292K 252M 1% /boot/efi

xpool 3.4T 128K 3.4T 1% /xpool

xpool/data 3.4T 128K 3.4T 1% /xpool/data

xpool/data/subvol-100-disk-1 1000G 137G 864G 14% /xpool/data/subvol-100-disk-1

xpool/data/subvol-101-disk-1 100G 408M 100G 1% /xpool/data/subvol-101-disk-1

/dev/fuse 30M 16K 30M 1% /etc/pve

/dev/sdf1 359G 273G 69G 81% /mnt
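
For reference, here is a minimal sketch of a pre-flight check that could be run before a copy like this, to see which mounted filesystem actually backs the target path. The helper names `mount_of` and `check_target` are hypothetical (not part of Proxmox or rsync), and the sketch assumes POSIX `df -P` output with no spaces in device names or mount points. Going by the `df -h` listing above, nothing is mounted under /var/lib/lxc, so /var/lib/lxc/101/var would presumably resolve to `/` rather than to /xpool/data/subvol-101-disk-1.

```shell
#!/bin/sh
# Print the mount point that backs a given path.
# Assumes POSIX-format df output (df -P): the mount point is the 6th column
# of the second line, and neither device nor mount point contains spaces.
mount_of() {
    df -P "$1" | awk 'NR==2 {print $6}'
}

# Hypothetical guard: succeed only if the target path lives on the expected mount.
check_target() {
    target=$1
    expected=$2
    actual=$(mount_of "$target")
    if [ "$actual" != "$expected" ]; then
        echo "refusing: $target is on '$actual', not '$expected'" >&2
        return 1
    fi
    return 0
}
```

Usage might look like `check_target /var/lib/lxc/101/var /xpool/data/subvol-101-disk-1 && rsync ...`, so that the copy only starts when the destination really is on the container's ZFS subvolume. Whether writing directly into the subvolume's mount point is the right way to restore data into a container is a separate question; this only guards against filling the wrong filesystem.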