Hello,

I've been using PBS for a few weeks now and am very happy with its features, especially the deduplication. It has worked well for over a month. I run daily backups with a retention policy of 5-7-8-24 (last-daily-weekly-monthly).

The last update of the PBS server and client, to version 0.8.11, was performed three days ago.

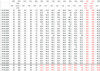

Since then, the backups have started to use much more space and take longer, because much less of the existing backup data is being reused (down from 98.8% to only 48.9%, see the attached example logs). There is no apparent reason for this on the container side: the backed-up container didn't have a major update or any noticeable change in usage. The main point is that this low reuse rate has not shown up just once, but on every backup since the update. I would have expected it to show up once at most, with the following backups going back to a better reuse rate. It does not only affect the amount of data copied; it also seems to use more space on the PBS itself, as I can clearly see a steeper increase in used storage than before.

This behaviour seems to show up on all containers, with varying degrees of severity (all were above 90% reuse rate before and now range from about 20% to 70%). Even stopped containers show this behaviour. VMs don't seem to be affected.
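For anyone who wants to compare the numbers across their own containers, here is a rough Python sketch that recomputes the reuse rate from the "had to upload X of Y" summary line in a task log. It assumes the line keeps the wording seen in my logs; the function name `reuse_rate` and the regular expression are made up for illustration and are not part of any PBS tool.

```python
import re

# Assumed wording of the client's per-archive summary line, e.g.
# "root.pxar: had to upload 625.99 MiB of 51.94 GiB in 188.10s, ..."
UPLOAD_RE = re.compile(
    r"(?P<archive>\S+): had to upload "
    r"(?P<up>[\d.]+) (?P<up_unit>[KMGT]iB) of "
    r"(?P<total>[\d.]+) (?P<total_unit>[KMGT]iB)"
)

# Binary unit multipliers, in bytes.
UNITS = {"KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def reuse_rate(line):
    """Return the reused share of an archive in percent, or None if the
    line is not an upload summary line."""
    m = UPLOAD_RE.search(line)
    if m is None:
        return None
    uploaded = float(m["up"]) * UNITS[m["up_unit"]]
    total = float(m["total"]) * UNITS[m["total_unit"]]
    # Whatever was not uploaded was reused from existing chunks.
    return 100.0 * (1.0 - uploaded / total)


if __name__ == "__main__":
    before = ("122: 2020-08-24 01:01:33 INFO: root.pxar: had to upload "
              "625.99 MiB of 51.94 GiB in 188.10s")
    after = ("122: 2020-08-25 01:08:34 INFO: root.pxar: had to upload "
             "26.56 GiB of 51.96 GiB in 417.92s")
    for label, line in (("before", before), ("after", after)):
        print(f"{label}: {reuse_rate(line):.1f}% reused")
```

Running this over the summary lines of each container's task log gives a quick before/after comparison without reading every log by hand.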

Is this a known behaviour after the recent pbs update? Has anyone else experienced this? Is there anything I could do about that? Can I provide further logs for investigation?

BEFORE:

Code:

122: 2020-08-24 01:01:32 INFO: Starting Backup of VM 122 (lxc)

122: 2020-08-24 01:01:32 INFO: status = running

122: 2020-08-24 01:01:32 INFO: CT Name: nextcloud

122: 2020-08-24 01:01:32 INFO: including mount point rootfs ('/') in backup

122: 2020-08-24 01:01:32 INFO: including mount point mp0 ('/mnt/mp0') in backup

122: 2020-08-24 01:01:32 INFO: backup mode: snapshot

122: 2020-08-24 01:01:32 INFO: ionice priority: 7

122: 2020-08-24 01:01:32 INFO: suspend vm to make snapshot

122: 2020-08-24 01:01:32 INFO: create storage snapshot 'vzdump'

122: 2020-08-24 01:01:33 INFO: resume vm

122: 2020-08-24 01:01:33 INFO: guest is online again after 1 seconds

122: 2020-08-24 01:01:33 INFO: creating Proxmox Backup Server archive 'ct/122/2020-08-23T23:01:32Z'

122: 2020-08-24 01:01:33 INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=none pct.conf:/var/tmp/vzdumptmp29006/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --include-dev /mnt/vzsnap0/./mnt/mp0 --skip-lost-and-found --backup-type ct --backup-id 122 --backup-time 1598223692 --repository backupuser@pbs@10.X.X.X:mp0

122: 2020-08-24 01:01:33 INFO: Starting backup: ct/122/2020-08-23T23:01:32Z

122: 2020-08-24 01:01:33 INFO: Client name: pve1

122: 2020-08-24 01:01:33 INFO: Starting protocol: 2020-08-24T01:01:33+02:00

122: 2020-08-24 01:01:33 INFO: Upload config file '/var/tmp/vzdumptmp29006/etc/vzdump/pct.conf' to 'backupuser@pbs@10.X.X.X:mp0' as pct.conf.blob

122: 2020-08-24 01:01:33 INFO: Upload directory '/mnt/vzsnap0' to 'backupuser@pbs@10.X.X.X:mp0' as root.pxar.didx

122: 2020-08-24 01:04:41 INFO: root.pxar: had to upload 625.99 MiB of 51.94 GiB in 188.10s, avgerage speed 3.33 MiB/s).

122: 2020-08-24 01:04:41 INFO: root.pxar: backup was done incrementally, reused 51.32 GiB (98.8%)

122: 2020-08-24 01:04:41 INFO: Uploaded backup catalog (7.39 MiB)

122: 2020-08-24 01:04:41 INFO: Duration: PT188.217461646S

122: 2020-08-24 01:04:41 INFO: End Time: 2020-08-24T01:04:41+02:00

122: 2020-08-24 01:04:42 INFO: remove vzdump snapshot

122: 2020-08-24 01:04:43 INFO: Finished Backup of VM 122 (00:03:11)

AFTER:

Code:

122: 2020-08-25 01:01:35 INFO: Starting Backup of VM 122 (lxc)

122: 2020-08-25 01:01:35 INFO: status = running

122: 2020-08-25 01:01:35 INFO: CT Name: nextcloud

122: 2020-08-25 01:01:35 INFO: including mount point rootfs ('/') in backup

122: 2020-08-25 01:01:35 INFO: including mount point mp0 ('/mnt/mp0') in backup

122: 2020-08-25 01:01:35 INFO: backup mode: snapshot

122: 2020-08-25 01:01:35 INFO: ionice priority: 7

122: 2020-08-25 01:01:35 INFO: suspend vm to make snapshot

122: 2020-08-25 01:01:35 INFO: create storage snapshot 'vzdump'

122: 2020-08-25 01:01:36 INFO: resume vm

122: 2020-08-25 01:01:36 INFO: guest is online again after 1 seconds

122: 2020-08-25 01:01:36 INFO: creating Proxmox Backup Server archive 'ct/122/2020-08-24T23:01:35Z'

122: 2020-08-25 01:01:36 INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=none pct.conf:/var/tmp/vzdumptmp23570/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --include-dev /mnt/vzsnap0/./mnt/mp0 --skip-lost-and-found --backup-type ct --backup-id 122 --backup-time 1598310095 --repository backupuser@pbs@10.X.X.X:mp0

122: 2020-08-25 01:01:36 INFO: Starting backup: ct/122/2020-08-24T23:01:35Z

122: 2020-08-25 01:01:36 INFO: Client name: pve1

122: 2020-08-25 01:01:36 INFO: Starting protocol: 2020-08-25T01:01:36+02:00

122: 2020-08-25 01:01:36 INFO: Upload config file '/var/tmp/vzdumptmp23570/etc/vzdump/pct.conf' to 'backupuser@pbs@10.X.X.X:mp0' as pct.conf.blob

122: 2020-08-25 01:01:36 INFO: Upload directory '/mnt/vzsnap0' to 'backupuser@pbs@10.X.X.X:mp0' as root.pxar.didx

122: 2020-08-25 01:08:34 INFO: root.pxar: had to upload 26.56 GiB of 51.96 GiB in 417.92s, average speed 65.09 MiB/s).

122: 2020-08-25 01:08:34 INFO: root.pxar: backup was done incrementally, reused 25.40 GiB (48.9%)

122: 2020-08-25 01:08:34 INFO: Uploaded backup catalog (7.39 MiB)

122: 2020-08-25 01:08:34 INFO: Duration: PT418.097534313S

122: 2020-08-25 01:08:34 INFO: End Time: 2020-08-25T01:08:34+02:00

122: 2020-08-25 01:08:35 INFO: remove vzdump snapshot

122: 2020-08-25 01:08:36 INFO: Finished Backup of VM 122 (00:07:01)

Regards

CanisLupus