Hi all,

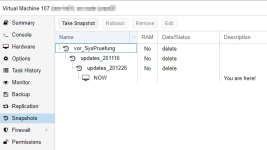

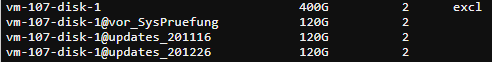

We tried snapshots on a Windows Server 2008 R2 VM so that we could test converting its disks from IDE to VirtIO before doing it "for real".

Snapshots worked fine.

The disk conversion from IDE to VirtIO also went OK.

Two questions:

- We tried to remove the older snapshots, but it failed because the old IDE disks are not found. I know why: they are now VirtIO disks! But I don't need those old snapshots anymore. Any constructive help?

- Now that all my disks are VirtIO, I would like to consolidate the current state of this VM so that it becomes the new and only reference, with no need for snapshots or rollback. This doesn't seem to be possible, does it?

Thanks,

Christophe.