I have a 4-node cluster with two disks per node.

When I have to shut down or restart a node for maintenance or an upgrade, the PGs become degraded.

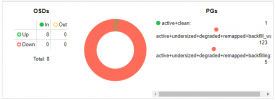

Initially, the degraded PGs are exactly half of the total; in the image, the rebuild had already been running for a few minutes.

The problem is that while there are red PGs, the virtual machines become completely unresponsive and, if the situation goes on for too long, they crash.
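The "exactly half" figure matches a simple count. This is a minimal sketch, assuming every PG keeps two replicas on two distinct hosts (as the `osd_pool_default_size = 2` setting and the host-level CRUSH rule in this post suggest) and that all host pairs are equally likely because the host weights are equal. The function name `fraction_touching` is made up for illustration.

```python
from itertools import combinations

# Host names taken from the CRUSH map in this post.
hosts = ["atlantico", "pacifico", "indiano", "artico"]
replicas = 2  # assumption: pool size 2 (osd_pool_default_size = 2)

def fraction_touching(down_host, hosts, replicas):
    """Fraction of equally likely host sets that include down_host."""
    placements = list(combinations(hosts, replicas))
    affected = [p for p in placements if down_host in p]
    return len(affected) / len(placements)

print(fraction_touching("atlantico", hosts, replicas))  # 0.5
```

Under these assumptions, 3 of the 6 possible host pairs contain any given host, so about half of the PGs lose a replica when one node goes down.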


Ceph configuration:

```
[global]
auth_client_required = cephx
auth_cluster_required = cephx
auth_service_required = cephx
cluster_network = 172.25.2.20/25
fsid = df623737-f7cc-4aeb-92fd-fec2c2b7ad51
mon_allow_pool_delete = true
mon_host = 172.25.2.20 172.25.2.10 172.25.2.30
ms_bind_ipv4 = true
ms_bind_ipv6 = false
osd_pool_default_min_size = 2
osd_pool_default_size = 2
public_network = 172.25.2.20/25

[client]
keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.atlantico]
public_addr = 172.25.2.20

[mon.indiano]
public_addr = 172.25.2.30

[mon.pacifico]
public_addr = 172.25.2.10
```

Ceph Crush Map:

```
# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54

# devices
device 0 osd.0 class NVMeCluster
device 1 osd.1 class NVMeCluster
device 2 osd.2 class NVMeCluster
device 3 osd.3 class NVMeCluster
device 4 osd.4 class NVMeCluster
device 5 osd.5 class NVMeCluster
device 6 osd.6 class NVMeCluster
device 7 osd.7 class NVMeCluster

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 zone
type 10 region
type 11 root

# buckets
host atlantico {
    id -3 # do not change unnecessarily
    id -4 class NVMeCluster # do not change unnecessarily
    # weight 13.973
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 6.986
    item osd.1 weight 6.986
}
host pacifico {
    id -5 # do not change unnecessarily
    id -6 class NVMeCluster # do not change unnecessarily
    # weight 13.973
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 6.986
    item osd.3 weight 6.986
}
host indiano {
    id -7 # do not change unnecessarily
    id -8 class NVMeCluster # do not change unnecessarily
    # weight 13.973
    alg straw2
    hash 0 # rjenkins1
    item osd.4 weight 6.986
    item osd.5 weight 6.986
}
host artico {
    id -9 # do not change unnecessarily
    id -10 class NVMeCluster # do not change unnecessarily
    # weight 13.973
    alg straw2
    hash 0 # rjenkins1
    item osd.6 weight 6.986
    item osd.7 weight 6.986
}
root default {
    id -1 # do not change unnecessarily
    id -2 class NVMeCluster # do not change unnecessarily
    # weight 55.890
    alg straw2
    hash 0 # rjenkins1
    item atlantico weight 13.973
    item pacifico weight 13.973
    item indiano weight 13.973
    item artico weight 13.973
}

# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
rule miniserver_NVMeCluster {
    id 1
    type replicated
    min_size 1
    max_size 10
    step take default class NVMeCluster
    step chooseleaf firstn 0 type host
    step emit
}
# end crush map
```
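To relate the settings above to what I am seeing, here is a simplified model of how replica count and `min_size` interact. It is only a sketch: it assumes the pools actually use the defaults shown (`size = 2`, `min_size = 2`) and it reduces Ceph's peering logic to a single comparison. The function name `pg_serves_io` is hypothetical.

```python
def pg_serves_io(size, min_size, replicas_down):
    """Simplified model: a PG accepts I/O only while the number of
    available replicas is at least min_size."""
    available = size - replicas_down
    return available >= min_size

# Values from the configuration above: size = 2, min_size = 2.
print(pg_serves_io(size=2, min_size=2, replicas_down=0))  # True
print(pg_serves_io(size=2, min_size=2, replicas_down=1))  # False

# Hypothetical comparison, not my current settings: size = 3, min_size = 2.
print(pg_serves_io(size=3, min_size=2, replicas_down=1))  # True
```

In this model, with `size = 2` and `min_size = 2`, any PG that loses one replica drops below `min_size`, which would be consistent with the VMs hanging while a node is down.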

How can I make sure that, during maintenance or upgrades, the PGs are only remapped (yellow) so that the virtual machines stay responsive?
