Good evening,

I have a three node test cluster running with PVE 8.4.1.

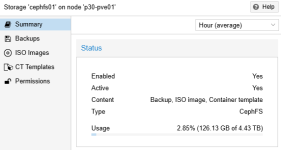

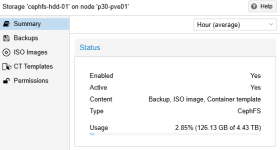

CEPH is already running and set up with 5 SSDs per node. Now, I want to use HDDs in each host for "cold storage", ISOs, and non-critical VMs, and I have created:

a. a second CEPH pool (ceph-pool-hdd-01) for the VMs

b. a second CEPHFS pool (cephfs-hdd-01) for ISOs, etc.

a. is working so far. I can move VMs to it, and the correct pool is selected.

b. The two CEPHFS pools (HDD/SSD) are currently sharing the same storage, each file is being stored in both pools, which is not what I want.

Here’s what I did:

1. Integrated SSDs and HDDs with the correct device class

2. Created CephFS for SSDs (cephfs01) and for HDDs (cephfs-hdd-01):

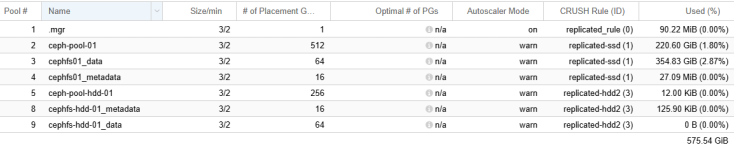

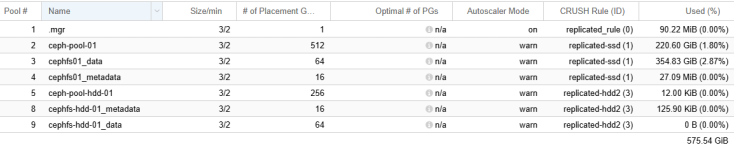

3. Created two new CRUSH rules and assigned them to the corresponding pools:

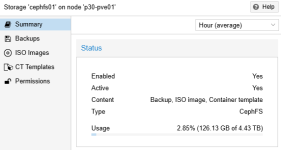

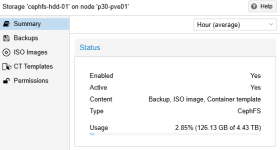

The size of both CephFS storages is always identical:

test with DD:

What am I doing wrong? How can I properly separate the CephFS storage as well?

Thanks in advance for your support.

Kind regards

Jan-Eric

I have a three-node test cluster running PVE 8.4.1.

| Property | Value |
| --- | --- |
| CPU(s), pve-01 & pve-02 | 56 x Intel(R) Xeon(R) Gold 6132 CPU @ 2.60GHz (2 Sockets) |
| CPU(s), pve-03 | 28 x Intel(R) Xeon(R) Gold 6132 CPU @ 2.60GHz (1 Socket) |
| Kernel Version | Linux 6.8.12-10-pve (2025-04-18T07:39Z) |
| Boot Mode | EFI |
| RAM | 512 |

Ceph is already running and set up with 5 SSDs per node. Now I want to use the HDDs in each host for "cold storage", ISOs, and non-critical VMs, so I have created:

a. a second Ceph pool (ceph-pool-hdd-01) for the VMs

b. a second CephFS (cephfs-hdd-01) for ISOs, etc.

(a) works so far: I can move VMs to it, and the correct pool is selected.

(b) does not: the two CephFS storages (HDD/SSD) currently share the same storage. Each file is stored in both, which is not what I want.

Here’s what I did:

1. Integrated SSDs and HDDs with the correct device class

```bash
root@p30-pve01:~# ceph osd tree
ID   CLASS  WEIGHT    TYPE NAME           STATUS  REWEIGHT  PRI-AFF
 -1         27.65454  root default
 -3          9.82465      host p30-pve01
 15    hdd   1.81940          osd.15          up   1.00000  1.00000
 16    hdd   1.81940          osd.16          up   1.00000  1.00000
 17    hdd   1.81940          osd.17          up   1.00000  1.00000
  0    ssd   0.87329          osd.0           up   1.00000  1.00000
  1    ssd   0.87329          osd.1           up   1.00000  1.00000
  2    ssd   0.87329          osd.2           up   1.00000  1.00000
  3    ssd   0.87329          osd.3           up   1.00000  1.00000
  4    ssd   0.87329          osd.4           up   1.00000  1.00000
 -5          9.82465      host p30-pve02
 18    hdd   1.81940          osd.18          up   1.00000  1.00000
 19    hdd   1.81940          osd.19          up   1.00000  1.00000
 20    hdd   1.81940          osd.20          up   1.00000  1.00000
  5    ssd   0.87329          osd.5           up   1.00000  1.00000
  6    ssd   0.87329          osd.6           up   1.00000  1.00000
  7    ssd   0.87329          osd.7           up   1.00000  1.00000
  8    ssd   0.87329          osd.8           up   1.00000  1.00000
  9    ssd   0.87329          osd.9           up   1.00000  1.00000
 -7          8.00525      host p30-pve03
 21    hdd   1.81940          osd.21          up   1.00000  1.00000
 22    hdd   1.81940          osd.22          up   1.00000  1.00000
 10    ssd   0.87329          osd.10          up   1.00000  1.00000
 11    ssd   0.87329          osd.11          up   1.00000  1.00000
 12    ssd   0.87329          osd.12          up   1.00000  1.00000
 13    ssd   0.87329          osd.13          up   1.00000  1.00000
 14    ssd   0.87329          osd.14          up   1.00000  1.00000
```

2. Created CephFS for SSDs (cephfs01) and for HDDs (cephfs-hdd-01):

```bash
root@p30-pve01:~# ceph fs ls
name: cephfs01, metadata pool: cephfs01_metadata, data pools: [cephfs01_data ]
name: cephfs-hdd-01, metadata pool: cephfs-hdd-01_metadata, data pools: [cephfs-hdd-01_data ]
```

3. Created two new CRUSH rules and assigned them to the corresponding pools:

```bash
root@p30-pve01:~# ceph osd crush rule dump replicated-ssd
{
    "rule_id": 1,
    "rule_name": "replicated-ssd",
    "type": 1,
    "steps": [
        {
            "op": "take",
            "item": -2,
            "item_name": "default~ssd"
        },
        {
            "op": "chooseleaf_firstn",
            "num": 0,
            "type": "host"
        },
        {
            "op": "emit"
        }
    ]
}
root@p30-pve01:~# ceph osd crush rule dump replicated-hdd2
{
    "rule_id": 3,
    "rule_name": "replicated-hdd2",
    "type": 1,
    "steps": [
        {
            "op": "take",
            "item": -12,
            "item_name": "default~hdd"
        },
        {
            "op": "chooseleaf_firstn",
            "num": 0,
            "type": "host"
        },
        {
            "op": "emit"
        }
    ]
}
```
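The rule dumps show that the rules exist, but not which pool uses which rule. A minimal sketch for checking the assignment follows. It assumes the usual `ceph osd pool ls detail` output, where each pool line starts with `pool` and contains `crush_rule <id>`. The helper name `pool_rules` is made up for illustration and was not part of the original test. With the rule IDs shown above, the SSD pools would be expected to report rule 1 and the HDD pools rule 3.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): reads the text output of
# `ceph osd pool ls detail` on stdin and prints "<pool name> <crush rule id>"
# for every line that describes a pool.
pool_rules() {
  awk '$1 == "pool" {
    name = $3
    gsub(/[^A-Za-z0-9_.-]/, "", name)   # drop the quotes around the pool name
    for (i = 4; i < NF; i++)
      if ($i == "crush_rule") print name, $(i + 1)
  }'
}

# Only query the cluster when the ceph CLI is actually available.
if command -v ceph >/dev/null 2>&1; then
  ceph osd pool ls detail | pool_rules
fi
```

Parsing the plain-text listing keeps the check to one command for all pools. Querying a single pool with `ceph osd pool get <pool> crush_rule` would be an alternative.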

The size of both CephFS storages is always identical:

Test with dd:

```bash
root@p30-pve01:~# dd if=/dev/zero of=/mnt/cephfs01/testfile-ssd bs=1M count=100
root@p30-pve01:~# ls -l /mnt/pve/cephfs01/
total 112640000
drwxr-xr-x 2 root root 0 May 14 15:44 dump
drwxr-xr-x 4 root root 2 May 14 15:44 template
-rw-r--r-- 1 root root 104857600000 May 22 07:59 testfile-hdd
-rw-r--r-- 1 root root 10485760000 May 22 07:29 testfile-ssd
root@p30-pve01:~# ls -l /mnt/pve/cephfs-hdd-01/
total 112640000
drwxr-xr-x 2 root root 0 May 14 15:44 dump
drwxr-xr-x 4 root root 2 May 14 15:44 template
-rw-r--r-- 1 root root 104857600000 May 22 07:59 testfile-hdd
-rw-r--r-- 1 root root 10485760000 May 22 07:29 testfile-ssd
root@p30-pve01:~# rm /mnt/pve/cephfs-hdd-01/testfile-ssd
root@p30-pve01:~# ls -l /mnt/pve/cephfs-hdd-01/
total 102400000
drwxr-xr-x 2 root root 0 May 14 15:44 dump
drwxr-xr-x 4 root root 2 May 14 15:44 template
-rw-r--r-- 1 root root 104857600000 May 22 07:59 testfile-hdd
root@p30-pve01:~# ls -l /mnt/pve/cephfs01/
total 102400000
drwxr-xr-x 2 root root 0 May 14 15:44 dump
drwxr-xr-x 4 root root 2 May 14 15:44 template
-rw-r--r-- 1 root root 104857600000 May 22 07:59 testfile-hdd
```

What am I doing wrong? How can I properly separate the CephFS storage as well?

Thanks in advance for your support.

Kind regards

Jan-Eric