Hi guys,

I'm currently testing Ceph in Proxmox. I've followed the documentation and configured Ceph.

I have 3 identical nodes, configured as follows:

CPU: 16 x Intel Xeon Bronze @ 1.90GHz (2 Sockets)

RAM: 32 GB DDR4 2133 MHz

Boot/Proxmox Disk: Patriot Burst SSD 240GB

Disks: 3x HGST 10TB SAS HDD

NIC1: 1 GbE, used for Corosync

NIC2: 2x 10GbE bonded with LACP, used for Ceph traffic

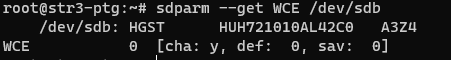
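To compare the links above with the bandwidth figures later in this post, it helps to convert line rates into bytes per second. `rados bench` computes its "MB/sec" as bytes / 1024 / 1024: the 4M run works out to 939 × 4194304 bytes / 30.3825 s / 1024² ≈ 123.6. fio prints both MiB/s and MB/s.

The script below is a minimal sketch under two assumptions. It uses the raw line rate and ignores Ethernet/IP/TCP overhead. The `links` labels are just illustrative names for the NICs listed above.

```python
# Convert nominal Ethernet line rates into MiB/s, the unit rados bench
# actually uses for its "MB/sec" figures (bytes / 1024 / 1024).
# Assumption: raw line rate only; protocol overhead is ignored.

MIB = 1024 * 1024


def gbit_to_mib_per_s(gbit_per_s: float) -> float:
    """Nominal link rate in MiB/s for a link of gbit_per_s Gbit/s."""
    bytes_per_s = gbit_per_s * 1e9 / 8
    return bytes_per_s / MIB


# Hypothetical labels for the NICs in the hardware list above.
links = {
    "NIC1 (1 GbE, Corosync)": 1,
    "NIC2, single 10GbE member link": 10,
    "NIC2, 2x10GbE LACP aggregate": 20,
}

for name, rate in links.items():
    print(f"{name}: ~{gbit_to_mib_per_s(rate):.0f} MiB/s")
```

With LACP, a single TCP connection is normally hashed onto one member link. One flow therefore tops out around the single-10GbE figure rather than the aggregate.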

Before that i test my disk one by one using FIO with this command

This is the result (the result is similar to each disk on server)

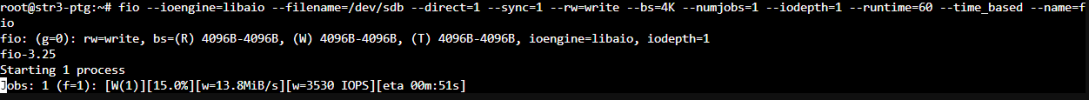

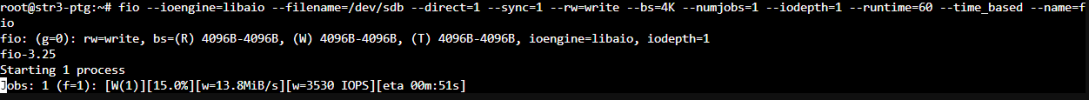

For 4K Block Size

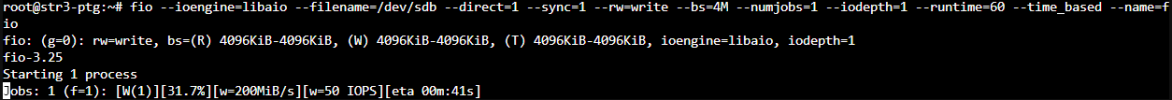

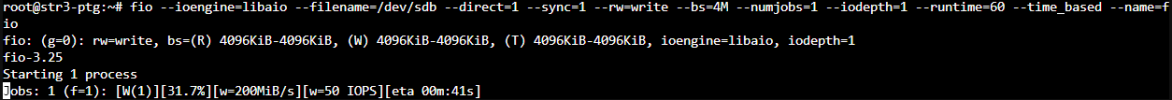

For 4M Block Size

After that i set up the ceph and set OSD with 1 disk on each server, but the speed is decreasing

My question is, Is it really the best OSD speed that i can get with my current configuration ?

I'm currently testing ceph in proxmox. I've followed the documentation and configured the ceph

I have 3 identical nodes and configured as follows:

CPU: 16 x Intel Xeon Bronze @ 1.90GHz (2 Sockets)

RAM: 32 GB DDR4 2133Mhz

Boot/Proxmox Disk: Patriot Burst SSD 240GB

Disk: 3x HGST 10TB HDD SAS

NIC1: 1 GbE used for Corosync

NIC2: 2x10GbE bonded with LACP for Ceph Traffic

Before that i test my disk one by one using FIO with this command

fio --ioengine=libaio --filename=/dev/sdx --direct=1 --sync=1 --rw=write --bs=4K --numjobs=1 --iodepth=1 --runtime=60 --time_based --name=fioThis is the result (the result is similar to each disk on server)

For 4K Block Size

For 4M Block Size

After that i set up the ceph and set OSD with 1 disk on each server, but the speed is decreasing

`rados -p test bench 30 write`

```
Total time run: 30.3825
Total writes made: 939
Write size: 4194304
Object size: 4194304
Bandwidth (MB/sec): 123.624
Stddev Bandwidth: 11.7765
Max bandwidth (MB/sec): 148
Min bandwidth (MB/sec): 100
Average IOPS: 30
Stddev IOPS: 2.94412
Max IOPS: 37
Min IOPS: 25
Average Latency(s): 0.514558
Stddev Latency(s): 0.255017
Max latency(s): 1.72565
Min latency(s): 0.124276
```

`rados -p test bench 30 write -b 4K -t 1`

```
Total time run: 30.0195
Total writes made: 3146
Write size: 4096
Object size: 4096
Bandwidth (MB/sec): 0.40937
Stddev Bandwidth: 0.0648556
Max bandwidth (MB/sec): 0.53125
Min bandwidth (MB/sec): 0.238281
Average IOPS: 104
Stddev IOPS: 16.603
Max IOPS: 136
Min IOPS: 61
Average Latency(s): 0.0095316
Stddev Latency(s): 0.00568832
Max latency(s): 0.047987
Min latency(s): 0.00263956
```

My question is: is this really the best OSD speed I can get with my current configuration?
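As a sanity check, the two `rados bench` summaries above can be recomputed from their raw counters. They can also be compared against Little's law: average ops in flight = IOPS × average latency.

The script below is a minimal sketch built on two assumptions about concurrency:

- **First run:** 16 ops in flight, rados bench's default when `-t` is not given.
- **Second run:** 1 op in flight, from the explicit `-t 1`.

The `summarize` helper is a hypothetical name, not part of any Ceph tool.

```python
# Recompute rados bench summary figures from the raw counters in the post
# and compare the reported average latency with Little's law:
#   in_flight = IOPS * latency  ->  latency = in_flight / IOPS
# Assumption: 16 concurrent ops for the first run (rados bench default),
# 1 for the second run (-t 1).

MIB = 1024 * 1024  # rados bench "MB/sec" is really MiB/s


def summarize(writes, op_size, seconds, in_flight):
    """Return (IOPS, bandwidth in MiB/s, latency predicted by Little's law)."""
    iops = writes / seconds
    bandwidth_mib = writes * op_size / seconds / MIB
    predicted_latency = in_flight / iops
    return iops, bandwidth_mib, predicted_latency


# (writes, op size in bytes, seconds, assumed ops in flight, reported avg latency)
runs = {
    "4M writes, default -t 16": (939, 4194304, 30.3825, 16, 0.514558),
    "4K writes, -t 1": (3146, 4096, 30.0195, 1, 0.0095316),
}

for name, (writes, size, secs, threads, reported) in runs.items():
    iops, bw, lat = summarize(writes, size, secs, threads)
    print(f"{name}: {iops:.1f} IOPS, {bw:.3f} MiB/s, "
          f"predicted latency {lat:.4f} s (reported {reported} s)")
```

Under these assumptions, both runs match their reported average latency within about 1%.

- **4K `-t 1` run:** this run is purely latency-bound. With one write in flight, IOPS is simply 1 / latency, so about 104 IOPS corresponds to roughly 9.5 ms per write as seen by the client.
- **4M run:** 16 writes are in flight at once, so a ~0.5 s average latency works out to about 31 writes per second, or ~124 MiB/s.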
