I'm setting up Ceph on a three-node cluster; all three nodes are fresh hardware with fresh installs of PVE. I get the same error on all three nodes when I try to create an OSD, through either the GUI or the CLI.

create OSD on /dev/sdc (bluestore)

wiping block device /dev/sdc

200+0 records in

200+0 records out

209715200 bytes (210 MB, 200 MiB) copied, 0.52456 s, 400 MB/s

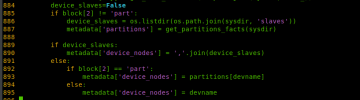

--> UnboundLocalError: cannot access local variable 'device_slaves' where it is not associated with a value

TASK ERROR: command 'ceph-volume lvm create --cluster-fsid 4691d91a-6fd9-42b1-bab9-5f9042b21925 --crush-device-class ssd --data /dev/sdc' failed: exit code 1
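For context on the traceback: ceph-volume is written in Python, and `UnboundLocalError` is a generic Python error rather than a Ceph-specific one. It means a function read a local variable on a code path where that variable was never assigned. The sketch below is a hypothetical illustration of that pattern only. The function names and the `slaves/` directory lookup are assumptions chosen for the example, not ceph-volume's actual source.

```python
# Hypothetical illustration only: this is NOT ceph-volume's code. It shows how
# Python raises UnboundLocalError when a local variable is assigned on only
# some code paths and then read on a path where it was never set.
import os


def list_slaves(sys_block_dir: str) -> list:
    slaves_dir = os.path.join(sys_block_dir, "slaves")
    if os.path.isdir(slaves_dir):
        device_slaves = os.listdir(slaves_dir)
    # If slaves/ is missing, 'device_slaves' was never assigned, so this line
    # raises UnboundLocalError instead of returning an empty list.
    return device_slaves


def list_slaves_fixed(sys_block_dir: str) -> list:
    device_slaves = []  # default covers the "no slaves/ directory" path
    slaves_dir = os.path.join(sys_block_dir, "slaves")
    if os.path.isdir(slaves_dir):
        device_slaves = os.listdir(slaves_dir)
    return device_slaves
```

Calling `list_slaves()` on a directory without a `slaves/` subdirectory raises the error. On Python 3.11 and later the message reads "cannot access local variable ... where it is not associated with a value", matching the wording in the log; older versions say "referenced before assignment" instead.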

I can access all four drives on each node, but even after multiple reinstalls and fresh starts I'm still unable to create the OSDs. I saw a previous post from 11/13/23 that was marked solved by playing musical drives; however, on my cluster all three nodes fail. Any help would be appreciated. I could not find any other results for Proxmox or Ceph with this error.
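Since the variable in the error is named `device_slaves`, one hedged way to compare the drives is to look at the `holders/` and `slaves/` entries Linux exposes for each block device under `/sys/block/<dev>/`. The sketch below collects those entries. The function names are hypothetical, and any link between these sysfs entries and the ceph-volume failure is an assumption, not something confirmed here.

```python
# Hedged diagnostic sketch: for each block device under a sysfs root, report
# the entries in its holders/ and slaves/ directories (None if absent).
# Assumption: the standard Linux sysfs layout /sys/block/<dev>/{holders,slaves}.
from __future__ import annotations

import os


def _entries(path: str) -> list[str] | None:
    """Return sorted entries of a sysfs subdirectory, or None if it is absent."""
    if not os.path.isdir(path):
        return None
    return sorted(os.listdir(path))


def describe_block_devices(sys_block: str = "/sys/block") -> dict:
    """Map each device name to its holders/ and slaves/ entries."""
    report = {}
    for dev in sorted(os.listdir(sys_block)):
        dev_dir = os.path.join(sys_block, dev)
        report[dev] = {
            "holders": _entries(os.path.join(dev_dir, "holders")),
            "slaves": _entries(os.path.join(dev_dir, "slaves")),
        }
    return report
```

Running `describe_block_devices()` on each node and comparing the entry for `sdc` against a drive that behaves differently might show whether leftover device-mapper or LVM holders are present. `lsblk` gives a similar view from the shell.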

// ceph.conf

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.10.1.10/24

fsid = 4691d91a-6fd9-42b1-bab9-5f9042b21925

mon_allow_pool_delete = true

mon_host = 10.10.1.10

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.10.1.10/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.node1]

public_addr = 10.10.1.10
