Dear community,

The HDD pool on our 3-node Ceph cluster was quite slow, so we recreated the OSDs with block.db on NVMe drives (enterprise-grade Samsung PM983/PM9A3).

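The Ceph documentation recommends sizing block.db at roughly 1% to 4% of the 'block' size:

> It is generally recommended that the size of block.db be somewhere between 1% and 4% of the size of block. For RGW workloads, it is recommended that the block.db be at least 4% of the block size, because RGW makes heavy use of block.db to store metadata (in particular, omap keys). For example, if the block size is 1TB, then block.db should have a size of at least 40GB. For RBD workloads, however, block.db usually needs no more than 1% to 2% of the blocksize.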

On our OSDs, block.db is either 3.43% or around 6% of the block size, depending on when the OSD was created (we changed how we divide the NVMe space among the HDDs over time).

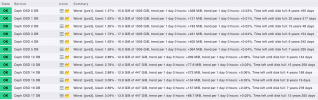

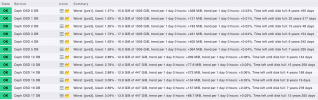
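For reference, here is a minimal sketch of the arithmetic behind these percentages. The function names are hypothetical, and the only numbers used are the 1TB/40GB example from the Ceph documentation, not our actual device sizes.

```python
# Sketch of the block.db sizing arithmetic (hypothetical helper names).
# Decimal units are assumed, matching the "1TB -> 40GB" documentation example.

GB = 10**9
TB = 10**12


def db_percent_of_block(db_bytes: int, block_bytes: int) -> float:
    """Return the block.db size as a percentage of the block (HDD) size."""
    if block_bytes <= 0:
        raise ValueError("block_bytes must be positive")
    return 100.0 * db_bytes / block_bytes


def db_bytes_for_percent(block_bytes: int, percent: float) -> int:
    """Return the block.db size in bytes for a target percentage of block."""
    if percent < 0:
        raise ValueError("percent must not be negative")
    return round(block_bytes * percent / 100)


if __name__ == "__main__":
    # Documentation example: a 1TB block device with a 40GB block.db.
    print(db_percent_of_block(40 * GB, 1 * TB))
    print(db_bytes_for_percent(1 * TB, 4) // GB, "GB")
```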

We've been running this setup for a few months, but our monitoring still shows only 1.5% to 3.1% usage of the block.db 'device' (i.e. the LVM volume on NVMe):

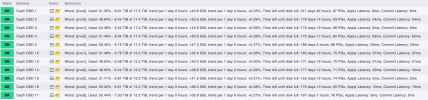

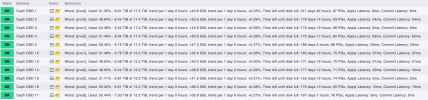
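To cross-check the monitoring figures, the usage can also be derived per OSD from the BlueFS counters. The sketch below assumes the JSON produced by `ceph daemon osd.<id> perf dump`, which contains a `bluefs` section with `db_total_bytes`, `db_used_bytes` and `slow_used_bytes`. The sample values are made up for illustration and are not taken from our cluster.

```python
import json


def bluefs_db_usage_percent(perf_dump: dict) -> float:
    """Return the used share of block.db in percent from an OSD perf dump."""
    bluefs = perf_dump["bluefs"]
    total = bluefs["db_total_bytes"]
    if total == 0:
        raise ValueError("OSD reports no dedicated block.db device")
    return 100.0 * bluefs["db_used_bytes"] / total


def has_spillover(perf_dump: dict) -> bool:
    """Return True if BlueFS data has spilled over to the slow (HDD) device."""
    return perf_dump["bluefs"].get("slow_used_bytes", 0) > 0


if __name__ == "__main__":
    # Made-up sample: 200 GB block.db with 5 GB used and no spillover.
    sample = json.loads(
        '{"bluefs": {"db_total_bytes": 200000000000,'
        ' "db_used_bytes": 5000000000, "slow_used_bytes": 0}}'
    )
    print(f"{bluefs_db_usage_percent(sample):.2f}% used")
    print("spillover:", has_spillover(sample))
```

A non-zero `slow_used_bytes` would mean that BlueFS has started placing DB data on the HDD, which is the case that a larger block.db is meant to avoid.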

The HDDs, by comparison, are about 50% full.

Is that to be expected? I would have thought that DB usage should be a lot higher across all NVMe devices.

Thanks in advance!
