I do agree that the Ceph storage utilisation display in the web UI should be reworked, herewith an example of a cluster with a critical problem that the client simply wasn't aware of:

I understand that Storage here is simply the sum of all available storage on each node. Perhaps CephFS mounts could be excluded and the information from Ceph included instead, or only the Ceph figures used when Ceph is configured.

I would rather see a storage utilisation dial for each OSD type (e.g. hdd / ssd / nvme) that goes red at 75%. This could simply come from 'ceph df', as in:

Code:

CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
hdd    11 TiB   2.7 TiB  8.2 TiB  8.2 TiB   74.87
ssd    2.3 TiB  2.0 TiB  306 GiB  306 GiB   13.21
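
To illustrate the per-class dial idea, here is a minimal Python sketch that reads the plain-text layout shown above and applies the 75% mark. The names `class_usage` and `dial_colour` are my own for illustration and are not part of PVE or Ceph; the 75% threshold is the one suggested in this post, not a Ceph default. A real implementation would probably be better off with Ceph's machine-readable output, which I have not assumed here.

```python
# Sample copied from the 'ceph df' output in this post.
RAW_STORAGE = """\
CLASS SIZE AVAIL USED RAW USED %RAW USED
hdd 11 TiB 2.7 TiB 8.2 TiB 8.2 TiB 74.87
ssd 2.3 TiB 2.0 TiB 306 GiB 306 GiB 13.21
"""

RED_AT = 75.0  # threshold proposed in this post (assumption, not a Ceph default)


def class_usage(text):
    """Return {device_class: %RAW USED} parsed from the plain-text table."""
    usage = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines, section banners, the header row and the TOTAL row.
        if not fields or fields[0] in ("CLASS", "TOTAL", "---"):
            continue
        # The percentage is always the last column.
        usage[fields[0]] = float(fields[-1])
    return usage


def dial_colour(percent, red_at=RED_AT):
    """Hypothetical dial colouring: red at or above the threshold."""
    return "red" if percent >= red_at else "green"


for dev_class, pct in class_usage(RAW_STORAGE).items():
    print(f"{dev_class}: {pct:.2f}% -> {dial_colour(pct)}")
```

With the figures above, hdd sits at 74.87%, just under the mark, so a per-class dial alone would still be green. That is one reason I also suggest per-pool dials.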

Perhaps a smaller row of dials for each pool? Better yet, allow a regex string (e.g. rbd.*) to be provided via the datacenter options list to select which pools are shown.

Code:

POOL                   ID  PGS  STORED   OBJECTS  USED     %USED  MAX AVAIL
device_health_metrics  1   1    74 MiB   24       222 MiB  0.01   615 GiB
cephfs_data            2   16   29 GiB   7.40k    86 GiB   3.79   726 GiB
cephfs_metadata        3   16   1.4 MiB  23       5.7 MiB  0      726 GiB
rbd_hdd                4   256  2.7 TiB  722.83k  8.1 TiB  79.14  726 GiB
rbd_hdd_cache          5   64   101 GiB  26.49k   304 GiB  14.13  615 GiB
rbd_ssd                6   64   0 B      0        0 B      0      615 GiB

Pool 'rbd_hdd' is at 79.14%, which is already past the 75% mark and should have raised a red flag.
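
The regex idea for pools could look roughly like the sketch below. It filters the pool table from this post by a pattern such as rbd.* and lists the pools at or above 75%. The function name `pool_usage` is hypothetical, and I am only parsing the plain-text columns shown above, not any PVE or Ceph API.

```python
import re

# Pool table copied from the 'ceph df' output in this post.
POOLS = """\
POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL
device_health_metrics 1 1 74 MiB 24 222 MiB 0.01 615 GiB
cephfs_data 2 16 29 GiB 7.40k 86 GiB 3.79 726 GiB
cephfs_metadata 3 16 1.4 MiB 23 5.7 MiB 0 726 GiB
rbd_hdd 4 256 2.7 TiB 722.83k 8.1 TiB 79.14 726 GiB
rbd_hdd_cache 5 64 101 GiB 26.49k 304 GiB 14.13 615 GiB
rbd_ssd 6 64 0 B 0 0 B 0 615 GiB
"""


def pool_usage(text, pattern):
    """Return {pool: %USED} for pools whose full name matches the regex."""
    matcher = re.compile(pattern)
    selected = {}
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0] == "POOL":
            continue  # skip blank lines and the header row
        name = fields[0]
        if matcher.fullmatch(name):
            # Each row ends with "<%USED> <MAX AVAIL value> <unit>",
            # so %USED is the third field from the end.
            selected[name] = float(fields[-3])
    return selected


rbd_pools = pool_usage(POOLS, r"rbd.*")
flagged = [name for name, pct in rbd_pools.items() if pct >= 75.0]
print(rbd_pools)
print("red:", flagged)
```

Using the pattern rbd.* here leaves out the CephFS and device health pools, and only 'rbd_hdd' ends up in the red list.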

NB: Remember that there can be over 5% space variance between OSDs, even with the upmap balancer. By default Ceph also raises near-full warnings at 85% and blocks further writes at 95%.
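
To show why I would put the red mark at 75% rather than wait for Ceph's own 85% warning, here is a small sketch that adds the OSD variance on top of an average utilisation. I am treating the 5% variance as five percentage points, which is my own simplification, and the function names are hypothetical.

```python
NEARFULL = 85.0  # default near-full warning level mentioned above
FULL = 95.0      # default level at which further writes are blocked
VARIANCE = 5.0   # assumed per-OSD spread, in percentage points


def fullest_osd_estimate(average_pct, variance=VARIANCE):
    """Rough guess at the fullest OSD given an average utilisation."""
    return min(100.0, average_pct + variance)


def status(average_pct, red_at=75.0):
    """Classify an average utilisation, allowing for OSD variance."""
    worst = fullest_osd_estimate(average_pct)
    if worst >= FULL:
        return "full"
    if worst >= NEARFULL:
        return "nearfull"
    if average_pct >= red_at:
        return "red"
    return "ok"


for pct in (74.87, 79.14, 80.0, 90.0):
    print(f"{pct:.2f}% average -> {status(pct)}")
```

Under these assumptions an average of 80% already means the fullest OSD may be at the near-full level, so a dial that only turns red at 85% leaves very little room to react.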

Clicking through the 3 shared storage containers:

PVE config file (/etc/pve/storage.cfg):

Code:

[root@kvm1a ~]# cat /etc/pve/storage.cfg
dir: local
        disable
        path /var/lib/vz
        content backup
        prune-backups keep-all=1
        shared 0

cephfs: shared
        path /mnt/pve/cephfs
        content snippets,vztmpl,iso

rbd: rbd_hdd
        content images,rootdir
        krbd 1
        pool rbd_hdd

rbd: rbd_ssd
        content rootdir,images
        krbd 1
        pool rbd_ssd

[root@kvm1a ~]# df -h
Filesystem                          Size  Used  Avail  Use%  Mounted on
udev                                32G   0     32G    0%    /dev
tmpfs                               6.3G  1.9M  6.3G   1%    /run
/dev/md0                            59G   8.6G  48G    16%   /
tmpfs                               32G   63M   32G    1%    /dev/shm
tmpfs                               5.0M  0     5.0M   0%    /run/lock
/dev/fuse                           128M  36K   128M   1%    /etc/pve
/dev/sdb4                           94M   5.5M  89M    6%    /var/lib/ceph/osd/ceph-111
/dev/sda4                           94M   5.5M  89M    6%    /var/lib/ceph/osd/ceph-110
/dev/sdf1                           97M   5.5M  92M    6%    /var/lib/ceph/osd/ceph-13
/dev/sdd1                           97M   5.5M  92M    6%    /var/lib/ceph/osd/ceph-11
/dev/sde1                           97M   5.5M  92M    6%    /var/lib/ceph/osd/ceph-12
/dev/sdc1                           97M   5.5M  92M    6%    /var/lib/ceph/osd/ceph-10
10.254.1.2,10.254.1.3,10.254.1.4:/  756G  29G   727G   4%    /mnt/pve/cephfs
tmpfs                               6.3G  0     6.3G   0%    /run/user/0

Warnings here would be very useful:

First warning for me:

Ceph CLI:

NB: Pool 'rbd_hdd' is at 79.14% utilisation with only 726 GiB of available storage remaining.

Code:

[root@kvm1a ~]# ceph df
--- RAW STORAGE ---
CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
hdd    11 TiB   2.7 TiB  8.2 TiB  8.2 TiB   74.87
ssd    2.3 TiB  2.0 TiB  306 GiB  306 GiB   13.21
TOTAL  13 TiB   4.7 TiB  8.5 TiB  8.5 TiB   64.29

--- POOLS ---
POOL                   ID  PGS  STORED   OBJECTS  USED     %USED  MAX AVAIL
device_health_metrics  1   1    74 MiB   24       222 MiB  0.01   615 GiB
cephfs_data            2   16   29 GiB   7.40k    86 GiB   3.79   726 GiB
cephfs_metadata        3   16   1.4 MiB  23       5.7 MiB  0      726 GiB
rbd_hdd                4   256  2.7 TiB  722.83k  8.1 TiB  79.14  726 GiB
rbd_hdd_cache          5   64   101 GiB  26.49k   304 GiB  14.13  615 GiB
rbd_ssd                6   64   0 B      0        0 B      0      615 GiB

Code:

[root@kvm1a ~]# ceph df detail
--- RAW STORAGE ---
CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
hdd    11 TiB   2.7 TiB  8.2 TiB  8.2 TiB   74.87
ssd    2.3 TiB  2.0 TiB  306 GiB  306 GiB   13.21
TOTAL  13 TiB   4.7 TiB  8.5 TiB  8.5 TiB   64.29

--- POOLS ---
POOL                   ID  PGS  STORED   (DATA)   (OMAP)   OBJECTS  USED     (DATA)   (OMAP)   %USED  MAX AVAIL  QUOTA OBJECTS  QUOTA BYTES  DIRTY   USED COMPR  UNDER COMPR
device_health_metrics  1   1    74 MiB   0 B      74 MiB   24       222 MiB  0 B      222 MiB  0.01   615 GiB    N/A            N/A          N/A     0 B         0 B
cephfs_data            2   16   29 GiB   29 GiB   0 B      7.40k    86 GiB   86 GiB   0 B      3.79   726 GiB    N/A            N/A          N/A     0 B         0 B
cephfs_metadata        3   16   1.4 MiB  1.3 MiB  8.8 KiB  23       5.7 MiB  5.6 MiB  26 KiB   0      726 GiB    N/A            N/A          N/A     0 B         0 B
rbd_hdd                4   256  2.7 TiB  2.7 TiB  1.3 KiB  722.83k  8.1 TiB  8.1 TiB  4.0 KiB  79.14  726 GiB    N/A            N/A          N/A     0 B         0 B
rbd_hdd_cache          5   64   101 GiB  101 GiB  965 KiB  26.49k   304 GiB  304 GiB  2.8 MiB  14.13  615 GiB    N/A            N/A          13.12k  0 B         0 B
rbd_ssd                6   64   0 B      0 B      0 B      0        0 B      0 B      0 B      0      615 GiB    N/A            N/A          N/A     0 B         0 B

Code:

[root@kvm1a ~]# ceph osd df
ID   CLASS  WEIGHT   REWEIGHT  SIZE     RAW USE  DATA     OMAP     META     AVAIL    %USE   VAR   PGS  STATUS
10   hdd    0.90959  1.00000   931 GiB  695 GiB  693 GiB  7 KiB    1.3 GiB  237 GiB  74.58  1.16  71   up
11   hdd    0.90959  1.00000   931 GiB  696 GiB  694 GiB  0 B      1.4 GiB  236 GiB  74.70  1.16  72   up
12   hdd    0.90959  1.00000   931 GiB  697 GiB  695 GiB  0 B      1.4 GiB  235 GiB  74.78  1.16  69   up
13   hdd    0.90959  1.00000   931 GiB  703 GiB  702 GiB  3 KiB    1.4 GiB  228 GiB  75.51  1.17  76   up
110  ssd    0.37700  1.00000   386 GiB  43 GiB   43 GiB   2.8 MiB  275 MiB  343 GiB  11.14  0.17  58   up
111  ssd    0.37700  1.00000   386 GiB  59 GiB   59 GiB   75 MiB   440 MiB  327 GiB  15.34  0.24  71   up
20   hdd    1.81929  1.00000   1.8 TiB  1.4 TiB  1.4 TiB  3 KiB    2.3 GiB  468 GiB  74.87  1.16  144  up
21   hdd    1.81929  1.00000   1.8 TiB  1.4 TiB  1.4 TiB  7 KiB    2.3 GiB  468 GiB  74.86  1.16  144  up
120  ssd    0.37700  1.00000   386 GiB  43 GiB   43 GiB   0 B      387 MiB  343 GiB  11.21  0.17  63   up
121  ssd    0.37700  1.00000   386 GiB  59 GiB   59 GiB   75 MiB   390 MiB  327 GiB  15.28  0.24  66   up
30   hdd    1.81929  1.00000   1.8 TiB  1.4 TiB  1.4 TiB  7 KiB    2.3 GiB  467 GiB  74.95  1.17  148  up
31   hdd    1.81929  1.00000   1.8 TiB  1.4 TiB  1.4 TiB  3 KiB    2.2 GiB  470 GiB  74.77  1.16  140  up
130  ssd    0.37700  1.00000   386 GiB  49 GiB   49 GiB   73 MiB   208 MiB  337 GiB  12.81  0.20  64   up
131  ssd    0.37700  1.00000   386 GiB  53 GiB   52 GiB   0 B      331 MiB  333 GiB  13.61  0.21  65   up
                     TOTAL     13 TiB   8.5 TiB  8.5 TiB  225 MiB  17 GiB   4.7 TiB  64.29
MIN/MAX VAR: 0.17/1.17  STDDEV: 34.39
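
The TOTAL row above mixes hdd and ssd, which is why 64.29% looks harmless. As a rough illustration, the sketch below groups the %USE column from this 'ceph osd df' output by device class and reports the minimum, maximum and mean for each. The values are copied from the listing above; the helper name `per_class_stats` is my own.

```python
from statistics import mean

# (OSD id, device class, %USE) copied from the 'ceph osd df' output above.
OSD_USE = [
    (10, "hdd", 74.58), (11, "hdd", 74.70), (12, "hdd", 74.78), (13, "hdd", 75.51),
    (110, "ssd", 11.14), (111, "ssd", 15.34),
    (20, "hdd", 74.87), (21, "hdd", 74.86),
    (120, "ssd", 11.21), (121, "ssd", 15.28),
    (30, "hdd", 74.95), (31, "hdd", 74.77),
    (130, "ssd", 12.81), (131, "ssd", 13.61),
]


def per_class_stats(rows):
    """Group %USE by device class and return min / max / mean per class."""
    by_class = {}
    for _osd_id, dev_class, pct in rows:
        by_class.setdefault(dev_class, []).append(pct)
    return {
        dev_class: {"min": min(vals), "max": max(vals), "mean": mean(vals)}
        for dev_class, vals in by_class.items()
    }


for dev_class, s in per_class_stats(OSD_USE).items():
    print(f"{dev_class}: min {s['min']:.2f}%  max {s['max']:.2f}%  mean {s['mean']:.2f}%")
```

Split this way, every hdd OSD is within about a point of 75% while the ssd OSDs are all below 16%, which the combined total hides.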

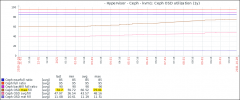

For storage management it's not necessarily just the utilisation but the pace of change that's important. Herewith a sample graph following the average, maximum and minimum utilisations of individual OSDs over time:
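
As a sketch of what tracking the pace of change could give you, the snippet below does a simple linear projection from two utilisation samples to estimate how many days remain before a threshold is reached. The sample values are made up purely for illustration and are not measurements from this cluster; `days_until` is a hypothetical helper, and real growth is rarely this linear.

```python
def days_until(threshold_pct, samples):
    """Estimate days until threshold_pct is reached.

    samples is a list of (day, percent_used) tuples ordered by day.
    Uses a straight line through the first and last sample.
    Returns None when utilisation is flat or shrinking.
    """
    (d0, p0), (d1, p1) = samples[0], samples[-1]
    rate = (p1 - p0) / (d1 - d0)  # percentage points per day
    if rate <= 0:
        return None
    return max(0.0, (threshold_pct - p1) / rate)


# Hypothetical samples: 70% on day 0 and 75% on day 10.
example = [(0, 70.0), (10, 75.0)]
print("days to 85%:", days_until(85.0, example))
print("days to 95%:", days_until(95.0, example))
```

A dial that also showed something like "days to near-full" would have made the risk far more obvious than a single percentage.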

Herewith a misleading representation of the total OSD storage space, irrespective of type or pool constraints:

The client decided to deploy a virtual NVR, misled by the space reporting in the UI...

PS: Some additional monitoring metrics that can help identify bottlenecks: