Having used Proxmox for some time, I'm rebuilding one of my clusters and I would like to make sure I get the PGs correct, as they still confuse me.

Question 1

In my situation I have five nodes. Each node has 5-6 500GB OSDs installed.

I used the PG calculator, which gave me a PG value of 1024. However, I'm conscious that in my case I have 5-6 OSDs per node.

Should I take this into account?
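For reference, the classic rule of thumb behind the PG calculator is total PGs ≈ (number of OSDs × target PGs per OSD) / replica size, rounded up to the next power of two, with a target of about 100 PGs per OSD. It works on the total OSD count across the cluster, not on OSDs per node. The sketch below assumes that simplified rule (single pool holding all the data, target of 100); the function names are illustrative and not part of any Ceph or Proxmox tool.

```python
def next_power_of_two(n: float) -> int:
    """Return the smallest power of two that is >= n."""
    p = 1
    while p < n:
        p *= 2
    return p


def suggested_pg_count(total_osds: int, replica_size: int,
                       target_pgs_per_osd: int = 100) -> int:
    """Simplified PG-calculator rule (assumption: one pool holds 100% of the data)."""
    raw = total_osds * target_pgs_per_osd / replica_size
    return next_power_of_two(raw)


# 5 nodes x 5-6 OSDs each = 25-30 OSDs in total, with 3 replicas
for osds in (25, 30):
    print(osds, suggested_pg_count(osds, replica_size=3))  # both print 1024
```

With 25 OSDs the raw value is about 833 and with 30 it is 1000; both round up to 1024, which matches the calculator's answer.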

Question 2

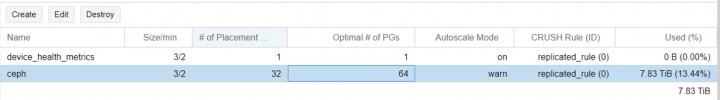

I created a pool with the default 3/2 replication and a PG count of 1024.

I then created five MDSs and a CephFS with 128 PGs.

Is this correct? It complained when I tried to increase it to 1024 PGs.
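One likely source of such a complaint is Ceph's per-OSD PG limit (`mon_max_pg_per_osd`): every pool's PGs, multiplied by its replica size, are spread over the same OSDs, so a second large pool adds to the load of the first. The sketch below estimates the average number of PG replicas per OSD. It assumes 25 OSDs and simplifies the CephFS pools into a single pool; the pool list and function name are hypothetical, and the actual limit depends on your Ceph release, so compare against the value your cluster reports (for example via `ceph config get mon mon_max_pg_per_osd`).

```python
def pg_replicas_per_osd(pools, total_osds):
    """Average PG replicas per OSD.

    pools: list of (pg_num, replica_size) tuples (hypothetical pool layout).
    """
    total = sum(pg_num * size for pg_num, size in pools)
    return total / total_osds


OSDS = 25  # assumption: lower end of 5 nodes x 5-6 OSDs
current = [(1024, 3), (128, 3)]    # RBD pool + CephFS at 128 PGs
proposed = [(1024, 3), (1024, 3)]  # RBD pool + CephFS raised to 1024 PGs

print(pg_replicas_per_osd(current, OSDS))   # 138.24
print(pg_replicas_per_osd(proposed, OSDS))  # 245.76
```

Raising the CephFS pool to 1024 PGs nearly doubles the per-OSD count, which pushes it close to (or past, depending on the release default and the metadata pool) the configured limit.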
