I have set up a three-node Ceph cluster with 8 OSDs. My goal is to tolerate one node failure without losing access to the data. To test this, I put a couple of ISOs and VM backups (80 GB in total) on CephFS, waited until the cluster reached a steady state, and then shut down one node gracefully. All my nodes are running the latest Ceph and PVE versions, and I have kept the default CRUSH map. Unfortunately, this consistently results in "reduced data availability":

When I restart the node, my pool goes back to healthy. What can I do to make sure the cluster actually remains available when a node is lost? Can anyone point me to a way to diagnose why the data doesn't get distributed correctly (IMO there should be 3 copies, one on each node)?
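To make the "3 copies, one on each node" expectation concrete, here is a simplified Python model (not Ceph code) of when a placement group (PG) keeps serving I/O after one node goes down. It assumes Ceph's rule that a PG stays active only while at least `min_size` of its replicas are up; the node names, the OSD-to-host layout, and the helpers `pg_is_active` and `one_replica_per_host` are hypothetical and not taken from the cluster above.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical OSD-to-host layout for a 3-node, 8-OSD cluster (illustrative only).
OSD_HOST: Dict[int, str] = {
    0: "node1", 1: "node1", 2: "node1",
    3: "node2", 4: "node2", 5: "node2",
    6: "node3", 7: "node3",
}


@dataclass(frozen=True)
class Pool:
    size: int      # number of replicas kept for each PG
    min_size: int  # replicas that must be up for the PG to accept I/O


def pg_is_active(acting: List[int], down_host: str, pool: Pool) -> bool:
    """Model: a PG stays active while at least min_size of its replicas are up."""
    surviving = [osd for osd in acting if OSD_HOST[osd] != down_host]
    return len(surviving) >= pool.min_size


def one_replica_per_host(acting: List[int]) -> bool:
    """True if no two replicas share a host (what a host failure domain enforces)."""
    hosts = [OSD_HOST[osd] for osd in acting]
    return len(hosts) == len(set(hosts))


if __name__ == "__main__":
    replicated_3_2 = Pool(size=3, min_size=2)
    replicated_2_2 = Pool(size=2, min_size=2)

    # One copy per node: losing node1 leaves 2 of 3 replicas, so the PG stays active.
    print(pg_is_active([0, 3, 6], "node1", replicated_3_2))  # True
    # Only 2 copies with min_size 2: losing either node blocks I/O.
    print(pg_is_active([0, 3], "node1", replicated_2_2))  # False
    # Two of three copies on node1: losing node1 leaves only 1 replica.
    print(one_replica_per_host([0, 1, 6]), pg_is_active([0, 1, 6], "node1", replicated_3_2))  # False False
```

In this model, a pool with size 3 / min_size 2 and one replica per host should end up degraded but still active after a node shutdown, while a size 2 / min_size 2 pool, or a placement with two replicas on the same host, goes inactive. The real size and min_size of each pool are listed by `ceph osd pool ls detail`.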

The only irregular thing I can think of is that my OSDs are slightly imbalanced because I added two spare hard drives. However, when I set those OSDs out one at a time and wait for a steady state each time, the problem remains. Furthermore, all the data together is smaller than the smallest OSD.

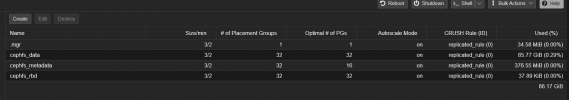

For reference, here are my pools:

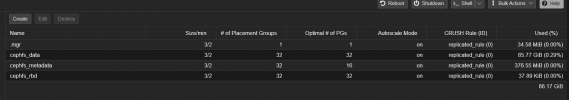

Update: here is the state of my pool with all OSDs up/in:
