Hi PVE geeks,

I build a pve cluster on three server (with ceph), with pve & ceph package version as follow:

Ceph works fine when using public and cluster network with tcp/ip, and the performance is not as expected (3x10 Samsung 960GB SATA SSD).

My ceph.conf with tcp/ip network:

There are 4 Mellanox adapters (Mellanox Technologies MT27710 Family [ConnectX-4 Lx]) installed in each server, and I installed the MLNX_OFED driver from NVIDIA (MLNX_OFED_LINUX-5.8-3.0.7.0-debian11.3-x86_64).

As following:

I'v tested the rdma network with the following tools, all seems to work fine:

- ibping

- ucmatose

- rping

- ibv_rc_pingpong

- ibv_srq_pingpong

- ibv_xsrq_pingpong

- rdma_xserver/rdma_xclient

- rdma_server/rdma_client

Also, `hca_self_test.ofed` , `ibdev2netdev`, `ibstat`, `ibv_devices`.... commands shows all works fine:

So, I tried to enable rdma for ceph according to the tips of [BRING UP CEPH RDMA - DEVELOPER'S GUIDE]https://enterprise-support.nvidia.com/s/article/bring-up-ceph-rdma---developer-s-guide).

This is my new-added options in ceph.conf - [global] section:

And, referring to step 11 of the above blog, I modified the ceph service file (ceph-mgr@.service, ceph-mon@.service, ceph-osd@.service, ceph-mds@.service).

File `/etc/security/limits.conf` is updated too.

I understand that by default /etc/ceph/ceph.conf is a link to /etc/pve/ceph.conf (from pvefs) for cluster configuration file synchronization.

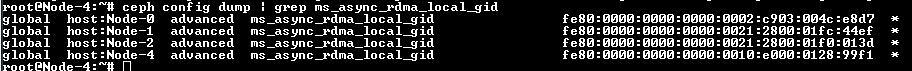

In order to use roce v2, I deleted the link and placed /etc/ceph/ceph.conf separately for each server, the only difference is that the `ms_async_rdma_local_gid` in `ceph.conf` is obtained from the `show_gids` command of each server itself.

Here is a full ceph.conf file on server 1 (node01):

When I start `

Start ceph.target:

After a few seconds...

Can anyone help to solve it?

Can anyone help to solve it?

Can anyone help to solve it?

I was wondering, has anyone successfully enabled RDMA with ceph in PVE?

PS:

I have truncated all ceph logs before starting ceph with rdma, so, the attached file is a complete ceph startup log with rdma option configured in ceph.conf.[/CODE]

I built a PVE cluster on three servers (with Ceph). The PVE and Ceph package versions are as follows:

```
root@node01:~# pveversion
pve-manager/7.3-3/c3928077 (running kernel: 5.15.74-1-pve)
root@node01:~# ceph --version
ceph version 16.2.13 (b81a1d7f978c8d41cf452da7af14e190542d2ee2) pacific (stable)
root@node01:~# dpkg -l |grep ceph
ii ceph 16.2.13-pve1 amd64 distributed storage and file system
ii ceph-base 16.2.13-pve1 amd64 common ceph daemon libraries and management tools
ii ceph-common 16.2.13-pve1 amd64 common utilities to mount and interact with a ceph storage cluster
ii ceph-fuse 16.2.13-pve1 amd64 FUSE-based client for the Ceph distributed file system
ii ceph-mds 16.2.13-pve1 amd64 metadata server for the ceph distributed file system
ii ceph-mgr 16.2.13-pve1 amd64 manager for the ceph distributed storage system
ii ceph-mgr-modules-core 16.2.13-pve1 all ceph manager modules which are always enabled
ii ceph-mon 16.2.13-pve1 amd64 monitor server for the ceph storage system
ii ceph-osd 16.2.13-pve1 amd64 OSD server for the ceph storage system
...
```

Ceph works fine when both the public and the cluster network use TCP/IP, but the performance is not as expected (3x10 Samsung 960GB SATA SSDs).

```
root@node01:~# ceph -s
  cluster:
    id: 87d52299-0504-4c6f-8882-3bc27b85cc53
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum node01,node02,node03 (age 22s)
    mgr: node02(active, since 20s), standbys: node01, node03
    osd: 30 osds: 30 up (since 14s), 30 in (since 37m)

  data:
    pools: 2 pools, 129 pgs
    objects: 42 objects, 19 B
    usage: 2.6 TiB used, 26 TiB / 29 TiB avail
    pgs: 129 active+clean
```

My ceph.conf with the TCP/IP network:

```
root@node01:~# cat /etc/ceph/ceph.conf
[global]
auth_client_required = cephx
auth_cluster_required = cephx
auth_service_required = cephx
cluster_network = 192.168.2.1/24
fsid = 87d52299-0504-4c6f-8882-3bc27b85cc53
mon_allow_pool_delete = true
mon_host = 192.168.1.1 192.168.1.2 192.168.1.3
ms_bind_ipv4 = true
ms_bind_ipv6 = false
osd_pool_default_min_size = 2
osd_pool_default_size = 3
public_network = 192.168.1.1/24
```

There are four Mellanox adapters (Mellanox Technologies MT27710 Family [ConnectX-4 Lx]) installed in each server, and I installed NVIDIA's MLNX_OFED driver (MLNX_OFED_LINUX-5.8-3.0.7.0-debian11.3-x86_64). The installed packages are:

```
root@node01:~# dpkg -l |grep " mlnx"
ii knem-dkms 1.1.4.90mlnx2-OFED.23.07.0.2.2.1 all DKMS support for mlnx-ofed kernel modules
ii mlnx-ethtool 5.18-1.58307 amd64 This utility allows querying and changing settings such as speed,
ii mlnx-iproute2 5.19.0-1.58307 amd64 This utility allows querying and changing settings such as speed,
ii mlnx-ofed-all 5.8-3.0.7.0 all MLNX_OFED all installer package (with DKMS support)
ii mlnx-ofed-kernel-dkms 5.8-OFED.5.8.3.0.7.1 all DKMS support for mlnx-ofed kernel modules
ii mlnx-ofed-kernel-utils 5.8-OFED.5.8.3.0.7.1 amd64 Userspace tools to restart and tune mlnx-ofed kernel modules
ii mlnx-tools 5.8.0-1.lts.58307 amd64 Userspace tools to restart and tune MLNX_OFED kernel modules
```

I've tested the RDMA network with the following tools, and they all seem to work fine:

- ibping

- ucmatose

- rping

- ibv_rc_pingpong

- ibv_srq_pingpong

- ibv_xsrq_pingpong

- rdma_xserver/rdma_xclient

- rdma_server/rdma_client
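These tools exercise the verbs layer, but the RoCE v2 options used later (`ms_async_rdma_gid_idx`, `ms_async_rdma_local_gid`) come from the port's GID table, which, as far as I know, is what `show_gids` reads from sysfs. Below is a minimal Python sketch of that lookup, assuming the usual kernel layout `/sys/class/infiniband/<dev>/ports/<port>/gids/<idx>` plus `.../gid_attrs/types/<idx>` (please verify the paths on your own nodes). `find_roce_v2_gid_index` is a hypothetical helper, not part of MLNX_OFED.

```python
import os
import sys
from typing import Optional

# Assumed sysfs layout (verify on your own nodes):
#   <root>/<dev>/ports/<port>/gids/<idx>             GID as 8 groups of 4 hex digits
#   <root>/<dev>/ports/<port>/gid_attrs/types/<idx>  "IB/RoCE v1" or "RoCE v2"
SYSFS_IB_ROOT = "/sys/class/infiniband"


def _read(path: str) -> Optional[str]:
    """Return stripped file contents, or None if the entry cannot be read."""
    try:
        with open(path) as f:
            return f.read().strip()
    except OSError:
        # Some entries (e.g. unused table slots) may not be readable.
        return None


def find_roce_v2_gid_index(dev: str, gid: str, port: int = 1,
                           root: str = SYSFS_IB_ROOT) -> Optional[int]:
    """Return the GID table index holding `gid` with type RoCE v2, else None."""
    port_dir = os.path.join(root, dev, "ports", str(port))
    gids_dir = os.path.join(port_dir, "gids")
    wanted = gid.lower()
    # Entries are named by their numeric index, so sort numerically.
    for name in sorted(os.listdir(gids_dir), key=int):
        if (_read(os.path.join(gids_dir, name)) or "").lower() != wanted:
            continue
        if _read(os.path.join(port_dir, "gid_attrs", "types", name)) == "RoCE v2":
            return int(name)
    return None


if __name__ == "__main__":
    # Usage: find_gid.py mlx5_6 0000:0000:0000:0000:0000:ffff:c0a8:0201
    print(find_roce_v2_gid_index(sys.argv[1], sys.argv[2]))
```

On node01, the result for `mlx5_6` and the configured local GID could be compared with `ms_async_rdma_gid_idx = 3`; a `None` would suggest the GID is not a RoCE v2 entry on that device and port.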

Also, the `hca_self_test.ofed`, `ibdev2netdev`, `ibstat`, `ibv_devices`, ... commands all report that everything works fine:

```
root@node01:/opt# hca_self_test.ofed
---- Performing Adapter Device Self Test ----
Number of CAs Detected ................. 8
PCI Device Check ....................... PASS
Kernel Arch ............................ x86_64
Host Driver Version .................... MLNX_OFED_LINUX-5.8-3.0.7.0 (OFED-5.8-3.0.7): 5.15.74-1-pve
Host Driver RPM Check .................. PASS
Firmware on CA #0 NIC .................. v14.32.1010
Firmware on CA #1 NIC .................. v14.32.1010
Firmware on CA #2 NIC .................. v14.32.1010
Firmware on CA #3 NIC .................. v14.32.1010
Firmware on CA #4 NIC .................. v14.32.1010
Firmware on CA #5 NIC .................. v14.32.1010
Firmware on CA #6 NIC .................. v14.32.1010
Firmware on CA #7 NIC .................. v14.32.1010
Host Driver Initialization ............. PASS
Number of CA Ports Active .............. 3
Port State of Port #1 on CA #0 (NIC)..... DOWN (Ethernet)
Port State of Port #1 on CA #1 (NIC)..... UP 1X EDR (Ethernet)
Port State of Port #1 on CA #2 (NIC)..... DOWN (Ethernet)
Port State of Port #1 on CA #3 (NIC)..... DOWN (Ethernet)
Port State of Port #1 on CA #4 (NIC)..... UP 1X EDR (Ethernet)
Port State of Port #1 on CA #5 (NIC)..... DOWN (Ethernet)
Port State of Port #1 on CA #6 (NIC)..... UP 1X EDR (Ethernet)
Port State of Port #1 on CA #7 (NIC)..... DOWN (Ethernet)
Error Counter Check on CA #0 (NIC)...... PASS
Error Counter Check on CA #1 (NIC)...... PASS
Error Counter Check on CA #2 (NIC)...... PASS
Error Counter Check on CA #3 (NIC)...... PASS
Error Counter Check on CA #4 (NIC)...... PASS
Error Counter Check on CA #5 (NIC)...... PASS
Error Counter Check on CA #6 (NIC)...... PASS
Error Counter Check on CA #7 (NIC)...... PASS
Kernel Syslog Check .................... PASS
Node GUID on CA #0 (NIC) ............... 30:c6:d7:00:00:8f:fa:01
Node GUID on CA #1 (NIC) ............... 30:c6:d7:00:00:8f:fa:02
Node GUID on CA #2 (NIC) ............... 30:c6:d7:00:00:8f:f9:9d
Node GUID on CA #3 (NIC) ............... 30:c6:d7:00:00:8f:f9:9e
Node GUID on CA #4 (NIC) ............... 30:c6:d7:00:00:8f:fa:ff
Node GUID on CA #5 (NIC) ............... 30:c6:d7:00:00:8f:fb:00
Node GUID on CA #6 (NIC) ............... 30:c6:d7:00:00:8f:f9:b3
Node GUID on CA #7 (NIC) ............... 30:c6:d7:00:00:8f:f9:b4
------------------ DONE ---------------------
root@node01:/opt# ibdev2netdev
mlx5_0 port 1 ==> ens1f0np0 (Down)
mlx5_1 port 1 ==> ens1f1np1 (Up)
mlx5_2 port 1 ==> ens2f0np0 (Down)
mlx5_3 port 1 ==> ens2f1np1 (Down)
mlx5_4 port 1 ==> ens4f0np0 (Up)
mlx5_5 port 1 ==> ens4f1np1 (Down)
mlx5_6 port 1 ==> ens5f0np0 (Up)
mlx5_7 port 1 ==> ens5f1np1 (Down)
```

So I tried to enable RDMA for Ceph following [BRING UP CEPH RDMA - DEVELOPER'S GUIDE](https://enterprise-support.nvidia.com/s/article/bring-up-ceph-rdma---developer-s-guide).
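The guide's options pair an RDMA device with a network interface (here `mlx5_6` and `ens5f0np0`), and that pairing can be read straight off the `ibdev2netdev` output above. A minimal Python sketch, assuming the `<dev> port <n> ==> <netdev> (<state>)` line format shown there; `parse_ibdev2netdev` and `RdmaPort` are hypothetical names:

```python
import re
from typing import Dict, NamedTuple

# Matches lines such as "mlx5_6 port 1 ==> ens5f0np0 (Up)".
LINE_RE = re.compile(r"^(\S+) port (\d+) ==> (\S+) \((\w+)\)$")


class RdmaPort(NamedTuple):
    device: str  # RDMA device name, e.g. "mlx5_6"
    port: int    # port number on that device
    state: str   # link state as printed by ibdev2netdev ("Up"/"Down")


def parse_ibdev2netdev(output: str) -> Dict[str, RdmaPort]:
    """Map each netdev name to its RDMA device, port and link state."""
    mapping: Dict[str, RdmaPort] = {}
    for line in output.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            dev, port, netdev, state = m.groups()
            mapping[netdev] = RdmaPort(dev, int(port), state)
    return mapping


if __name__ == "__main__":
    sample = """\
mlx5_1 port 1 ==> ens1f1np1 (Up)
mlx5_4 port 1 ==> ens4f0np0 (Up)
mlx5_6 port 1 ==> ens5f0np0 (Up)
mlx5_7 port 1 ==> ens5f1np1 (Down)
"""
    info = parse_ibdev2netdev(sample)["ens5f0np0"]
    print(info.device, info.port, info.state)  # prints: mlx5_6 1 Up
```

Feeding it the live output on each node makes it easy to confirm that the device named in ceph.conf is the one behind the interface carrying the cluster network, and that its link is Up.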

These are the options I added to the `[global]` section of ceph.conf:

```
#Enable ceph with RDMA:
ms_async_op_threads = 8 #default 3
# ms_type = async
ms_public_type = async+posix #keep frontend with posix
ms_cluster_type = async+rdma #for setting backend only to RDMA
ms_async_rdma_type = rdma #default ib
ms_async_rdma_device_name = mlx5_6
ms_async_rdma_cluster_device_name = ens5f0np0
ms_async_rdma_roce_ver = 2
ms_async_rdma_gid_idx = 3
ms_async_rdma_local_gid = 0000:0000:0000:0000:0000:ffff:c0a8:0201
```

Following step 11 of that guide, I also modified the Ceph systemd unit files (ceph-mgr@.service, ceph-mon@.service, ceph-osd@.service, ceph-mds@.service).

I updated `/etc/security/limits.conf` as well.

I understand that by default /etc/ceph/ceph.conf is a link to /etc/pve/ceph.conf (from pvefs) for cluster configuration file synchronization.
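Which file a given tool actually reads is worth confirming on every node. Note that the `rbd` process in the second `ps -ef` listing below is started with `-c /etc/pve/ceph.conf`, so PVE's own tooling may keep reading the shared file regardless of the local copy. A minimal Python sketch (hypothetical helper `describe_conf`) that reports whether a path is a symlink or a node-local file:

```python
import os


def describe_conf(path: str = "/etc/ceph/ceph.conf") -> str:
    """Say whether `path` is a symlink (and to what) or a node-local file."""
    if os.path.islink(path):
        target = os.readlink(path)
        return f"symlink -> {target} (resolves to {os.path.realpath(path)})"
    if os.path.isfile(path):
        return "regular file (node-local copy)"
    return "missing"


if __name__ == "__main__":
    for p in ("/etc/ceph/ceph.conf", "/etc/pve/ceph.conf"):
        print(f"{p}: {describe_conf(p)}")
```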

To use RoCE v2, I removed the symlink and put a separate /etc/ceph/ceph.conf on each server. The only difference between the files is `ms_async_rdma_local_gid`, which I took from the output of `show_gids` on each server.
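node01's value `0000:0000:0000:0000:0000:ffff:c0a8:0201` is the IPv4-mapped form of 192.168.2.1 (`c0a8` = 192.168, `0201` = 2.1), an address inside `cluster_network = 192.168.2.1/24`. So each node's value can be derived from, and checked against, its own cluster IP. A minimal Python sketch using the standard `ipaddress` and `configparser` modules, assuming ceph.conf parses as INI with `#` inline comments and uses the underscore key spelling seen in this file; the function names are hypothetical:

```python
import configparser
import ipaddress


def ipv4_to_roce_gid(ip: str) -> str:
    """Return the IPv4-mapped GID (::ffff:a.b.c.d) as 8 groups of 4 hex digits."""
    value = (0xFFFF << 32) | int(ipaddress.IPv4Address(ip))
    hex_str = f"{value:032x}"
    return ":".join(hex_str[i:i + 4] for i in range(0, 32, 4))


def roce_gid_to_ipv4(gid: str) -> ipaddress.IPv4Address:
    """Inverse of ipv4_to_roce_gid; raise ValueError for non-mapped GIDs."""
    mapped = ipaddress.IPv6Address(gid).ipv4_mapped
    if mapped is None:
        raise ValueError(f"{gid} is not an IPv4-mapped GID")
    return mapped


def check_local_gid(conf_text: str) -> ipaddress.IPv4Address:
    """Check that ms_async_rdma_local_gid maps into cluster_network."""
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",),
                                       interpolation=None)  # keeps $cluster etc.
    parser.read_string(conf_text)
    g = parser["global"]
    addr = roce_gid_to_ipv4(g["ms_async_rdma_local_gid"])
    net = ipaddress.ip_network(g["cluster_network"], strict=False)
    if addr not in net:
        raise ValueError(f"local GID maps to {addr}, outside {net}")
    return addr


if __name__ == "__main__":
    with open("/etc/ceph/ceph.conf") as f:
        print("local GID maps to", check_local_gid(f.read()))
```

For example, `ipv4_to_roce_gid("192.168.2.1")` returns `0000:0000:0000:0000:0000:ffff:c0a8:0201`, matching node01's entry.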

Here is the full ceph.conf on server 1 (node01):

```
root@node01:~# cat /etc/ceph/ceph.conf
[global]
auth_client_required = cephx
auth_cluster_required = cephx
auth_service_required = cephx
cluster_network = 192.168.2.1/24
fsid = 87d52299-0504-4c6f-8882-3bc27b85cc53
mon_allow_pool_delete = true
mon_host = 192.168.1.1 192.168.1.2 192.168.1.3
ms_bind_ipv4 = true
ms_bind_ipv6 = false
osd_pool_default_min_size = 2
osd_pool_default_size = 3
public_network = 192.168.1.1/24

#Enable ceph with RDMA:
ms_async_op_threads = 8 #default 3
# ms_type = async
ms_public_type = async+posix #keep frontend with posix
ms_cluster_type = async+rdma #for setting backend only to RDMA
ms_async_rdma_type = rdma #default ib
ms_async_rdma_device_name = mlx5_6
ms_async_rdma_cluster_device_name = ens5f0np0
ms_async_rdma_roce_ver = 2
ms_async_rdma_gid_idx = 3
ms_async_rdma_local_gid = 0000:0000:0000:0000:0000:ffff:c0a8:0201 #This is the only difference.

[client]
keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.node01]
public_addr = 192.168.1.1

[mon.node02]
public_addr = 192.168.1.2

[mon.node03]
public_addr = 192.168.1.3
```

When I start `ceph.target` on all servers, `ceph -s` shows everything fine for the first few seconds. Then all PGs become unknown (100.000% pgs unknown), all ceph-osd processes crash, and the cluster health becomes HEALTH_WARN:

Right after starting ceph.target:

```
root@node01:~# ceph -s
  cluster:
    id: 87d52299-0504-4c6f-8882-3bc27b85cc53
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum node01,node02,node03 (age 19s)
    mgr: node02(active, since 16s), standbys: node01, node03
    osd: 30 osds: 30 up (since 58m), 30 in (since 95m)

  data:
    pools: 2 pools, 129 pgs
    objects: 42 objects, 19 B
    usage: 2.6 TiB used, 26 TiB / 29 TiB avail
    pgs: 129 active+clean

root@node01:~# ps -ef |grep ceph
ceph 414046 1 3 23:25 ? 00:00:00 /usr/bin/python3.9 /usr/bin/ceph-crash
ceph 414047 1 37 23:25 ? 00:00:00 /usr/bin/ceph-mgr -f --cluster ceph --id node03 --setuser ceph --setgroup ceph
ceph 414048 1 12 23:25 ? 00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id node03 --setuser ceph --setgroup ceph
ceph 414083 1 2 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 20 --setuser ceph --setgroup ceph
ceph 414094 1 3 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 21 --setuser ceph --setgroup ceph
ceph 414103 1 2 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 22 --setuser ceph --setgroup ceph
ceph 414106 1 2 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 23 --setuser ceph --setgroup ceph
ceph 414108 1 3 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 24 --setuser ceph --setgroup ceph
ceph 414109 1 2 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 25 --setuser ceph --setgroup ceph
ceph 414110 1 3 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 26 --setuser ceph --setgroup ceph
ceph 414113 1 3 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 27 --setuser ceph --setgroup ceph
ceph 414114 1 2 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 28 --setuser ceph --setgroup ceph
ceph 414115 1 2 23:25 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 29 --setuser ceph --setgroup ceph
root 414580 414456 0 23:25 ? 00:00:00 bash -c ps -ef |grep ceph
root 414584 414580 0 23:25 ? 00:00:00 grep ceph
```

After a few seconds:

```
root@node01:~# ceph -s
  cluster:
    id: 87d52299-0504-4c6f-8882-3bc27b85cc53
    health: HEALTH_WARN
            Reduced data availability: 129 pgs inactive

  services:
    mon: 3 daemons, quorum node01,node02,node03 (age 2m)
    mgr: node02(active, since 2m), standbys: node01, node03
    osd: 30 osds: 30 up (since 60m), 30 in (since 97m)

  data:
    pools: 2 pools, 129 pgs
    objects: 0 objects, 0 B
    usage: 0 B used, 0 B / 0 B avail
    pgs: 100.000% pgs unknown
         129 unknown

root@node01:~# ps -ef |grep ceph
root 460031 1 0 23:24 ? 00:00:01 /usr/bin/rbd -p ceph_pool01 -c /etc/pve/ceph.conf --auth_supported cephx -n client.admin --keyring /etc/pve/priv/ceph/ceph_pool01.keyring ls
ceph 460967 1 0 23:25 ? 00:00:02 /usr/bin/python3.9 /usr/bin/ceph-crash
ceph 460968 1 0 23:25 ? 00:00:02 /usr/bin/ceph-mgr -f --cluster ceph --id node01 --setuser ceph --setgroup ceph
ceph 460969 1 0 23:25 ? 00:00:02 /usr/bin/ceph-mon -f --cluster ceph --id node01 --setuser ceph --setgroup ceph
root 474811 314176 0 23:31 pts/0 00:00:00 grep ceph
```

Can anyone help me solve this?

I am also wondering: has anyone successfully enabled RDMA for Ceph in PVE?

PS:

I truncated all Ceph logs before starting Ceph with RDMA, so the attached file is the complete Ceph startup log with the RDMA options configured in ceph.conf.
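The two `ps -ef` listings above are what show the OSD processes disappearing. When repeating the test, a small Python sketch (hypothetical helper `running_daemons`) can diff two saved listings by daemon type and `--id`, which makes it easier to see which daemons died on each node before digging into the logs:

```python
import re
import sys
from typing import Set, Tuple

# Matches e.g. "/usr/bin/ceph-osd -f --cluster ceph --id 20 ..." in `ps -ef` lines.
DAEMON_RE = re.compile(r"/usr/bin/ceph-(osd|mon|mgr|mds)\s.*?--id (\S+)")


def running_daemons(ps_output: str) -> Set[Tuple[str, str]]:
    """Return {(daemon_type, id)} for Ceph daemons found in `ps -ef` output."""
    found = set()
    for line in ps_output.splitlines():
        m = DAEMON_RE.search(line)
        if m:
            found.add((m.group(1), m.group(2)))
    return found


if __name__ == "__main__":
    # Usage: diff_daemons.py before.txt after.txt (saved `ps -ef` output)
    with open(sys.argv[1]) as f:
        before = running_daemons(f.read())
    with open(sys.argv[2]) as f:
        after = running_daemons(f.read())
    for kind, ident in sorted(before - after):
        print(f"gone: ceph-{kind} --id {ident}")
```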
