Hello,

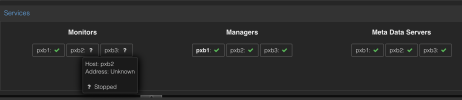

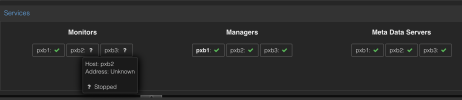

3 nodes, identical, new installation from the latest ISO. I upgraded all nodes and added the Ceph Reef sources, then installed Ceph on all 3 hosts individually. The cluster came online and was green initially (it is still green now), but the manager only runs, and shows green, on node 1. On nodes 2 and 3 it is in an unknown state and will not start. Checking on the node directly, it fails to start with:

```
Jul 18 18:15:08 pxb2 ceph-mgr[2526]: 2024-07-18T18:15:08.489+0200 72d5472006c0 -1 monclient(hunting): handle_auth_bad_met> monclient(hunting): handle_auth_bad_method server allowed_methods [2] but i only support [2]
Jul 18 18:15:08 pxb2 ceph-mgr[2526]: failed to fetch mon config (--no-mon-config to skip)
Jul 18 18:15:08 pxb2 systemd[1]: ceph-mgr@pxb2.service: Main process exited, code=exited, status=1/FAILURE
Subject: Unit process exited
```

I tried to manually set:

```
root@pxb2:~# ceph auth get-key client.admin --keyring=/etc/ceph/ceph.client.admin.keyring
```

That did not help much. Now the service starts, but it is still not recognised in the interface:

And if I check the log, I see this:

```
2024-07-18T18:12:59.025518+0200 mgr.pxb2 (mgr.14107) 1580 : cluster 0 pgmap v1581: 0 pgs: ; 0 B data, 0 B used, 0 B / 0 B avail
2024-07-18T18:13:01.025796+0200 mgr.pxb2 (mgr.14107) 1581 : cluster 0 pgmap v1582: 0 pgs: ; 0 B data, 0 B used, 0 B / 0 B avail
2024-07-18T18:13:03.026115+0200 mgr.pxb2 (mgr.14107) 1582 : cluster 0 pgmap v1583: 0 pgs: ; 0 B data, 0 B used, 0 B / 0 B avail
2024-07-18T18:13:05.026470+0200 mgr.pxb2 (mgr.14107) 1583 : cluster 0 pgmap v1584: 0 pgs: ; 0 B data, 0 B used, 0 B / 0 B avail
2024-07-18T18:13:07.026740+0200 mgr.pxb2 (mgr.14107) 1584 : cluster 0 pgmap v1585: 0 pgs: ; 0 B data, 0 B used, 0 B / 0 B avail
2024-07-18T18:13:09.027006+0200 mgr.pxb2 (mgr.14107) 1585 : cluster 0 pgmap v1586: 0 pgs: ; 0 B data, 0 B used, 0 B / 0 B avail
2024-07-18T18:14:37.723712+0200 mon.pxb2 (mon.0) 1 : cluster 1 mon.pxb2 is new leader, mons pxb2 in quorum (ranks 0)
2024-07-18T18:14:37.723795+0200 mon.pxb2 (mon.0) 2 : cluster 0 monmap e1: 1 mons at {pxb2=[v2:172.16.5.26:3300/0,v1:172.16.5.26:6789/0]} removed_ranks: {} disallowed_leaders: {}
2024-07-18T18:14:37.724278+0200 mon.pxb2 (mon.0) 3 : cluster 0 fsmap
2024-07-18T18:14:37.724294+0200 mon.pxb2 (mon.0) 4 : cluster 0 osdmap e3: 0 total, 0 up, 0 in
2024-07-18T18:14:37.726016+0200 mon.pxb2 (mon.0) 5 : cluster 0 mgrmap e10: pxb2(active, since 54m)
2024-07-18T18:14:42.719049+0200 mon.pxb2 (mon.0) 6 : cluster 1 Manager daemon pxb2 is unresponsive. No standby daemons available.
2024-07-18T18:14:42.725515+0200 mon.pxb2 (mon.0) 7 : cluster 0 osdmap e4: 0 total, 0 up, 0 in
2024-07-18T18:14:42.725634+0200 mon.pxb2 (mon.0) 8 : cluster 0 mgrmap e11: no daemons active (since 0.00659005s)
2024-07-18T18:21:23.288566+0200 mon.pxb2 (mon.0) 1 : cluster 1 mon.pxb2 is new leader, mons pxb2 in quorum (ranks 0)
2024-07-18T18:21:23.288627+0200 mon.pxb2 (mon.0) 2 : cluster 0 monmap e1: 1 mons at {pxb2=[v2:172.16.5.26:3300/0,v1:172.16.5.26:6789/0]} removed_ranks: {} disallowed_leaders: {}
2024-07-18T18:21:23.289057+0200 mon.pxb2 (mon.0) 3 : cluster 0 fsmap
2024-07-18T18:21:23.289073+0200 mon.pxb2 (mon.0) 4 : cluster 0 osdmap e4: 0 total, 0 up, 0 in
2024-07-18T18:21:23.290465+0200 mon.pxb2 (mon.0) 5 : cluster 0 mgrmap e11: no daemons active (since 6m)
2024-07-18T19:32:16.994758+0200 mon.pxb2 (mon.0) 1 : cluster 1 mon.pxb2 is new leader, mons pxb2 in quorum (ranks 0)
2024-07-18T19:32:16.994838+0200 mon.pxb2 (mon.0) 2 : cluster 0 monmap e1: 1 mons at {pxb2=[v2:172.16.5.26:3300/0,v1:172.16.5.26:6789/0]} removed_ranks: {} disallowed_leaders: {}
2024-07-18T19:32:16.995286+0200 mon.pxb2 (mon.0) 3 : cluster 0 fsmap
2024-07-18T19:32:16.995302+0200 mon.pxb2 (mon.0) 4 : cluster 0 osdmap e4: 0 total, 0 up, 0 in
2024-07-18T19:32:16.996748+0200 mon.pxb2 (mon.0) 5 : cluster 0 mgrmap e11: no daemons active (since 77m)
```

Any help? I do not dare to deploy any production workload on this: if that one mgr goes down, I presume I am in a very bad spot.

Thank you in advance!
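For anyone reading through the cluster log excerpt above, here is a small hypothetical Python helper (my own sketch, not a Ceph or Proxmox tool) that pulls out the most recent monmap and mgrmap entries. The function name `summarize` and the regexes are assumptions that only match the exact log line format pasted above; other Ceph versions or log formats may need different patterns.

```python
import re

# Hypothetical helper, not part of Ceph or Proxmox. The regexes assume the
# exact cluster-log line shape pasted above, e.g.
#   "... cluster 0 monmap e1: 1 mons at {pxb2=[v2:...,v1:...]} removed_ranks: {} ..."
#   "... cluster 0 mgrmap e11: no daemons active (since 77m)"
MONMAP_RE = re.compile(r"monmap e(\d+): (\d+) mons at \{([^}]*)\}")
MGRMAP_RE = re.compile(r"mgrmap e(\d+): (.*)$")
MON_NAME_RE = re.compile(r"([\w.-]+)=\[")


def summarize(log_text):
    """Return (last monmap entry, last mgrmap entry) found in a log excerpt."""
    last_monmap = None
    last_mgrmap = None
    for line in log_text.splitlines():
        mon = MONMAP_RE.search(line)
        if mon:
            last_monmap = {
                "epoch": int(mon.group(1)),
                "count": int(mon.group(2)),
                # Monitor names are the keys in front of each "=[" inside {...}.
                "mons": MON_NAME_RE.findall(mon.group(3)),
            }
        mgr = MGRMAP_RE.search(line)
        if mgr:
            last_mgrmap = {"epoch": int(mgr.group(1)), "state": mgr.group(2).strip()}
    return last_monmap, last_mgrmap


# Two lines copied verbatim from the excerpt above.
SAMPLE = """\
2024-07-18T19:32:16.994838+0200 mon.pxb2 (mon.0) 2 : cluster 0 monmap e1: 1 mons at {pxb2=[v2:172.16.5.26:3300/0,v1:172.16.5.26:6789/0]} removed_ranks: {} disallowed_leaders: {}
2024-07-18T19:32:16.996748+0200 mon.pxb2 (mon.0) 5 : cluster 0 mgrmap e11: no daemons active (since 77m)
"""

if __name__ == "__main__":
    monmap, mgrmap = summarize(SAMPLE)
    print(monmap)
    print(mgrmap)
```

Run against the last monmap and mgrmap lines of the excerpt, it reports a monmap (epoch 1) with a single monitor, pxb2, and an mgrmap (epoch 11) with no active daemons.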