Hi

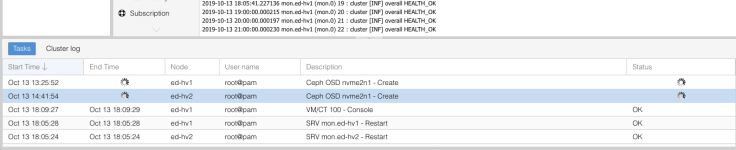

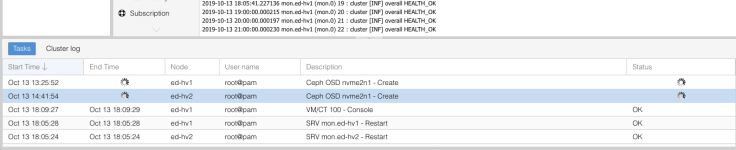

I tried to bring up a test platform for PVE and Ceph today. It's a 4-node cluster with NVMe drives for data storage. All was fine until I tried to create the OSDs. Via the web UI I picked the first data drive on a couple of the cluster nodes and selected them as OSDs. Eight hours later, the tasks are still running.

On another node I tried using ceph-volume to create 4 OSDs on a drive, and that's also still running. I created a filesystem on another drive, mounted it, and ran a dd to it in case the OS was having problems talking to the hardware. That was all fine, with very fast throughput.
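For anyone wanting to repeat the hardware sanity check described above, here is a minimal sketch of that kind of dd write test. It is an illustration, not the exact command used in this post. The target directory is an assumption (pass the mount point of the test filesystem), and `write_test` and `ddtest.bin` are hypothetical names. `conv=fdatasync` makes dd flush to the device before it reports, so the figure is not just page-cache speed.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write COUNT x 1 MiB of zeros into TARGET_DIR and print
# dd's summary line (bytes copied, elapsed time, throughput).
# TARGET_DIR is an assumption: point it at the mount point of the test drive.
write_test() {
    local target_dir="${1:-/tmp}"
    local count="${2:-1024}"                  # number of 1 MiB blocks
    local testfile="$target_dir/ddtest.bin"   # hypothetical scratch file name
    # dd prints its statistics on stderr; the last line holds the throughput.
    # conv=fdatasync forces the data to disk before dd reports the rate.
    dd if=/dev/zero of="$testfile" bs=1M count="$count" conv=fdatasync 2>&1 | tail -n 1
    rm -f "$testfile"                         # clean up the scratch file
}
```

Usage: `write_test /mnt/test 1024` writes 1 GiB to a filesystem mounted at a (hypothetical) `/mnt/test`.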

I've looked through the logs and can't see anything that looks like a problem. In the OSD log file on the first node, the only entries are:

```
2019-10-13 13:25:59.373 7f18135de000 1 bluestore(/var/lib/ceph/osd/ceph-0/) umount
2019-10-13 13:25:59.373 7f18135de000 4 rocksdb: [db/db_impl.cc:390] Shutdown: canceling all background work
2019-10-13 13:25:59.373 7f18135de000 4 rocksdb: [db/db_impl.cc:563] Shutdown complete
2019-10-13 13:25:59.373 7f18135de000 1 bluefs umount
2019-10-13 13:25:59.373 7f18135de000 1 fbmap_alloc 0x560145214800 shutdown
2019-10-13 13:25:59.373 7f18135de000 1 bdev(0x560145f7ce00 /var/lib/ceph/osd/ceph-0//block) close
2019-10-13 13:25:59.661 7f18135de000 1 fbmap_alloc 0x560145214300 shutdown
2019-10-13 13:25:59.661 7f18135de000 1 freelist shutdown
2019-10-13 13:25:59.661 7f18135de000 1 bdev(0x560145f7c700 /var/lib/ceph/osd/ceph-0//block) close
2019-10-13 13:25:59.905 7f18135de000 0 created object store /var/lib/ceph/osd/ceph-0/ for osd.0 fsid f9441e6e-4288-47df-b174-8a432726870c
```

That job is still running and it's now 21:12:00, so something clearly isn't right. On that box I'm seeing:

```
root@ed-hv1:/var/log/ceph# ceph-volume lvm list

====== osd.0 =======

  [block]       /dev/ceph-9a059803-9647-4b23-9c27-d5bcc45b852a/osd-block-6dc00288-c9e6-4b15-8139-21d81c115be4

      block device              /dev/ceph-9a059803-9647-4b23-9c27-d5bcc45b852a/osd-block-6dc00288-c9e6-4b15-8139-21d81c115be4
      block uuid                PXYumK-fJ8b-oU7X-qsju-4sVQ-llMz-wyMWDI
      cephx lockbox secret
      cluster fsid              f9441e6e-4288-47df-b174-8a432726870c
      cluster name              ceph
      crush device class        None
      encrypted                 0
      osd fsid                  6dc00288-c9e6-4b15-8139-21d81c115be4
      osd id                    0
      type                      block
      vdo                       0
      devices                   /dev/nvme2n1

root@ed-hv1:/var/log/ceph# lvs | grep osd
  osd-block-6dc00288-c9e6-4b15-8139-21d81c115be4 ceph-9a059803-9647-4b23-9c27-d5bcc45b852a -wi-a----- <1.82t

root@ed-hv1:/var/log/ceph# ceph osd df
ID CLASS WEIGHT REWEIGHT SIZE RAW USE DATA OMAP META AVAIL %USE  VAR  PGS STATUS
 0            0        0  0 B     0 B  0 B  0 B  0 B   0 B    0 1.00    0   down
 1            0        0  0 B     0 B  0 B  0 B  0 B   0 B    0 1.00    0   down
 2            0        0  0 B     0 B  0 B  0 B  0 B   0 B    0 1.00    0   down
                   TOTAL  0 B     0 B  0 B  0 B  0 B   0 B    0
MIN/MAX VAR: -/-  STDDEV: 0
```
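Every OSD in that listing is `down` with zero weight and size. When re-checking after each attempt, the down IDs can be pulled out mechanically. This is a small sketch, assuming the ID is the first column and STATUS the last, as in the output above. `down_osds` is a hypothetical helper name, not part of Ceph.

```shell
# Hypothetical helper: read `ceph osd df` output on stdin and print "osd.<id>"
# for every row whose last field (STATUS) is "down".
# NR > 1 skips the header row; the TOTAL and MIN/MAX summary lines never end
# in "down", so they are ignored. awk splits on runs of whitespace, so an
# empty CLASS column does not affect $1 or $NF.
down_osds() {
    awk 'NR > 1 && $NF == "down" { print "osd." $1 }'
}
```

Usage on a live cluster: `ceph osd df | down_osds`. Reading from stdin also means it works on saved output pasted from another node.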

This is my first day with PVE and Ceph, so I'm not sure where else to look. Any pointers would be appreciated.

Thanks

David
