Index:

1. Create Classes

2. Crushmap

3. Creating Rules

4. Adding Pools

--------

I've pieced together information from various threads and thought I'd compile it here for (hopefully) easier consumption and use.

If you're visiting this topic you probably already know what Ceph is and what it can do for you. Ceph has been really cool for me so far, and Proxmox overall is pretty cool too. A couple of things we'll address in this small tutorial:

List OSDs and their classes:

*** Note the IDs of the devices you want to change, and unset and set the new class to the device:

Unset the class ssd for device 20:

Set the class to nvme for device 20:

Do the above for all OSD IDs that you want to change to a particular class.

Now we need to be sure ceph doesn't default the devices, so we disable the update on start. This will allow us to modify classes as they won't be set when they come up.

Modify your local /etc/ceph/ceph.conf to include an osd entry:

I THINK you have to hup the osd service but I'm not really sure to be honest right now

You can see the crushmap in the UI @

To understand a crushmap we need to do the following.

Get crushmap to compiled file

Decompile the crushmap

Compile the crushmap, maintaining original compiled crushmap file (you choose how you want to manage the files)

Import the new crushmap

Now that we know how to edit a crushmap, let's edit a crushmap!

Alright, we have a new class created but not really doing anything. So now we need to associate a class to a rule.

Using the above crushmap instructions, decompile and edit your crush map rules. The default rule name with ID 0 is something like replicated.

I decided to delete the default rule and replace it with 3 rules. The rules are what link the classes to the pool, which we'll do in the last step. Delete the original rule id 0 and replace with these 3. This works for this example as we have 3 devices types but your discretion here what you do to your own environment. Most would create an SSD and HDD rule, for example.

Don't forget to compile and import your new crush file!

You can test the crushfile like so, but

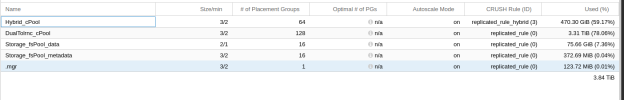

Create a pool name, like pool-ssd, and bind the new rule to that pool name. This will result in any new device that is associated with a particular class, automagically get associated with a pool based on your need. I think this is pretty damn cool. Don't you?

Your new rules are available in the pool creation:

Now you can do the following:

1. Create Classes

2. Crushmap

3. Creating Rules

4. Adding Pools

--------

Piecing together information i've obtained from various threads, thought I'd compile it here for hopefully, easier consumption and use.

If you're visiting this topic you probably already know what ceph is and what it can do for you. ceph so far for me, has been really cool and proxmox really overall is pretty cool. A couple things we'll address in this small tutorial:

- The device classes Ceph assigned to my OSDs by default were hdd and ssd.

- Even if you have NVMe drives, they get associated with the ssd class. That's kind of crappy; it's like SSDs defaulting to the hdd class. We really need three classes here.

- Classes give you a way to steer VMs or LXC containers to particular media, which means you can put some things on HDD, others on SSD and still others on super fast NVMe storage.

- Me, for example: I want large media storage on HDD, but I want the VMs and containers that process that data on NVMe drives. SSD runs other things that aren't storage or speed intensive, like a distil instance checking prices of things for me around the internet.

- By default, any pool you create spreads its data across all OSDs, whatever their class.

- Out of the box, nothing ties a pool to a specific set of devices.

- If you're like some of us, you have multiple nodes with multiple device types: ssd, hdd and nvme, to name a few.

- As mentioned above, we want to group these into pools.

Creating a New Class

Classes, if you don't know, are a way to categorize devices, really based on their speed. I suppose you could also create classes for things like size. There are a few different ways to leverage classes. For this tutorial, we're going to add an nvme class.

List OSDs and their classes:

ceph osd crush tree --show-shadow

Code:

ID   CLASS  WEIGHT    TYPE NAME
-16  nvme    4.54498  root default~nvme
-10         37.75299      host host1
 13  hdd     9.09499          osd.13
 14  hdd     9.09499          osd.14
 15  hdd     9.09499          osd.15
 16  hdd     9.09499          osd.16
 20  ssd     0.90900          osd.20 ***
 17  ssd     0.46500          osd.17
 -7         37.74199      host host2
  8  hdd     9.09499          osd.8
  9  hdd     9.09499          osd.9
 10  hdd     9.09499          osd.10
 11  hdd     9.09499          osd.11
  7  ssd     0.90900          osd.7 ***
 18  ssd     0.45399          osd.18
 -3         39.56000      host host3
  0  hdd     9.09499          osd.0
  1  hdd     9.09499          osd.1
  2  hdd     9.09499          osd.2
  3  hdd     9.09499          osd.3
  4  ssd     0.90900          osd.4 ***
  5  ssd     0.90900          osd.5 ***
  6  ssd     0.90900          osd.6 ***
 19  ssd     0.45399          osd.19

*** These 0.90900 drives (1 TB) are the NVMe disks, but see how they're classed as ssd? Note the OSD number: for osd.20 that's 20.

Note the IDs of the devices you want to change, then unset the old class and set the new class on each device:

Unset the class ssd for device 20:

ceph osd crush rm-device-class osd.20

Set the class to nvme for device 20:

ceph osd crush set-device-class nvme osd.20

Do the above for all OSD IDs that you want to change to a particular class.
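With more than a couple of OSDs, typing that pair of commands per device gets tedious. Here is a minimal sketch of how the step could be scripted. The helper names pick_osds and reclass_cmds are made up for this example, and it assumes the tree output has the ID, CLASS, WEIGHT and NAME columns in the order shown in the listing. It only prints commands, so nothing touches the cluster until you review the output and pipe it to sh yourself.

```shell
#!/bin/sh
# Sketch only: both helpers are hypothetical names, not part of ceph.

# pick_osds CLASS WEIGHT
# Reads `ceph osd crush tree`-style rows on stdin and prints the ID of each
# OSD whose CLASS and WEIGHT columns match. IDs are printed once even if the
# same OSD shows up again in a shadow tree.
pick_osds() {
    awk -v cls="$1" -v w="$2" \
        '$2 == cls && $3 == w && $4 ~ /^osd\.[0-9]+$/ && !seen[$1]++ { print $1 }'
}

# reclass_cmds NEW_CLASS ID...
# Prints (does not run) the rm-device-class / set-device-class pair per ID.
reclass_cmds() {
    reclass_new=$1
    shift
    for reclass_id in "$@"; do
        echo "ceph osd crush rm-device-class osd.$reclass_id"
        echo "ceph osd crush set-device-class $reclass_new osd.$reclass_id"
    done
}

# Example use on a live cluster (review the output before running it):
#   ids=$(ceph osd crush tree | pick_osds ssd 0.90900)
#   reclass_cmds nvme $ids        # append "| sh" once it looks right
```

Matching on weight is just a convenient way to find the 1 TB drives in this particular listing; if your NVMe and SSD drives are the same size you'd have to pick the IDs by hand.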

Now we need to be sure Ceph doesn't put the devices back into their default class, so we disable the class update on start. With that turned off, the classes we set by hand stick, because OSDs no longer set their own class when they come up.

Modify your local /etc/ceph/ceph.conf to include an osd entry:

Code:

[osd]
osd_class_update_on_start = false

I THINK you have to restart the OSD service for this to take effect, but to be honest I'm not really sure right now:

systemctl restart ceph-osd.target

Crushmap

You can see the crushmap in the UI at:

Host >> Ceph >> Configuration

To work with a crushmap we need to know how to do the following:

- Export and decompile a crush map

- Read and edit a crush map

- Import a crush map

- Rules to Pools

Get the crushmap as a compiled file:

ceph osd getcrushmap -o crushmap.compiled

Decompile the crushmap:

crushtool -d crushmap.compiled -o crushmap.text

Compile the crushmap, keeping the original compiled crushmap file (you choose how you want to manage the files):

crushtool -c crushmap.text -o crushmap.compiled.new

Import the new crushmap:

ceph osd setcrushmap -i crushmap.compiled.new

Now that we know how to edit a crushmap, let's edit a crushmap!
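The four commands fall into two halves: export and decompile before you edit, compile and import afterwards. A small sketch that keeps them together, using the same file names as above. crush_cmds is a hypothetical helper name, and like everything here it only prints the commands rather than running them.

```shell
#!/bin/sh
# crush_cmds STAGE [BASENAME]
# Prints (does not run) the commands for one half of the round trip.
#   export: dump the live map and decompile it to text for editing
#   import: compile the edited text and load it into the cluster
# BASENAME defaults to "crushmap", matching the file names used above.
crush_cmds() {
    crush_base=${2:-crushmap}
    case "$1" in
        export)
            echo "ceph osd getcrushmap -o $crush_base.compiled"
            echo "crushtool -d $crush_base.compiled -o $crush_base.text"
            ;;
        import)
            # Joined with && so a compile error stops before anything is imported.
            echo "crushtool -c $crush_base.text -o $crush_base.compiled.new && ceph osd setcrushmap -i $crush_base.compiled.new"
            ;;
        *)
            echo "usage: crush_cmds export|import [basename]" >&2
            return 2
            ;;
    esac
}

# Example:
#   crush_cmds export | sh      # then edit crushmap.text
#   crush_cmds import | sh
```

Because the import half writes to crushmap.compiled.new, the original crushmap.compiled stays around as a fallback you can re-import with ceph osd setcrushmap -i if the edit goes wrong.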

Creating New Rules

Alright, we have a new class, but it isn't really doing anything yet. So now we need to associate the class with a rule.

Using the crushmap instructions above, decompile the crush map and edit its rules. The default rule, with ID 0, is named something like replicated_rule.

I decided to delete the default rule and replace it with three rules. The rules are what link the classes to the pools, which we'll do in the last step. Delete the original rule with id 0 and replace it with these three. This works for this example because we have three device types, but use your own discretion in your own environment. Most people would create an SSD rule and an HDD rule, for example. Keep in mind that pools refer to their rule by id, so any existing pool still on rule 0 will follow the new rule 0 (replicated_hdd here) and move its data accordingly.

Code:

# rules
rule replicated_hdd {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default class hdd
    step chooseleaf firstn 0 type host
    step emit
}
rule replicated_ssd {
    id 1
    type replicated
    min_size 1
    max_size 10
    step take default class ssd
    step chooseleaf firstn 0 type host
    step emit
}
rule replicated_nvme {
    id 2
    type replicated
    min_size 1
    max_size 10
    step take default class nvme
    step chooseleaf firstn 0 type host
    step emit
}

Don't forget to compile and import your new crush file!
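The three rules are identical apart from the name, the id and the class in the step take line, so they can be stamped out from a template instead of copy-pasted. A minimal sketch, with emit_rule and emit_all_rules as hypothetical helper names. It just reproduces the stanza layout shown above and prints text for you to paste into the decompiled crushmap.

```shell
#!/bin/sh
# emit_rule CLASS ID
# Prints one replicated rule stanza that only draws from OSDs of CLASS.
# The layout copies the rules above; only name, id and class vary.
emit_rule() {
    cat <<EOF
rule replicated_$1 {
    id $2
    type replicated
    min_size 1
    max_size 10
    step take default class $1
    step chooseleaf firstn 0 type host
    step emit
}
EOF
}

# emit_all_rules
# Regenerates the hdd/ssd/nvme trio with ids 0, 1 and 2.
emit_all_rules() {
    rule_id=0
    for rule_class in hdd ssd nvme; do
        emit_rule "$rule_class" "$rule_id"
        rule_id=$((rule_id + 1))
    done
}

# Example:
#   emit_all_rules            # paste the output under "# rules" in crushmap.text
```

If you add a fourth class later, calling emit_rule with the new class name and the next free id gives you a matching stanza.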

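You can test the crush file like so, but a literal --show-X fails for me as of this writing. As far as I can tell, X is a placeholder for an actual report name, such as --show-statistics, --show-mappings or --show-bad-mappings:

crushtool -i crushmap.compiled.new --test --show-statistics

Create Pool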

Go into the Proxmox UI:

Host >> Ceph >> Pools

Create a pool with a name like pool-ssd and bind the new rule to it. From then on, any new device that gets a particular class is automagically used by the pools whose rule targets that class. I think this is pretty damn cool. Don't you?

Your new rules are available in the pool creation dialog.
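If you'd rather do the pool step from a shell than from the dialog, the same binding can be expressed with the ceph CLI. A minimal sketch: pool_cmds and rebind_cmd are hypothetical helper names, the default of 128 placement groups is only a placeholder to size for your own cluster, and both helpers print the commands instead of running them. A pool created this way would presumably still need to be added as storage in Proxmox afterwards.

```shell
#!/bin/sh
# pool_cmds POOL RULE [PG_NUM]
# Prints (does not run) the commands to create a replicated pool bound to
# RULE and tag it for RBD use. PG_NUM defaults to 128 (placeholder value).
pool_cmds() {
    pool_pg=${3:-128}
    echo "ceph osd pool create $1 $pool_pg $pool_pg replicated $2"
    echo "ceph osd pool application enable $1 rbd"
}

# rebind_cmd POOL RULE
# Prints the command that points an existing pool at a different rule.
rebind_cmd() {
    echo "ceph osd pool set $1 crush_rule $2"
}

# Example:
#   pool_cmds pool-nvme replicated_nvme        # review, then append "| sh"
#   rebind_cmd pool-ssd replicated_ssd
```

Re-binding an existing pool moves its data onto the OSDs the new rule selects, so expect some rebalancing traffic after running it.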

Now you can do the following:

- Upload ISOs to a pool resource and ALL nodes will be able to install from it. Woohoo! (Correction: Ceph is RBD storage only in this case and therefore doesn't support the ISO Image content type, only Container and Disk Image.)

- I believe HA is now feasible, since you can put VMs on these shared pools. That's what I'm going to test out tomorrow.

- Install VMs on the media that works best for your needs.
