Ceph Luminous now defaults to creating BlueStore OSDs instead of FileStore. Whilst this avoids the double write penalty and promises a 100% increase in speed, it will probably frustrate a lot of people when their resulting throughput is many times slower than it was previously.

We trawled countless discussion forums before investing in tin to set up our first Ceph cluster approximately 1.5 years ago. We didn't have huge storage capacity requirements but wanted a highly available and linearly scalable architecture. We acquired 3 x 1U Intel Wildcat Pass server systems, each with 4 x 64 GB LR-DIMMs (expandable to 1.5 TB), dual Intel Xeon E5-2640 v4 CPUs (20 cores, or 40 threads with Hyper-Threading, per 1U), 2 x 10GbE UTP and 2 x 10GbE SFP+ interfaces. We like Intel original equipment: we are familiar with its quirks, we are happy with Intel's support and long firmware update history and, in particular, the chassis ships with empty drive caddies (yuck to HP & Dell). The drives are natively accessible via AHCI and we've purchased the NVMe conversion kits so that we can eventually upgrade to faster storage. Each system still has empty PCIe slots for future use and there is out-of-band management to avoid unnecessary trips to the DC.

The 1U chassis only provides 8 x 2.5 inch bays, so we installed 2 x 480 GB Intel DC S3610 SSDs and 4 x 2 TB Seagate 4Kn discs. The SSDs are partitioned to provide an 8 GB software RAID-1 for Proxmox, a 256 GB software RAID-1 for swap and 3 x 60 GB partitions to serve as SSD journals. Each FileStore OSD therefore had its journal in a partition on one of the SSDs, and performance was... surprisingly good.

The PVE 5.1 and Ceph Luminous upgrade process was painless, so we were excited to migrate our OSDs to BlueStore. My understanding of BlueStore was that it doesn't require a journal, because writes to the hdd OSDs are atomic. Information previously stored in extended attributes is now stored in RocksDB, and that relatively tiny database has its own WAL (write ahead log). The WAL is automatically located together with the DB, but can be split out should the system additionally have NVMe or 3D XPoint memory.
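To make the DB/WAL placement concrete, here is a dry-run sketch that only prints a `ceph-disk` command rather than running it. It mirrors the `ceph-disk prepare --bluestore ... --block.db ...` invocation from the Construction section; the function name `bluestore_prepare_cmd`, the optional `--block.wal` argument for a separate WAL device and the `/dev/nvme0n1p1` path below are assumptions for illustration, not part of our setup.

```bash
# Hypothetical helper: print (not run) a ceph-disk command that creates a
# BlueStore OSD on DATA with RocksDB on DB and, optionally, its WAL on WAL.
# Without a WAL argument the WAL stays alongside the DB.
bluestore_prepare_cmd() {
  local data=$1 db=$2 wal=${3:-}
  local cmd="ceph-disk prepare --bluestore $data --block.db $db"
  if [ -n "$wal" ]; then
    # Assumed separate fast device (e.g. NVMe) for the RocksDB WAL
    cmd="$cmd --block.wal $wal"
  fi
  echo "$cmd"
}
```

For example, `bluestore_prepare_cmd /dev/sdc /dev/sda3` prints the DB-only form we used, while `bluestore_prepare_cmd /dev/sdc /dev/sda3 /dev/nvme0n1p1` adds the split-out WAL.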

After validating that Ceph was healthy (3/2 replica pools, i.e. size 3 and min_size 2), we destroyed the FileStore OSDs on a given host and re-created them, placing RocksDB in the former SSD journal partitions. After waiting for replication to complete, we moved on to the next host. Our cluster had in the interim grown to 6 nodes and we only started this work after 6pm, so the process took approximately a week to complete.
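We waited for replication by watching the cluster. A minimal sketch of scripting that wait, assuming the `ceph` CLI is available on the host and that `ceph health` prints `HEALTH_OK` once recovery has finished, could look like this; the function name `wait_for_health_ok` and the 60 second default polling interval are our own choices.

```bash
# Sketch: block until `ceph health` reports HEALTH_OK, polling every
# INTERVAL seconds (default 60, an arbitrary choice).
wait_for_health_ok() {
  local interval=${1:-60}
  until ceph health | grep -q '^HEALTH_OK'; do
    sleep "$interval"
  done
}
```

Per host, one would re-create the OSDs, call `wait_for_health_ok` and only then move on to the next host.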

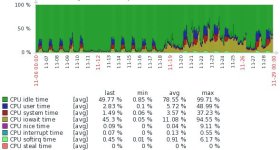

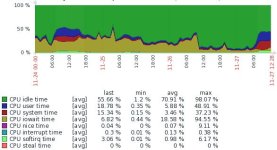

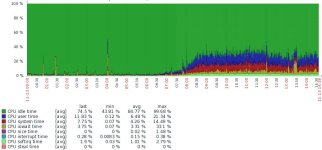

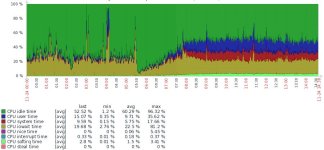

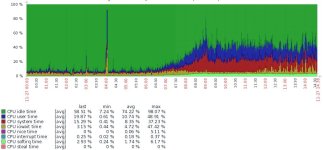

A breakdown of CPU activity for one guest VM in our cluster illustrates the problem. Upgrading PVE and Ceph started early evening on the 18th of November, and the spike at that point is objects being moved around after the CRUSH tunables were changed to optimal.

This virtual machine runs Check Point vSEC and is essentially part of a firewall-as-a-service offering. The problem here is that Check Point are still using a relatively ancient kernel, specifically 2.6.18 from RHEL5. Whilst Ceph RBD can run in writeback mode to buffer random writes, it stays in writethrough mode until the VM sends its first flush command. The flush function is, however, only available in kernel 2.6.32 or later, so for this VM RBD permanently runs in writethrough mode.
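The reasoning above boils down to a version check. The sketch below encodes it, assuming the RBD cache is enabled and taking the 2.6.32 threshold from our findings; on the Ceph side this behaviour is controlled by the client option `rbd_cache_writethrough_until_flush`. The function name `rbd_cache_mode_for_guest` is hypothetical, and it relies on GNU `sort -V` for version comparison.

```bash
# Sketch: report which RBD cache mode a guest ends up in, given its kernel
# version. Assumes flush support starts at 2.6.32, as described above.
rbd_cache_mode_for_guest() {
  local kernel=$1 min=2.6.32
  # sort -V lists the lower version first; if that is not $min, the guest is older
  if [ "$(printf '%s\n' "$kernel" "$min" | sort -V | head -n1)" != "$min" ]; then
    echo "writethrough"
  else
    echo "writeback after first flush"
  fi
}
```

With the vSEC kernel, `rbd_cache_mode_for_guest 2.6.18` prints `writethrough`, whereas a PVE 5.1-era kernel such as 4.13.4 would switch to writeback after its first flush.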

The impact wasn't noticeable previously, as writes were acknowledged as complete once they were absorbed by the SSD journals. BlueStore OSDs don't journal writes; they use RocksDB exclusively for object metadata. This means that writes need to land on the spinning discs before they are acknowledged.

Unfortunately it takes time for people to speak up, so by the time we received our first reports of degraded performance on Wednesday afternoon we were well committed to the conversion process.

I spent virtually all of Thursday identifying the problem and trying to find a solution. bcache looked promising: it uses the random access advantage of an SSD to absorb read and write requests, and then sequentially drains its cache to slower bulk storage. bcache was merged into the 3.9 kernel and Debian Stretch (PVE 5) includes bcache-tools as a pre-built package. Kernel 4.13 (PVE 5.1) also now supports partitioning bcache block devices, so we had a solution.

We destroyed the OSDs on a host again, created bcache block devices on the HDDs, repartitioned our SSDs and attached partitions on the SSDs as caches to the bcache block devices, and initially gained... nothing. It didn't take long for us to realise that bcache comes from a time when SSDs were fast at random reads and writes but slow at sequential transfers. bcache therefore stops caching once it detects 4 MB or more of sequential data (its default sequential_cutoff), which happens to be the default Ceph object size. After disabling that cut-off we had good performance, and we completed the process on the rest of the nodes over the weekend.
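To confirm that the cut-off really was disabled and that the caches attached, a small reporting sketch can read the bcache sysfs attributes. It assumes the standard `/sys/block/bcacheN/bcache/` layout with `state`, `cache_mode` and `sequential_cutoff` files; the function name `bcache_report` is hypothetical, and the root directory is a parameter only so the function can be tried against a fake tree.

```bash
# Sketch: print each bcache device's state, active cache mode and
# sequential cut-off. ROOT defaults to /sys/block.
bcache_report() {
  local root=${1:-/sys/block} dir dev mode
  for dir in "$root"/bcache*/bcache; do
    [ -d "$dir" ] || continue
    dev=$(basename "$(dirname "$dir")")
    # cache_mode lists every mode and brackets the active one,
    # e.g. "writethrough [writeback] writearound none"
    mode=$(sed 's/.*\[\(.*\)\].*/\1/' "$dir/cache_mode")
    printf '%s state=%s mode=%s sequential_cutoff=%s\n' \
      "$dev" "$(cat "$dir/state")" "$mode" "$(cat "$dir/sequential_cutoff")"
  done
}
```

A device still showing `state=no cache` has not attached to its cache set, and a non-zero `sequential_cutoff` means large sequential writes will still bypass the SSD.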



Notes:

- Although these benchmarks were run on a Sunday morning, approximately 134 virtual machines were still servicing workload, so each test was run three times to smooth out bursts affecting the results.

- A nice side effect is that trim/discard is passed through from the VM to Ceph, which can reclaim unused storage when contiguous runs of discarded blocks cover entire underlying Ceph objects (4 MB).

- The cluster comprises 5 hyper-converged hosts, each containing 2 x Intel DC S3610 480 GB SATA SSDs and 4 x Seagate ST2000NX0243 discs (2 TB 4Kn SATA).

- Triple replication, so the cluster provides 12 TB of usable storage.

- SATA hdd OSDs have their BlueStore RocksDB, RocksDB WAL (write ahead log) and bcache partitions on an SSD (2:1 ratio).

- An SSD failure will take down its associated hdd OSDs (sda = sdc & sde; sdb = sdd & sdf).
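The arithmetic behind two of the notes above can be sketched as follows. `usable_tib` divides raw capacity by the replica count and ignores BlueStore overhead and Ceph's full ratios; `whole_objects_discarded` assumes the default RBD layout without custom striping, where object N covers bytes N x 4 MiB up to (N + 1) x 4 MiB, and counts only objects a discard covers completely, as the note describes. Both function names are hypothetical.

```bash
# Usable capacity in TiB: hosts * discs per host * bytes per disc / replicas.
# Ignores BlueStore overhead and Ceph's full ratios.
usable_tib() {
  awk -v h="$1" -v d="$2" -v b="$3" -v r="$4" \
    'BEGIN { printf "%.1f\n", h * d * b / r / 2^40 }'
}

# Number of whole RBD objects (4 MiB by default) fully covered by a
# discard of LEN bytes starting at OFFSET.
whole_objects_discarded() {
  local offset=$1 len=$2 obj=${3:-4194304}
  local first=$(( (offset + obj - 1) / obj ))  # first object starting at or after OFFSET
  local end=$(( (offset + len) / obj ))        # objects ending at or before OFFSET+LEN
  echo $(( end > first ? end - first : 0 ))
}
```

With the figures from the notes, `usable_tib 5 4 2000000000000 3` prints `12.1`, consistent with the 12 TB quoted. A 3 MiB discard frees no whole object, and an 8 MiB discard starting 1 MiB into the image only covers one.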

Ceph Luminous BlueStore hdd OSDs with RocksDB, its WAL and bcache on SSD (2:1 ratio)

Layout:

```
sda1 sdb1 : 1 MB FakeMBR
sda2 sdb2 : 8 GB mdraid1 OS
sda3 sdb3 : 30 GB RocksDB and WAL for sdc and sdd
sda4 sdb4 : 30 GB RocksDB and WAL for sde and sdf
sda5 sdb5 : 60GB bcache for sdc and sdd
sda6 sdb6 : 60GB bcache for sde and sdf
sda7 sdb7 : 256GB mdraid1 swap with discard
sdc sdd sde sdf : BlueStore hdd OSDs
```

Physical chassis view:

```
sdb sdd sdf x
sda sdc sde x

x = reserved for dedicated NVMe or SSD BlueStore OSDs
```

Resulting disc, partition and bcache block device layout:

```
[admin@kvm5e ~]# lsblk
NAME            MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
sda                8:32  0 447.1G  0 disk
├─sda1             8:33  0     1M  0 part
├─sda2             8:34  0   7.8G  0 part
│ └─md0             9:0  0   7.8G  0 raid1 /
├─sda3             8:35  0    30G  0 part
├─sda4             8:36  0    30G  0 part
├─sda5             8:37  0    60G  0 part
│ └─bcache0      251:32  0   1.8T  0 disk
│   ├─bcache0p1  251:33  0   100M  0 part  /var/lib/ceph/osd/ceph-16
│   └─bcache0p2  251:34  0   1.8T  0 part
├─sda6             8:38  0    60G  0 part
│ └─bcache2       251:0  0   1.8T  0 disk
│   ├─bcache2p1   251:1  0   100M  0 part  /var/lib/ceph/osd/ceph-18
│   └─bcache2p2   251:2  0   1.8T  0 part
└─sda7             8:39  0   256G  0 part
  └─md1             9:1  0 255.9G  0 raid1 [SWAP]
sdb                8:48  0 447.1G  0 disk
├─sdb1             8:49  0     1M  0 part
├─sdb2             8:50  0   7.8G  0 part
│ └─md0             9:0  0   7.8G  0 raid1 /
├─sdb3             8:51  0    30G  0 part
├─sdb4             8:52  0    30G  0 part
├─sdb5             8:53  0    60G  0 part
│ └─bcache1      251:48  0   1.8T  0 disk
│   ├─bcache1p1  251:49  0   100M  0 part  /var/lib/ceph/osd/ceph-17
│   └─bcache1p2  251:50  0   1.8T  0 part
├─sdb6             8:54  0    60G  0 part
│ └─bcache3      251:16  0   1.8T  0 disk
│   ├─bcache3p1  251:17  0   100M  0 part  /var/lib/ceph/osd/ceph-19
│   └─bcache3p2  251:18  0   1.8T  0 part
└─sdb7             8:55  0   256G  0 part
  └─md1             9:1  0 255.9G  0 raid1 [SWAP]
sdc                8:64  0   1.8T  0 disk
└─bcache0        251:32  0   1.8T  0 disk
  ├─bcache0p1    251:33  0   100M  0 part  /var/lib/ceph/osd/ceph-16
  └─bcache0p2    251:34  0   1.8T  0 part
sdd                8:80  0   1.8T  0 disk
└─bcache1        251:48  0   1.8T  0 disk
  ├─bcache1p1    251:49  0   100M  0 part  /var/lib/ceph/osd/ceph-17
  └─bcache1p2    251:50  0   1.8T  0 part
sde                 8:0  0   1.8T  0 disk
└─bcache2         251:0  0   1.8T  0 disk
  ├─bcache2p1     251:1  0   100M  0 part  /var/lib/ceph/osd/ceph-18
  └─bcache2p2     251:2  0   1.8T  0 part
sdf                8:16  0   1.8T  0 disk
└─bcache3        251:16  0   1.8T  0 disk
  ├─bcache3p1    251:17  0   100M  0 part  /var/lib/ceph/osd/ceph-19
  └─bcache3p2    251:18  0   1.8T  0 part
```

Construction:

```bash
# Proxmox 5.1
apt-get install bcache-tools
modprobe bcache
[ ! -d /sys/fs/bcache ] && echo "Warning, kernel module not loaded!"

# Turn each HDD into a bcache backing device (these become bcache0..bcache3)
for f in c d e f; do
  wipefs -a /dev/sd$f
  make-bcache -B /dev/sd$f
done
sleep 5

#make-bcache -C -b2MB -w4k --discard --wipe-bcache /dev/sda5; # bcache 0 (sdc)
# sector size 4k
# bucket = SSD's erase block size (2MB should fit even multiples for MLC and SLC, not TLC though)
# Possible bcache bug, does not attach when using custom bucket and sector size

# Create the SSD cache sets
make-bcache -C /dev/sda5 --wipe-bcache  # bcache 0 (sdc)
make-bcache -C /dev/sdb5 --wipe-bcache  # bcache 1 (sdd)
make-bcache -C /dev/sda6 --wipe-bcache  # bcache 2 (sde)
make-bcache -C /dev/sdb6 --wipe-bcache  # bcache 3 (sdf)

# Attach each cache set, identified by its cset.uuid, to its backing device
bcache-super-show /dev/sda5 | grep cset | awk '{print $2}' > /sys/block/bcache0/bcache/attach
bcache-super-show /dev/sdb5 | grep cset | awk '{print $2}' > /sys/block/bcache1/bcache/attach
bcache-super-show /dev/sda6 | grep cset | awk '{print $2}' > /sys/block/bcache2/bcache/attach
bcache-super-show /dev/sdb6 | grep cset | awk '{print $2}' > /sys/block/bcache3/bcache/attach

# Disable the sequential cut-off (default 4 MB) and switch to writeback caching
for f in /sys/block/bcache?/bcache; do
  echo 0 > $f/sequential_cutoff
  echo writeback > $f/cache_mode
done

# Re-apply the sequential cut-off at boot. The quoted 'EOF' stops the shell
# from expanding $f while the lines are appended to rc.local.
cat >> /etc/rc.local <<'EOF'
# Unset sequential store cut off:
for f in /sys/block/bcache?/bcache; do
  echo 0 > $f/sequential_cutoff
done
EOF
vi /etc/rc.local  # Ensure 'exit' comes after additions

# Re-create each OSD with its original ID, RocksDB (and WAL) on the SSD partition
ID=16
DEVICE=/dev/bcache0  # sdc
JOURNAL=/dev/disk/by-partuuid/$(blkid -s PARTUUID -o value /dev/sda3)
echo $ID; ls -l $DEVICE $JOURNAL
ceph-disk prepare --bluestore $DEVICE --block.db $JOURNAL --osd-id $ID; sleep 20
ceph osd metadata $ID

ID=17
DEVICE=/dev/bcache1  # sdd
JOURNAL=/dev/disk/by-partuuid/$(blkid -s PARTUUID -o value /dev/sdb3)
echo $ID; ls -l $DEVICE $JOURNAL
ceph-disk prepare --bluestore $DEVICE --block.db $JOURNAL --osd-id $ID; sleep 20
ceph osd metadata $ID

ID=18
DEVICE=/dev/bcache2  # sde
JOURNAL=/dev/disk/by-partuuid/$(blkid -s PARTUUID -o value /dev/sda4)
echo $ID; ls -l $DEVICE $JOURNAL
ceph-disk prepare --bluestore $DEVICE --block.db $JOURNAL --osd-id $ID; sleep 20
ceph osd metadata $ID

ID=19
DEVICE=/dev/bcache3  # sdf
JOURNAL=/dev/disk/by-partuuid/$(blkid -s PARTUUID -o value /dev/sdb4)
echo $ID; ls -l $DEVICE $JOURNAL
ceph-disk prepare --bluestore $DEVICE --block.db $JOURNAL --osd-id $ID; sleep 20
ceph osd metadata $ID
```

Ceph RBD benchmarks:

```bash
rados bench -p rbd 120 write --no-cleanup  # MBps throughput: 376/480/220 latency: 0.2s/0.9s/0.0s (avg/max/min)
rados bench -p rbd 120 rand                # MBps throughput: 1167 latency: 0.1s/1.1s/0.0s (avg/max/min)
rados bench -p rbd 120 seq                 # MBps throughput: 1086 latency: 0.1s/1.4s/0.0s (avg/max/min)
rados bench -p rbd 120 write               # MBps throughput: 395/516/184 latency: 0.2s/1.2s/0.0s (avg/max/min)
```

Technet's DiskSpd benchmark utility, run within VM:

https://gallery.technet.microsoft.com/DiskSpd-a-robust-storage-6cd2f223

```
DiskSpd v2.0.17 command:
diskspd -b256K -d60 -h -L -o2 -t4 -r -w30 -c250M c:\io.dat

VM specs:
2 x Haswell-noTSX NUMA cores (host runs 2 x Intel E5-2640 v4 @ 2.40GHz with HyperThreading enabled)
4 GB RAM (LoadReduced-DIMMs)

Ceph Luminous BlueStore hdd OSDs with RocksDB, its WAL and bcache on SSD (2:1 ratio):

Windows 2012r2 - VirtIO SCSI (vioscsi) with KRBD:
1 read : 453.90 MBps 1816 IOPs write : 195.40 MBps 770 IOPs
2 read : 400.85 MBps 1603 IOPs write : 173.17 MBps 693 IOPs
3 read : 427.26 MBps 1709 IOPs write : 184.16 MBps 737 IOPs

Ceph Jewel FileStore hdd OSDs with SSD journals (2:1 ratio):

Windows 2012r2 - After deployment:
1 read : 305.15 MBps 1220 IOPs write : 131.15 MBps 524 IOPs
2 read : 274.90 MBps 1099 IOPs write : 118.10 MBps 472 IOPs
3 read : 290.14 MBps 1161 IOPs write : 124.87 MBps 499 IOPs

Disabled AntiVirus:
4 read : 300.95 MBps 1204 IOPs write : 129.48 MBps 518 IOPs
5 read : 284.69 MBps 1139 IOPs write : 122.40 MBps 490 IOPs
6 read : 307.37 MBps 1230 IOPs write : 132.03 MBps 528 IOPs

Windows 2012r2 - Updated drivers (Viostor):
1 read : 305.15 MBps 1222 IOPs write : 131.55 MBps 526 IOPs
2 read : 297.86 MBps 1191 IOPs write : 128.04 MBps 512 IOPs
3 read : 296.38 MBps 1186 IOPs write : 127.40 MBps 510 IOPs

Windows 2012r2 - VirtIO SCSI (vioscsi):
1 read : 313.74 MBps 1255 IOPs write : 135.16 MBps 541 IOPs
2 read : 314.29 MBps 1257 IOPs write : 135.20 MBps 541 IOPs
3 read : 296.30 MBps 1185 IOPs write : 127.60 MBps 510 IOPs

Windows 2012r2 - VirtIO SCSI (vioscsi) with KRBD:
1 read : 446.36 MBps 1785 IOPs write : 192.46 MBps 770 IOPs
2 read : 460.62 MBps 1842 IOPs write : 198.19 MBps 793 IOPs
3 read : 405.94 MBps 1624 IOPs write : 175.20 MBps 701 IOPs

Windows 2012r2 - Updated drivers (Viostor) with KRBD:
1 read : 448.84 MBps 1795 IOPs write : 193.12 MBps 772 IOPs
2 read : 428.78 MBps 1715 IOPs write : 185.19 MBps 741 IOPs
3 read : 482.75 MBps 1931 IOPs write : 207.37 MBps 829 IOPs

Windows 2012r2 - Updated drivers (Viostor) with KRBD - writeback caching:
1 read : 205.00 MBps 820 IOPs write : 88.38 MBps 354 IOPs
2 read : 206.60 MBps 826 IOPs write : 89.12 MBps 356 IOPs
3 read : 201.35 MBps 805 IOPs write : 86.87 MBps 347 IOPs

Windows 2012r2 - Updated drivers (Viostor) with KRBD - writeback (unsafe) caching:
1 read : 4611.13 MBps 18445 IOPs write : 1973.78 MBps 7895 IOPs
2 read : 5098.30 MBps 20393 IOPs write : 2181.72 MBps 8727 IOPs
3 read : 5084.54 MBps 20338 IOPs write : 2175.36 MBps 8701 IOPs
```
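Because each configuration was run three times, comparing them is easier with averages. A minimal sketch, assuming result lines in exactly the `N read : X MBps ... write : Y MBps ...` format used above (the function name `average_diskspd` is hypothetical):

```bash
# Sketch: average read and write throughput of numbered DiskSpd result lines
# read from stdin; non-result lines (headings, blank lines) are ignored.
average_diskspd() {
  awk '/^[0-9]+ read :/ { r += $4; w += $10; n++ }
       END { if (n) printf "read %.2f MBps write %.2f MBps (%d runs)\n", r / n, w / n, n }'
}
```

Piping in the three BlueStore runs prints `read 427.34 MBps write 184.24 MBps (3 runs)`; the same can be done for each FileStore configuration.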
