Hi

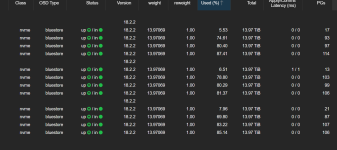

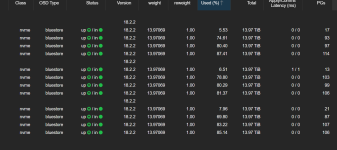

We have an EPYC 9554-based cluster with 3x WD SN650 PCIe Gen4 NVMe 15.36 TB drives in each server, all backed by 100G Ethernet on Mellanox MT27700 (ConnectX-4) cards.

Output of `lspci | grep Ethernet`:

```
03:00.0 Ethernet controller: Broadcom Inc. and subsidiaries BCM57416 NetXtreme-E Dual-Media 10G RDMA Ethernet Controller (rev 01)
03:00.1 Ethernet controller: Broadcom Inc. and subsidiaries BCM57416 NetXtreme-E Dual-Media 10G RDMA Ethernet Controller (rev 01)
41:00.0 Ethernet controller: Mellanox Technologies MT27700 Family [ConnectX-4]
41:00.1 Ethernet controller: Mellanox Technologies MT27700 Family [ConnectX-4]
```

We run the Proxmox Ceph storage on the 100G links; the 10G links are reserved for inter-VM and Internet traffic.

-----------------------------------------------------------------------------------------------------------------------------------------------------------

We have added another 15.36 TB NVMe drive to each server, so there are now 4 drives per server. (We also have plenty of RAM: 1.5 TB per box, 24 × 64 GB DDR5.)

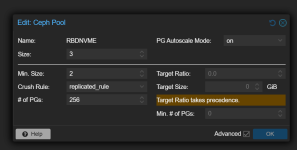

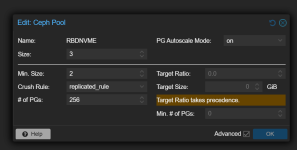

We also increased the PG count from 128 to 256, the value recommended by Ceph in the Pools panel of the Proxmox GUI.
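As a rough cross-check, the classic rule of thumb in the Ceph documentation targets about 100 PGs per OSD, divides by the replica count, and rounds up to a power of two. The numbers below assume a single data pool with the Proxmox default `size = 3` and the 12 OSDs now in the cluster (4 per host × 3 hosts). The autoscaler also weighs each pool's share of the stored data, so this is only a sanity check:

$$
\text{PGs}_{\text{total}} \approx \frac{N_{\text{OSD}} \times 100}{\text{size}} = \frac{12 \times 100}{3} = 400 \;\rightarrow\; 512 \ \text{(next power of two)}
$$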

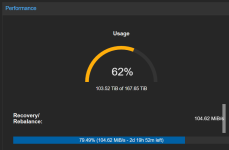

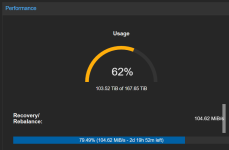

Since we were short of space (the cluster had reached 85% usage), we added 3 more 15.36 TB NVMe drives, one in each of the 3 hosts, to increase capacity. This triggered a rebalance.

Our rebalancing is very slow, only 60-100 Mbps, which is very poor given that there is negligible I/O on the cluster (so client load is definitely not the cause).
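A back-of-the-envelope estimate of what this rate means. It assumes that "Mbps" is megabits per second, that the 9 original OSDs were evenly filled to 85%, and that each host's new OSD ends up holding roughly a quarter of that host's data:

$$
D_{\text{move}} \approx \tfrac{1}{4} \times 0.85 \times 9 \times 15.36\ \text{TB} \approx 29.4\ \text{TB}
$$

$$
t \approx \frac{29.4 \times 10^{12}\ \text{B}}{12.5 \times 10^{6}\ \text{B/s}} \approx 2.35 \times 10^{6}\ \text{s} \approx 27\ \text{days at } 100\ \text{Mbit/s}
$$

At 60 Mbit/s (7.5 MB/s) the same estimate comes to roughly 45 days.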

Running the commands below does not seem to have any effect:

```
ceph config set osd osd_recovery_max_active 16 --force
ceph config set osd osd_max_backfills 4 --force
```
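One possible explanation applies if the OSDs use the mClock scheduler (`osd_op_queue = mclock_scheduler`, the default in Quincy and Reef). In that case mClock manages `osd_max_backfills` and `osd_recovery_max_active` itself, and manual values are ignored unless `osd_mclock_override_recovery_settings` is set to `true`. That option exists in Reef; we have not checked which Quincy point releases include it. A minimal sketch under those assumptions follows. The helper name `raise_recovery_limits` and its default values are hypothetical, not part of Ceph:

```bash
#!/usr/bin/env bash
# Sketch only. ASSUMPTION: OSDs run the mClock scheduler and the release
# supports osd_mclock_override_recovery_settings (e.g. Reef).
# raise_recovery_limits is a hypothetical helper, not a Ceph command.
raise_recovery_limits() {
  local backfills="${1:-4}"          # concurrent backfills per OSD
  local recovery_active="${2:-16}"   # concurrent recovery ops per OSD

  # Let mClock honour manually set backfill/recovery limits.
  ceph config set osd osd_mclock_override_recovery_settings true
  ceph config set osd osd_max_backfills "$backfills"
  ceph config set osd osd_recovery_max_active "$recovery_active"
}

# Alternative that keeps mClock in charge but favours recovery over client I/O:
#   ceph config set osd osd_mclock_profile high_recovery_ops
```

For example, `raise_recovery_limits 4 16`. Once backfill has finished, `ceph config rm osd osd_max_backfills` (and likewise for the other two keys) returns those options to their defaults.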

This is the output of:

```
ceph-conf --show-config | egrep "osd_recovery_max_active|osd_recovery_op_priority|osd_max_backfills"
```

```
osd_max_backfills = 1
osd_recovery_max_active = 0
osd_recovery_max_active_hdd = 3
osd_recovery_max_active_ssd = 10
osd_recovery_op_priority = 3
```
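`ceph-conf` reads local configuration files and compiled-in defaults. As far as we know, it does not query the monitors' configuration database that `ceph config set` writes to, so the values above may not be what the OSDs are actually running with. Also, `osd_recovery_max_active = 0` is not a cap of zero. It means each OSD falls back to `osd_recovery_max_active_ssd` or `osd_recovery_max_active_hdd` depending on its device type, which is 10 on these NVMe drives. A minimal sketch for checking the running values instead, using only `ceph osd ls` and `ceph config show`. The helper name `show_recovery_settings` is hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: print the values each running OSD reports, not local defaults.
# show_recovery_settings is a hypothetical helper wrapping standard
# `ceph osd ls` and `ceph config show <who> <key>` calls.
show_recovery_settings() {
  local id key
  for id in $(ceph osd ls); do
    for key in osd_op_queue osd_max_backfills osd_recovery_max_active osd_recovery_max_active_ssd; do
      printf 'osd.%s %s = %s\n' "$id" "$key" "$(ceph config show "osd.$id" "$key")"
    done
  done
}
```

If `osd_op_queue` reports `mclock_scheduler`, the mClock-specific options are the ones that govern backfill speed.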

Note: we are running the Ceph and Proxmox enterprise repositories, fully updated to date.

Any ideas on how to solve this and get a decent rebalancing speed?

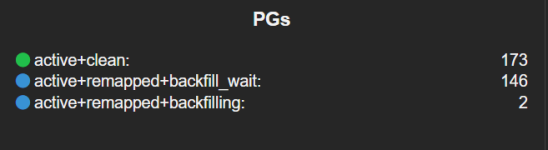

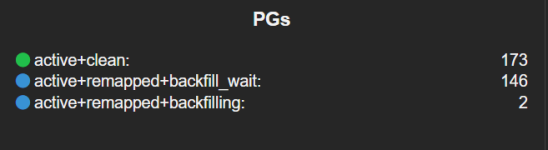

The Ceph status screenshot from the web UI shows only 2 PGs backfilling, sometimes just 1, before it goes back to 2.
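A minimal sketch for checking whether backfills are queued rather than running. It counts PGs in `backfilling` versus `backfill_wait`, assuming the second column of `ceph pg dump pgs_brief` is the PG state (e.g. `active+remapped+backfilling`). The helper name `count_backfilling` is hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: count PGs actively backfilling vs. waiting for a backfill slot.
# ASSUMPTION: column 2 of `ceph pg dump pgs_brief` is the PG state.
# count_backfilling is a hypothetical helper, not a Ceph command.
count_backfilling() {
  ceph pg dump pgs_brief 2>/dev/null |
    awk '$2 ~ /backfilling/   { running++ }
         $2 ~ /backfill_wait/ { waiting++ }
         END { printf "backfilling=%d backfill_wait=%d\n", running, waiting }'
}
```

For example, `while sleep 10; do count_backfilling; done`. A large `backfill_wait` count alongside only 1-2 `backfilling` would suggest that the per-OSD backfill limit, rather than the network or the drives, is the bottleneck.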
