Hello,

On our 8.4.0 clusters, I have been getting warning messages since upgrading Ceph from 19.2.0 to 19.2.1 (pve2/pve3).

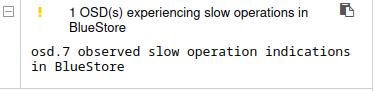

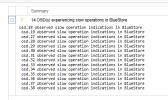

X OSD(s) experiencing slow operations in BlueStore

osd.x observed slow operation indications in BlueStore

osd.y observed slow operation indications in BlueStore

...

I applied the "solution" at https://github.com/rook/rook/discussions/15403

ceph config set global bdev_async_discard_threads 1

ceph config set global bdev_enable_discard true

But this did not resolve the "problem".

Best regards.

Francis
