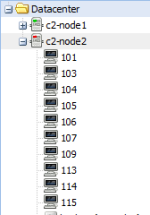

Today we noticed that cman had stopped on one of our nodes for no apparent reason.

Trying to start it again fails:

```
# /etc/init.d/cman start
Starting cluster:
Checking if cluster has been disabled at boot... [ OK ]
Checking Network Manager... [ OK ]
Global setup... [ OK ]
Loading kernel modules... [ OK ]
Mounting configfs... [ OK ]
Starting cman... [ OK ]
Starting qdiskd... [FAILED]
```

cluster.conf on node2:

```xml
# cat /etc/cluster/cluster.conf
<?xml version="1.0"?>
<cluster config_version="11" name="cluster-2">
<cman expected_votes="3" keyfile="/var/lib/pve-cluster/corosync.authkey"/>
<quorumd allow_kill="0" interval="1" label="quorum" tko="10" votes="1"/>
<totem token="54000"/>
<clusternodes>
<clusternode name="c2-node1" nodeid="1" votes="1"/>
<clusternode name="c2-node2" nodeid="2" votes="1"/>
</clusternodes>
<rm/>
</cluster>
```

pveversion:

```
# pveversion -v
proxmox-ve-2.6.32: 3.4-185 (running kernel: 2.6.32-48-pve)
pve-manager: 3.4-16 (running version: 3.4-16/40ccc11c)
pve-kernel-2.6.32-40-pve: 2.6.32-160
pve-kernel-2.6.32-47-pve: 2.6.32-179
pve-kernel-2.6.32-44-pve: 2.6.32-173
pve-kernel-2.6.32-41-pve: 2.6.32-164
pve-kernel-2.6.32-48-pve: 2.6.32-185
pve-kernel-2.6.32-45-pve: 2.6.32-174
pve-kernel-2.6.32-42-pve: 2.6.32-165
pve-kernel-2.6.32-46-pve: 2.6.32-177
pve-kernel-2.6.32-26-pve: 2.6.32-114
pve-kernel-2.6.32-43-pve: 2.6.32-166
lvm2: 2.02.98-pve4
clvm: 2.02.98-pve4
corosync-pve: 1.4.7-1
openais-pve: 1.1.4-3
libqb0: 0.11.1-2
redhat-cluster-pve: 3.2.0-2
resource-agents-pve: 3.9.2-4
fence-agents-pve: 4.0.10-3
pve-cluster: 3.0-20
qemu-server: 3.4-9
pve-firmware: 1.1-6
libpve-common-perl: 3.0-27
libpve-access-control: 3.0-16
libpve-storage-perl: 3.0-35
pve-libspice-server1: 0.12.4-3
vncterm: 1.1-8
vzctl: 4.0-1pve6
vzprocps: 2.0.11-2
vzquota: 3.1-2
pve-qemu-kvm: 2.2-28
ksm-control-daemon: 1.1-1
glusterfs-client: 3.5.2-1
```

The VMs on this node are still running.

However, we cannot manage them through the web interface.
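For anyone reading along: the cluster.conf above gives each node 1 vote and the quorum disk 1 vote, with expected_votes="3". The Python sketch below reads those values and checks which combinations of live nodes and quorum disk would be quorate. It assumes a simple-majority threshold of expected_votes // 2 + 1 (our understanding of how cman computes quorum when two_node is not set, not verified against the cman source). The helper names vote_summary and is_quorate are hypothetical.

```python
import xml.etree.ElementTree as ET

# cluster.conf from node2, copied verbatim from the post above.
CLUSTER_CONF = """<?xml version="1.0"?>
<cluster config_version="11" name="cluster-2">
<cman expected_votes="3" keyfile="/var/lib/pve-cluster/corosync.authkey"/>
<quorumd allow_kill="0" interval="1" label="quorum" tko="10" votes="1"/>
<totem token="54000"/>
<clusternodes>
<clusternode name="c2-node1" nodeid="1" votes="1"/>
<clusternode name="c2-node2" nodeid="2" votes="1"/>
</clusternodes>
<rm/>
</cluster>"""


def vote_summary(conf_xml: str) -> dict:
    """Hypothetical helper: pull the vote-related settings out of a cluster.conf string."""
    root = ET.fromstring(conf_xml)
    expected = int(root.find("cman").get("expected_votes"))
    # Per-node votes; assumed to default to 1 when the attribute is absent.
    node_votes = [int(n.get("votes", "1")) for n in root.iter("clusternode")]
    qdisk = root.find("quorumd")
    # Only an explicit votes attribute is read; cman's own default is not modelled here.
    qdisk_votes = int(qdisk.get("votes", "0")) if qdisk is not None else 0
    return {
        "expected": expected,
        "node_votes": node_votes,
        "qdisk_votes": qdisk_votes,
        # Assumed threshold: a simple majority of expected_votes.
        "quorum": expected // 2 + 1,
    }


def is_quorate(summary: dict, nodes_up: int, qdisk_up: bool) -> bool:
    """Hypothetical helper: do the first `nodes_up` nodes (plus the qdisk, if up) reach quorum?"""
    votes = sum(summary["node_votes"][:nodes_up])
    if qdisk_up:
        votes += summary["qdisk_votes"]
    return votes >= summary["quorum"]


if __name__ == "__main__":
    s = vote_summary(CLUSTER_CONF)
    print(s)
    for nodes_up in (2, 1):
        for qdisk_up in (True, False):
            print(f"nodes_up={nodes_up} qdisk_up={qdisk_up} quorate={is_quorate(s, nodes_up, qdisk_up)}")
```

Under those assumptions, 3 expected votes give a quorum of 2, so with qdiskd down node2 only stays quorate while node1 is also up.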