Hello fellow Proxmox users,

At the moment I run four Proxmox hosts in a cluster, all on version 7.4-3.

Each host has local LVM storage, which I use for the VM disks.

When I try to move a disk from one host's local LVM storage to another host's local LVM storage, the move fails with this error: `TASK ERROR: storage migration failed: no such volume group 'SSD-Local-Proxmox6'`

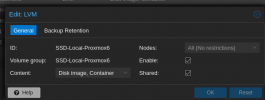

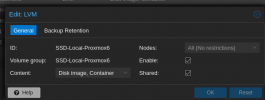

I have marked every local LVM storage I added as shared in the cluster: under Datacenter > Storage > Edit, the 'Shared' box is enabled for each one.

What am I missing here?

I was wondering if someone could clarify this.