Hi,

I have a VM on Ceph storage, but for some reason the local storage is preventing it from migrating to another node, even though the data is not on the local volume. I heard that you can restrict the local storage to just that one node (which is always the case anyway, as it is LOCAL STORAGE).

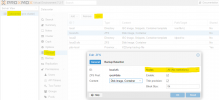

It is a local ZFS volume called pxmx_raid1_pool. How can I restrict it to just that one node? This is the system boot volume, so I can't recreate it for obvious reasons.
