I'm new to the Proxmox Forum,but i already use PVE for more than three years.I'm sure that I've read the basic docs like

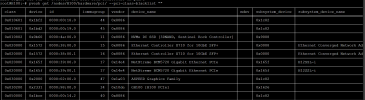

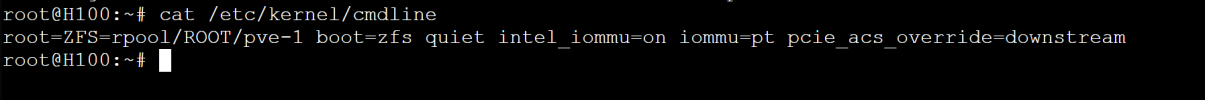

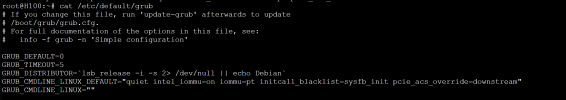

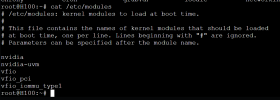

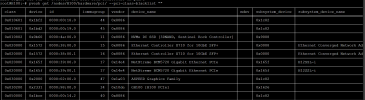

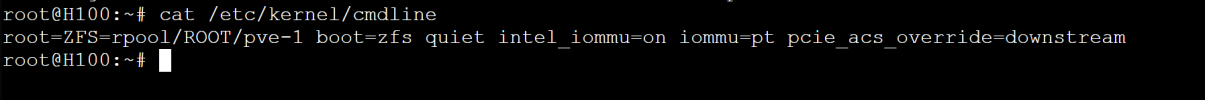

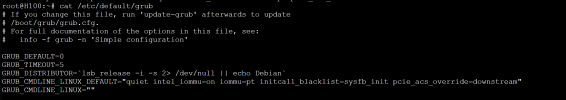

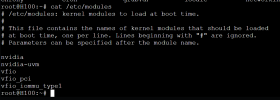

some outputs below

btw,when installing nvidia drivers on Windows VM,host machine may freeze and crash then reboot

- https://pve.proxmox.com/wiki/PCI_Passthrough#Enable_the_IOMMU

- https://pve.proxmox.com/wiki/PCI_Passthrough#Required_Modules

- https://pve.proxmox.com/wiki/PCI_Passthrough#IOMMU_Interrupt_Remapping

- https://pve.proxmox.com/wiki/PCI_Passthrough#Verify_IOMMU_Isolation

- I'm working on a beast of a server with:

- 144 x Intel(R) Xeon(R) Platinum 8452Y (2 Sockets)

- 256G ram

- H100 PCIe GPU card

some outputs below

btw,when installing nvidia drivers on Windows VM,host machine may freeze and crash then reboot
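For the "Verify IOMMU Isolation" point in the wiki links, the IOMMU grouping can also be dumped with a short script. This is a minimal sketch and is not what was run for the outputs in this post. It assumes the standard Linux sysfs layout, where each group is a numbered directory under `/sys/kernel/iommu_groups/` containing a `devices/` subdirectory of PCI addresses. The helper names `list_iommu_groups` and `shared_groups` are hypothetical.

```python
from pathlib import Path


def list_iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} read from the sysfs IOMMU tree.

    Assumes each group is a numbered directory with a devices/ subdirectory
    whose entries are named after PCI addresses (e.g. 0000:17:00.0).
    """
    groups = {}
    base = Path(root)
    if not base.is_dir():
        # No group tree: the IOMMU is likely disabled or unsupported.
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if not devices_dir.is_dir():
            continue
        groups[int(group_dir.name)] = sorted(d.name for d in devices_dir.iterdir())
    # Sort by group number so the output is stable.
    return dict(sorted(groups.items()))


def shared_groups(groups):
    """Return only the groups that contain more than one device.

    Devices in the same group cannot be isolated from one another.
    """
    return {g: devs for g, devs in groups.items() if len(devs) > 1}


if __name__ == "__main__":
    found = list_iommu_groups()
    if not found:
        print("No IOMMU groups found; check that the IOMMU is enabled")
    for group, devices in found.items():
        print(f"group {group}: {', '.join(devices)}")
```

If the GPU's PCI address appears in a group together with unrelated devices, the GPU is probably not isolated well enough for clean passthrough.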