VMID=120

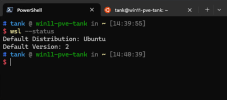

NAME=win11-wsl2

MEM=16384

CORES=8

DISK_GB=200

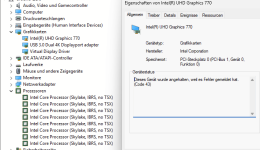

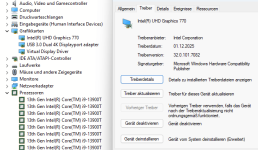

qm create $VMID --name $NAME --ostype win11 --machine pc-q35-9.2+pve1 \
  --cpu host --sockets 1 --cores $CORES --memory $MEM --agent enabled=1 \
  --scsihw virtio-scsi-single --vga qxl --bios ovmf

qm set $VMID --efidisk0 local-lvm:4,efitype=4m,pre-enrolled-keys=1

qm set $VMID --tpmstate0 local-lvm:4,version=v2.0

qm set $VMID --scsi0 Storage2:${DISK_GB},ssd=1,discard=on,iothread=1

qm set $VMID --net0 virtio,bridge=vmbr0,firewall=1

qm set $VMID --ide2 NFS:iso/Win11_24H2_English_x64.iso,media=cdrom

qm set $VMID --ide3 NFS:iso/virtio-win-0.1.271.iso,media=cdrom
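
A typo in one of the variables at the top would only surface when `qm` rejects it, possibly after some of the commands have already run. The following is a minimal sketch of a pre-flight check, not part of the original script: the helper names `is_positive_int` and `check_vm_params` are hypothetical, and the check only confirms that `VMID`, `MEM`, `CORES`, and `DISK_GB` are positive integers and that `NAME` is non-empty. It does not verify that the VMID is free or that the storages and ISO files exist.

```shell
#!/bin/sh
# Hypothetical pre-flight helpers; not part of the original script.

# Succeeds only if $1 consists solely of digits and is greater than zero.
is_positive_int() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$1" -gt 0 ]
}

# Checks the variables used by the qm commands above.
# Prints a message to stderr and returns 1 on the first problem found.
check_vm_params() {
    for var in VMID MEM CORES DISK_GB; do
        eval "val=\${$var:-}"
        if ! is_positive_int "$val"; then
            echo "error: $var must be a positive integer (got '$val')" >&2
            return 1
        fi
    done
    if [ -z "${NAME:-}" ]; then
        echo "error: NAME must not be empty" >&2
        return 1
    fi
    return 0
}
```

One way to use it would be to call `check_vm_params || exit 1` after the variable assignments and before `qm create`.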