Hey guys,

I'm trying to build a custom kernel, but whatever I do, my changes to the kernel config don't stick: the build always reverts to the default config.

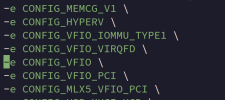

Bash:

git clone git://git.proxmox.com/git/pve-kernel.git

cd pve-kernel

nano Makefile

# I edit this line: EXTRAVERSION=-$(KREL)-pve-custom

make build-dir-fresh

# Copy custom config

cp /path/to/my/custom.config proxmox-kernel-6.11.11-1/ubuntu-kernel/.config

make deb

After this I extract the config from the .deb file, and it has reverted to the original config.

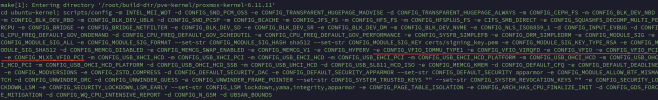

Bash:

dpkg-deb -x proxmox-kernel-6.11.11-1-pve-custom_6.11.11-1_amd64.deb extracted_kernel

find extracted_kernel -name "config-*"

Any idea how to allow for a custom config? I've also tried editing the config-6.11.11.org file before running the "make deb" command, but it always seems to revert to the original config file.
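To pin down exactly which options get reverted, the custom config can be compared against the one extracted from the .deb. Below is a minimal sketch using only standard tools; the function name `config_delta` is hypothetical (it is not part of pve-kernel), and it only looks at `CONFIG_...=` lines and `# CONFIG_... is not set` lines.

```bash
# Hypothetical helper: show option lines that differ between two kernel configs.
# Usage: config_delta WANTED_CONFIG BUILT_CONFIG
# Lines prefixed "-" appear only in WANTED_CONFIG, "+" only in BUILT_CONFIG.
config_delta() {
    wanted=$1
    built=$2
    pattern='^(CONFIG_[A-Za-z0-9_]+=|# CONFIG_[A-Za-z0-9_]+ is not set)'
    tmpdir=$(mktemp -d) || return 1

    # Keep only the option lines and sort them so comm can compare them.
    grep -E "$pattern" "$wanted" | LC_ALL=C sort > "$tmpdir/wanted"
    grep -E "$pattern" "$built"  | LC_ALL=C sort > "$tmpdir/built"

    # comm -23: lines unique to the first file; comm -13: unique to the second.
    LC_ALL=C comm -23 "$tmpdir/wanted" "$tmpdir/built" | sed 's/^/-/'
    LC_ALL=C comm -13 "$tmpdir/wanted" "$tmpdir/built" | sed 's/^/+/'

    rm -rf "$tmpdir"
}
```

For example, `config_delta /path/to/my/custom.config path/to/extracted/config-file`, where the second argument is whatever file the `find` command above prints. Empty output would mean the option lines are identical.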
