Hi everyone,

We are using a bandwidth limit, set via the Proxmox UI, for some of our customers. We discovered that some of these customers get much higher download/upload rates than they should. After some digging, we found that if you migrate a VM whose bandwidth limit is set to, say, 13 MB/s to another node, the rate limit no longer works.

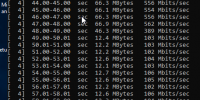

Here is an image of an iperf run. At hop 24 you can see the successful migration to another node.

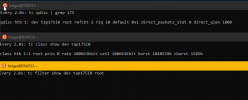

The vm.conf file on the nodes keeps the rate limit (`rate=13`), but it no longer affects the migrated machine:

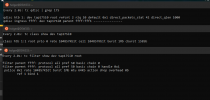
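When comparing the configured limit with iperf output, note the units: Proxmox's per-NIC `rate` option is specified in megabytes per second (MB/s), while iperf reports throughput in megabits per second (Mbit/s). The sketch below converts between the two. The function names and the 5 % tolerance are hypothetical choices for illustration, not part of Proxmox or iperf.

```python
# Proxmox's per-NIC "rate" option is in megabytes per second (MB/s);
# iperf reports throughput in megabits per second (Mbit/s).
BITS_PER_BYTE = 8


def rate_limit_to_mbit(rate_mb_per_s: float) -> float:
    """Convert a NIC rate limit given in MB/s to Mbit/s."""
    return rate_mb_per_s * BITS_PER_BYTE


def exceeds_limit(measured_mbit: float, rate_mb_per_s: float,
                  tolerance: float = 0.05) -> bool:
    """Return True if a measured iperf throughput (Mbit/s) is above the
    configured limit by more than the relative tolerance.
    The 5 % default tolerance is a hypothetical threshold."""
    return measured_mbit > rate_limit_to_mbit(rate_mb_per_s) * (1 + tolerance)


if __name__ == "__main__":
    limit = rate_limit_to_mbit(13)
    print(f"rate=13 -> about {limit:.0f} Mbit/s")
```

So with `rate=13`, iperf should report roughly 104 Mbit/s at most; readings well above that after a migration indicate that the limit is no longer being applied.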

If you make any change to the bandwidth limit, it works again.

Running on:

pve-manager/6.4-9/5f5c0e3f (running kernel: 5.4.119-1-pve)
