Following the reply from @fabian, I added a step 2 that polls the task status of the clone, but in my tests the problem is the same as before.

My operations that trigger the bug:

1. I use this API to create a VM "POST /api2/json/nodes/{node}/qemu/{vmid}/clone".

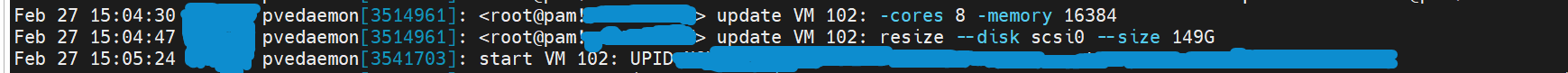

2. I poll the API "GET /api2/json/nodes/{node}/tasks/{upid}/status" to wait until the clone task status is "stopped" and the exitstatus is "OK".

3. I poll this API to wait for the VM config to be unlocked "GET /api2/json/nodes/{node}/qemu/{vmid}/status/current".

4. I use this API to set the CPU cores and RAM size of the VM "PUT /api2/json/nodes/{node}/qemu/{vmid}/config".

5. I use this API to get the disk name of this VM "GET /api2/json/nodes/{node}/qemu/{vmid}/config".

6. I call this API, with retries, to resize the disk of this VM to an absolute value "PUT /api2/json/nodes/{node}/qemu/{vmid}/resize". It seems that for a short time (about 1 - 2 minutes) after a VM is created by clone, its disk cannot be resized, so I retry the resize in this step. In my tests, the first resize attempt failed and a later attempt succeeded. The bug is: in this situation, the disk shows the new size from inside the VM, but Proxmox still reports the old size instead of the resized value in the API "GET /api2/json/nodes/{node}/qemu/{vmid}/status/current".

I think the key steps to trigger the bug are steps 1, 2 and 6, but to be cautious I have listed all of my operations here.
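For anyone who wants to reproduce steps 2 and 6, here is a minimal Python sketch of the two loops. It is not my actual client code: `wait_for_task`, `resize_with_retry`, and the `get_status` / `resize` callables are hypothetical names, and the callables are assumed to wrap the real HTTP requests (`get_status` returning the `data` object of the task status response). The timeout, retry count and delay are also just guesses.

```python
import time
from typing import Callable, Dict


def wait_for_task(
    get_status: Callable[[], Dict[str, str]],
    timeout: float = 600.0,
    interval: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Step 2: poll until the task is "stopped" with exitstatus "OK".

    get_status is a hypothetical callable wrapping
    GET /api2/json/nodes/{node}/tasks/{upid}/status.
    """
    deadline = time.monotonic() + timeout
    while True:
        data = get_status()
        if data.get("status") == "stopped":
            if data.get("exitstatus") != "OK":
                raise RuntimeError(f"task failed: {data.get('exitstatus')}")
            return
        if time.monotonic() >= deadline:
            raise TimeoutError("task did not finish in time")
        sleep(interval)


def resize_with_retry(
    resize: Callable[[], None],
    attempts: int = 10,
    delay: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Step 6: call resize() until it stops raising; return attempts used.

    resize is a hypothetical callable wrapping
    PUT /api2/json/nodes/{node}/qemu/{vmid}/resize.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            resize()
            return attempt
        except Exception as exc:  # the first try fails shortly after a clone
            last_error = exc
            if attempt < attempts:
                sleep(delay)
    raise RuntimeError(f"resize failed after {attempts} attempts") from last_error
```

In my case the bug shows up exactly when `resize_with_retry` needs more than one attempt.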

I have now found a workaround that avoids this problem:

If I need to resize the disk to 150G, I first set the absolute value "149G" using the API "PUT /api2/json/nodes/{node}/qemu/{vmid}/resize". Then I set the relative value "+1G" using the same API "PUT /api2/json/nodes/{node}/qemu/{vmid}/resize".
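As a small illustration of this two-step resize, the sketch below derives the two size values from the target. `split_resize` is a hypothetical helper name, and it only handles whole numbers with a K/M/G/T suffix, which is an assumption that covers values like "150G".

```python
import re
from typing import Tuple


def split_resize(target: str) -> Tuple[str, str]:
    """Split an absolute target size into (absolute, relative) resize values.

    Hypothetical helper: the first value is sent as an absolute size, the
    second as a relative "+1<unit>" increment, as in the workaround above.
    """
    match = re.fullmatch(r"(\d+)([KMGT])", target)
    if match is None:
        raise ValueError(f"unsupported size: {target!r}")
    value, unit = int(match.group(1)), match.group(2)
    if value < 2:
        raise ValueError("target too small to split into two steps")
    return f"{value - 1}{unit}", f"+1{unit}"


absolute, relative = split_resize("150G")
print(absolute, relative)  # 149G +1G
```

Each of the two values is then sent in its own resize request.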

Thanks to the reply from @fabian, I think this may be the best way to work around the problem:

After cloning a VM and resizing the VM disk, SSH to the Proxmox server and run the command "qm rescan --vmid <vmid>".
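If the rescan has to be automated, one way is to run it over SSH from the same script. This is only a sketch: `rescan_command` and `rescan` are hypothetical names, the host `pve1.example` is a placeholder, and I assume key-based SSH login as `root` is already set up.

```python
import subprocess
from typing import List


def rescan_command(host: str, vmid: int, user: str = "root") -> List[str]:
    """Build the ssh command line that runs "qm rescan --vmid <vmid>"."""
    # int() rejects anything that is not a plain VM ID before it reaches the shell.
    return ["ssh", f"{user}@{host}", "qm", "rescan", "--vmid", str(int(vmid))]


def rescan(host: str, vmid: int) -> None:
    """Run the rescan on the Proxmox server; raises if the command fails."""
    subprocess.run(rescan_command(host, vmid), check=True)


print(rescan_command("pve1.example", 101))
```

Calling `rescan("pve1.example", 101)` after the resize in step 6 would then refresh the size that Proxmox reports.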

This post is just to share information about this bug.
