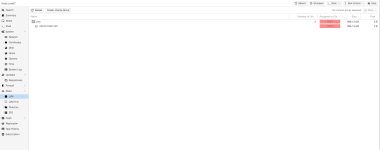

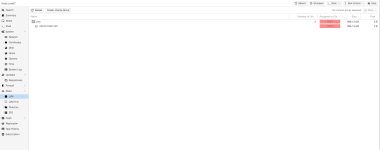

I updated one of my PVE servers from 9.0.x to 9.0.10. Since then, I see the following error whenever I select "Disks":

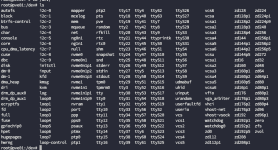

Output of pvdisplay:

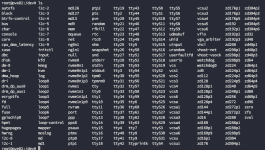

Output of lvdisplay:

I compared the /dev directory with another node which I installed and patched at the same time but on the affected node I can see also "md*" devices which are missing on the working one.

Any idea how to fix this?

Thanks!

Output of pvdisplay:

```
root@pve02:/# pvdisplay
  --- Physical volume ---
  PV Name               /dev/nvme0n1p3
  VG Name               pve
  PV Size               930.51 GiB / not usable 4.69 MiB
  Allocatable           yes (but full)
  PE Size               4.00 MiB
  Total PE              238210
  Free PE               0
  Allocated PE          238210
  PV UUID               7CrkuN-EER0-p3LT-dbEQ-dSq4-Hv47-5bbcif

  WARNING: Unrecognised segment type tier-thin-pool
  WARNING: Unrecognised segment type thick
  WARNING: PV /dev/md1 in VG vg1 is using an old PV header, modify the VG to update.
  LV vg1/tp1, segment 1 invalid: does not support flag ERROR_WHEN_FULL. for tier-thin-pool segment.
  Internal error: LV segments corrupted in tp1.
  Cannot process volume group vg1
root@pve02:/#
```

Output of lvdisplay:

```
root@pve02:/# lvdisplay
  --- Logical volume ---
  LV Path                /dev/pve/swap
  LV Name                swap
  VG Name                pve
  LV UUID                9Zd21e-qaKz-1Y3W-lvFW-pQRk-9gXN-tsQKb8
  LV Write Access        read/write
  LV Creation host, time proxmox, 2025-09-06 09:10:08 +0200
  LV Status              available
  # open                 1
  LV Size                8.00 GiB
  Current LE             2048
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           252:0

  --- Logical volume ---
  LV Path                /dev/pve/root
  LV Name                root
  VG Name                pve
  LV UUID                NpqTlX-O9IZ-eIUJ-ducf-JlfD-nIso-aUlhDa
  LV Write Access        read/write
  LV Creation host, time proxmox, 2025-09-06 09:10:08 +0200
  LV Status              available
  # open                 1
  LV Size                <922.51 GiB
  Current LE             236162
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           252:1

  WARNING: Unrecognised segment type tier-thin-pool
  WARNING: Unrecognised segment type thick
  WARNING: PV /dev/md1 in VG vg1 is using an old PV header, modify the VG to update.
  LV vg1/tp1, segment 1 invalid: does not support flag ERROR_WHEN_FULL. for tier-thin-pool segment.
  Internal error: LV segments corrupted in tp1.
  Cannot process volume group vg1
root@pve02:/#
```

I compared the /dev directory with that of another node, which I installed and patched at the same time. On the affected node I also see "md*" devices, which are missing on the working one.
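For anyone who wants to repeat the /dev comparison, here is a minimal sketch of one way to do it. The helper name `only_in_first` and the listing file names are hypothetical placeholders, and it assumes a POSIX shell with `sort`, `comm`, and `mktemp` available.

```shell
# Hypothetical helper: print the names that appear in the first
# listing file but not in the second one.
only_in_first() {
  first_sorted=$(mktemp)
  second_sorted=$(mktemp)
  sort "$1" > "$first_sorted"
  sort "$2" > "$second_sorted"
  # comm -23 keeps only the lines unique to the first (sorted) file
  comm -23 "$first_sorted" "$second_sorted"
  rm -f "$first_sorted" "$second_sorted"
}

# Example usage (file names are placeholders):
#   on the affected node:  ls /dev > dev-affected.txt
#   on the working node:   ls /dev > dev-working.txt
#   then, with both files in one place:
#   only_in_first dev-affected.txt dev-working.txt
```

With listings like mine, the "md*" entries would be expected to show up in the output, since they exist only on the affected node.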

Any idea how to fix this?

Thanks!
