The question says it all. I searched for this specific question but found nothing other than an Unraid article, and the solution there looked very "hacky".

At any rate, I currently have a Windows 10 VM that I use as my main workstation and video editing machine. Everything is working great so far, except that my NVMe write speeds appear to be slower than what I was getting with bare-metal Windows. I believe this might be a Linux driver issue, but I want to test that by passing the controller through to Windows completely. I don't use the NVMe disk/controller for anything other than Windows (my Linux containers and VMs are on a separate disk), so I don't mind dedicating it to my Windows 10 VM.

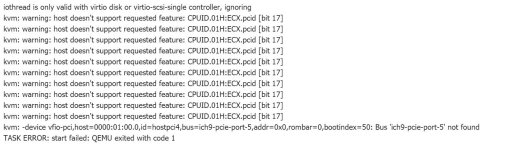

So the main question is: can I pass the NVMe controller, along with the NVMe drive, directly through to my Windows 10 VM and boot from it as my OS disk? If so, how would I do that? I don't see PCI as an option to choose as a boot disk in the VM config.

Please note, I'm not referring to just passing the disk through using disk-id. I want to pass the entire NVMe controller through. All my devices are already in separate IOMMU groups, so there is no concern there.
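
For anyone who wants to double-check the grouping on their own host, here is a minimal sketch. It assumes the standard Linux sysfs layout, where each group appears as `/sys/kernel/iommu_groups/<group>/devices/<PCI address>`. The function name `iommu_groups` is only illustrative and is not part of any tool.

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses it contains.

    `root` is assumed to follow the usual sysfs layout:
    <root>/<group number>/devices/<PCI address>
    """
    groups = {}
    base = Path(root)
    if not base.is_dir():
        # IOMMU is disabled or the kernel does not expose the groups.
        return groups
    for group_dir in base.iterdir():
        devices = group_dir / "devices"
        if group_dir.name.isdigit() and devices.is_dir():
            groups[int(group_dir.name)] = sorted(p.name for p in devices.iterdir())
    return groups


if __name__ == "__main__":
    for number, devices in sorted(iommu_groups().items()):
        print(f"group {number}: {', '.join(devices)}")
```

If the NVMe controller's PCI address is the only entry in its group, the controller can be handed to the VM without dragging other devices along with it.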
