Hello all,

First, I have a cluster of two nodes.

My Ceph runs with 6 OSDs (see image).

Today one of my OSDs is down and I can't restart it.

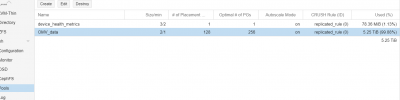

In fact, 3 months ago I replaced OSD 5 with a bigger disk (1 TB before, 3 TB now), but I see that my pool did not grow (second image).

You can see 5.25 TB, but with the new 3 TB disk I should have approximately 7. But no...

Now I have exceeded 5.25 TB, and I suppose this is why my OSD is down.
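
To make the numbers concrete, here is a rough Python sketch of the arithmetic behind my expectation, using only figures quoted in this post (the disk sizes, the 5.25 from the image, and the values from the ceph -w output). It treats TB and TiB as the same unit, which is an assumption, and it does not model replication or how Ceph distributes data across OSDs.

```python
# Figures quoted in this post. TB and TiB are treated as the same unit (assumption).
old_disk_tb = 1.0            # size of OSD 5 before the swap
new_disk_tb = 3.0            # size of OSD 5 after the swap
pool_size_before_tb = 5.25   # size shown in the second image

# Naive expectation: the size grows by the difference between the two disks.
expected_tb = pool_size_before_tb + (new_disk_tb - old_disk_tb)

# Raw usage as reported by ceph -w: 5.3 TiB used out of 9.2 TiB.
used_tib = 5.3
total_tib = 9.2
raw_usage_pct = 100 * used_tib / total_tib

# Degraded objects as reported by ceph -w.
degraded_objects = 861538
total_object_copies = 2249117
degraded_pct = 100 * degraded_objects / total_object_copies

print(f"expected size after swap: {expected_tb:.2f} TB")
print(f"raw usage: {raw_usage_pct:.1f} %")
print(f"degraded: {degraded_pct:.3f} %")
```

The first value is where my "approximately 7" comes from. The other two only restate the ceph -w output as percentages: the cluster as a whole is a little over half full, even though two individual OSDs are reported as backfillfull.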

The command ceph -w gives me this:

Code:

      cluster:
        id:     112e2652-f242-4b6d-857f-ccee86a01041
        health: HEALTH_WARN
                nodown flag(s) set
                2 backfillfull osd(s)
                Low space hindering backfill (add storage if this doesn't resolve itself): 95 pgs backfill_toofull
                Degraded data redundancy: 861538/2249117 objects degraded (38.306%), 99 pgs degraded, 99 pgs undersized
                99 pgs not deep-scrubbed in time
                99 pgs not scrubbed in time
                1 pool(s) do not have an application enabled
                2 pool(s) backfillfull

      services:
        mon: 2 daemons, quorum bsg-galactica,bsg-pegasus (age 50m)
        mgr: bsg-galactica(active, since 3w)
        osd: 6 osds: 5 up (since 48m), 5 in (since 18m); 95 remapped pgs
             flags nodown

      data:
        pools:   2 pools, 129 pgs
        objects: 1.12M objects, 4.3 TiB
        usage:   5.3 TiB used, 4.0 TiB / 9.2 TiB avail
        pgs:     861538/2249117 objects degraded (38.306%)
                 95 active+undersized+degraded+remapped+backfill_toofull
                 30 active+clean
                 4  active+undersized+degraded

My question is: can I remove the down OSD, destroy it and recreate it without losing data?

Or how can I restart the OSD to make a backup and then start again from zero?

Thanks