Hi everyone,

I am going in circles with my PBS system, which does not deliver the performance it should have on paper when processing real VM backups.

It seems that I can't get any backup from my PVE system to run faster than 1Gbps, even though the network cards on both machines are 10Gbps and a network speed test shows that the link works as expected:

root@proxmox:~# iperf -c 192.168.200.213

------------------------------------------------------------

Client connecting to 192.168.200.213, TCP port 5001

TCP window size: 16.0 KByte (default)

------------------------------------------------------------

[ 1] local 192.168.200.210 port 38270 connected with 192.168.200.213 port 5001 (icwnd/mss/irtt=14/1448/153)

[ ID] Interval Transfer Bandwidth

[ 1] 0.0000-10.0146 sec 9.75 GBytes 8.36 Gbits/sec

For the record, a backup was running at around 1Gbps during this test, as stated above, so the link would hypothetically have reached about 9.30Gbits/s without it. Fair enough, I guess.
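To double-check the iperf figures, here is a minimal Python sketch of the arithmetic. It assumes that iperf reports transfer sizes in binary units (1 GByte = 2^30 bytes) and bandwidth in decimal units (1 Gbit = 10^9 bits), which is consistent with the output shown; the function name is only illustrative.

```python
GIB = 2**30  # bytes per "GByte" in the iperf transfer column (assumed binary units)

def iperf_gbits_per_sec(gbytes: float, seconds: float) -> float:
    """Convert an iperf transfer size and interval into decimal Gbits/sec."""
    total_bits = gbytes * GIB * 8
    return total_bits / seconds / 1e9

# Values taken from the iperf summary line: 9.75 GBytes in 10.0146 sec
measured = iperf_gbits_per_sec(9.75, 10.0146)
print(f"iperf throughput: {measured:.2f} Gbits/sec")
```

The result should match the bandwidth column of the iperf summary, which suggests the raw link itself is not what caps the backups at around 1Gbps.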

The PVE system runs on an HP ML350 Gen 9 server with the following specifications:

2x Intel Xeon E5-2650L V4 @ 1.7GHz (14 cores with hyperthreading)

256GB of RAM PC4 2400MHz ECC

HP P840ar in HBA mode

4x SSD Samsung PM1633a SAS 7.68TB in RAID 10 (block size 64K)

12x HDD WD DC H520 SAS 4Kn 14TB in RAIDZ-2 with 2 vdevs (block size 128K)

SMART status shows no issues on any drive

10Gbps network is an HP 546SFP+ adapter

The PBS system runs on a Dell R730xd with the following specifications:

1x Intel Xeon E5-2640 V4 @ 2.4GHz (10 cores plus hyperthreading)

256GB of RAM PC4 2400MHz ECC

I tried a Dell Perc H730p mini in HBA mode and an HBA300 without seeing any difference; I kept running it on the HBA300

2x SSD 128GB in RAID 1 for the OS

16x 12TB HGST SAS in RAIDZ-2, also with 2 vdevs, plus 2 NVMe SSDs as a mirrored special device (check screenshots) for metadata and small files

10Gbps network is the embedded daughter card Dell X540+I350 (2x 10GbE and 2x 1GbE)

I have the impression that I am running a pretty decent setup with enterprise-grade equipment.

I ran the proxmox-backup-client benchmark on both the PBS host and the PVE host to compare results. Here is what I got:

From PVE:

root@proxmox:~# proxmox-backup-client benchmark --repository 192.168.***.***:******

Uploaded 340 chunks in 5 seconds.

Time per request: 14825 microseconds.

┌───────────────────────────────────┬───────────────────┐

│ Name │ Value │

╞═══════════════════════════════════╪═══════════════════╡

│ TLS (maximal backup upload speed) │ 282.91 MB/s (23%) │

├───────────────────────────────────┼───────────────────┤

│ SHA256 checksum computation speed │ 249.45 MB/s (12%) │

├───────────────────────────────────┼───────────────────┤

│ ZStd level 1 compression speed │ 268.12 MB/s (36%) │

├───────────────────────────────────┼───────────────────┤

│ ZStd level 1 decompression speed │ 425.15 MB/s (35%) │

├───────────────────────────────────┼───────────────────┤

│ Chunk verification speed │ 170.31 MB/s (22%) │

├───────────────────────────────────┼───────────────────┤

│ AES256 GCM encryption speed │ 866.01 MB/s (24%) │

└───────────────────────────────────┴───────────────────┘

From PBS itself:

root@pbs:~# proxmox-backup-client benchmark --repository *******

Uploaded 340 chunks in 5 seconds.

Time per request: 14772 microseconds.

┌───────────────────────────────────┬────────────────────┐

│ Name │ Value │

╞═══════════════════════════════════╪════════════════════╡

│ TLS (maximal backup upload speed) │ 283.92 MB/s (23%) │

├───────────────────────────────────┼────────────────────┤

│ SHA256 checksum computation speed │ 254.57 MB/s (13%) │

├───────────────────────────────────┼────────────────────┤

│ ZStd level 1 compression speed │ 331.72 MB/s (44%) │

├───────────────────────────────────┼────────────────────┤

│ ZStd level 1 decompression speed │ 480.07 MB/s (40%) │

├───────────────────────────────────┼────────────────────┤

│ Chunk verification speed │ 225.55 MB/s (30%) │

├───────────────────────────────────┼────────────────────┤

│ AES256 GCM encryption speed │ 1084.96 MB/s (30%) │

└───────────────────────────────────┴────────────────────┘

The values look similar, which I presume excludes any network issue from the equation.

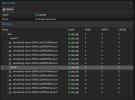

When I run a backup job, as you can see in the attachment, I am at around 80-90Mbps.

I thought that adding a mirrored special device on NVMe SSDs would solve my problem, so I reconfigured my RAIDZ-2 pool to include it, but it did not change anything at all. I haven't seen any difference in performance, even though I can see data being written to the special device.

In the screenshots you can see that IO delay is very low (it never goes above 1%), PVE bandwidth is quite steady at around 90Mbps (the peak was probably a VM doing something), and CPU load is negligible. By the way, backing up a VM whose storage is on the RAID-10 SSDs does not perform any better. Another attached screenshot shows a 64GB image with a reported backup speed of 260MB/s, but I never saw the bandwidth go above 1Gbps, which matches the write bandwidth that never goes above 100MB/s.
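Since the figures above mix MB/s and Gbps, here is a small Python sketch that puts them on the same scale. It assumes decimal units throughout (1 MB = 10^6 bytes, 1 Gbit = 10^9 bits); whether the benchmark and the task log use exactly these units is an assumption, and the function names are only illustrative.

```python
def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert MB/s (decimal megabytes) to Gbit/s."""
    return mb_per_s * 8 / 1000

def gbits_to_mbytes(gbit_per_s: float) -> float:
    """Convert Gbit/s to MB/s (decimal megabytes)."""
    return gbit_per_s * 1000 / 8

# Figures quoted in this post
figures = {
    "TLS benchmark from PVE": 282.91,
    "reported speed of the 64GB backup": 260.0,
    "observed write bandwidth ceiling": 100.0,
}

for name, mb in figures.items():
    print(f"{name}: {mb:.2f} MB/s = {mbytes_to_gbits(mb):.2f} Gbit/s")

# Theoretical ceilings of a 1Gbps and a 10Gbps link, ignoring protocol overhead
for link in (1.0, 10.0):
    print(f"{link:.0f} Gbit/s link = {gbits_to_mbytes(link):.0f} MB/s")
```

Under these assumptions, a ceiling of about 100MB/s sits just below what a 1Gbps link can carry, while the TLS benchmark figure is only a bit more than twice that, far from what a 10Gbps link allows.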

I really don't know where this limitation could come from. Can someone give me a hint?
