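I have a Windows Server VM that crashes every few weeks.

The VirtIO guest agent and drivers were installed at version 0.1.252 and are now at 0.1.262 (the version in this log).

The memory dump shows a KERNEL_DATA_INPAGE_ERROR (0x7a) with error status 0xC0000185, attributed to vioscsi.sys; the full `!analyze -v` output is further down.

There are other servers on this host without any issues.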

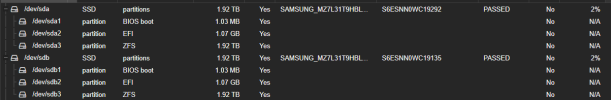

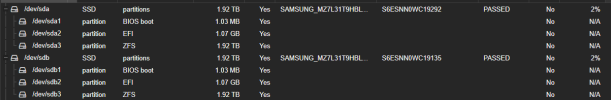

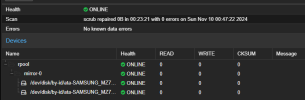

The disk itself looks good:

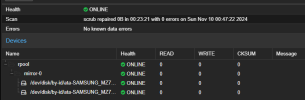

ZFS also reports no errors:

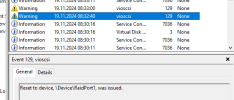

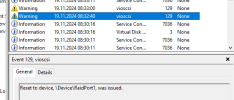

On Windows, I noticed some warnings.

This is just a feeling, but I think the crashes are related to the ZFS replication.
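One way to test that feeling would be to compare the crash times with the replication windows. The following is only a rough sketch: the function name, the five-minute slack, and the timestamps are all placeholders I made up for illustration, and the real values would have to be copied from the Windows event log and the host's replication log.

```python
from datetime import datetime, timedelta


def crashes_near_replication(crashes, replication_runs, slack=timedelta(minutes=5)):
    """Return the crash times that fall inside any (start, end) replication
    window, with each window widened by `slack` on both sides."""
    hits = []
    for crash in crashes:
        for start, end in replication_runs:
            if start - slack <= crash <= end + slack:
                hits.append(crash)
                break  # one matching window is enough for this crash
    return hits


# Placeholder values only, NOT taken from the real logs.
crashes = [datetime(2000, 1, 1, 2, 3), datetime(2000, 1, 2, 14, 0)]
runs = [(datetime(2000, 1, 1, 2, 0), datetime(2000, 1, 1, 2, 10))]

for hit in crashes_near_replication(crashes, runs):
    print("crash during replication window:", hit.isoformat())
```

If most crashes land inside a replication window, that would support the suspicion; if they are spread evenly, replication is probably not the trigger.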

Code:

9: kd> !analyze -v

*******************************************************************************

* *

* Bugcheck Analysis *

* *

*******************************************************************************

KERNEL_DATA_INPAGE_ERROR (7a)

The requested page of kernel data could not be read in. Typically caused by

a bad block in the paging file or disk controller error. Also see

KERNEL_STACK_INPAGE_ERROR.

If the error status is 0xC000000E, 0xC000009C, 0xC000009D or 0xC0000185,

it means the disk subsystem has experienced a failure.

If the error status is 0xC000009A, then it means the request failed because

a filesystem failed to make forward progress.

Arguments:

Arg1: fffff3eb066f3340, lock type that was held (value 1,2,3, or PTE address)

Arg2: ffffffffc0000185, error status (normally i/o status code)

Arg3: 0000200001e91be0, current process (virtual address for lock type 3, or PTE)

Arg4: ffffd60cde668000, virtual address that could not be in-paged (or PTE contents if arg1 is a PTE address)

Debugging Details:

------------------

Unable to load image \SystemRoot\System32\drivers\vioscsi.sys, Win32 error 0n2

KEY_VALUES_STRING: 1

Key : Analysis.CPU.mSec

Value: 1218

Key : Analysis.Elapsed.mSec

Value: 2406

Key : Analysis.IO.Other.Mb

Value: 5

Key : Analysis.IO.Read.Mb

Value: 1

Key : Analysis.IO.Write.Mb

Value: 29

Key : Analysis.Init.CPU.mSec

Value: 109

Key : Analysis.Init.Elapsed.mSec

Value: 19067

Key : Analysis.Memory.CommitPeak.Mb

Value: 98

Key : Analysis.Version.DbgEng

Value: 10.0.27725.1000

Key : Analysis.Version.Description

Value: 10.2408.27.01 amd64fre

Key : Analysis.Version.Ext

Value: 1.2408.27.1

Key : Bugcheck.Code.KiBugCheckData

Value: 0x7a

Key : Bugcheck.Code.LegacyAPI

Value: 0x7a

Key : Bugcheck.Code.TargetModel

Value: 0x7a

Key : Failure.Bucket

Value: 0x7a_c0000185_DUMP_VIOSCSI

Key : Failure.Hash

Value: {f5096b16-2043-7702-792c-bfca7413f754}

Key : Hypervisor.Enlightenments.Value

Value: 16752

Key : Hypervisor.Enlightenments.ValueHex

Value: 4170

Key : Hypervisor.Flags.AnyHypervisorPresent

Value: 1

Key : Hypervisor.Flags.ApicEnlightened

Value: 1

Key : Hypervisor.Flags.ApicVirtualizationAvailable

Value: 0

Key : Hypervisor.Flags.AsyncMemoryHint

Value: 0

Key : Hypervisor.Flags.CoreSchedulerRequested

Value: 0

Key : Hypervisor.Flags.CpuManager

Value: 0

Key : Hypervisor.Flags.DeprecateAutoEoi

Value: 0

Key : Hypervisor.Flags.DynamicCpuDisabled

Value: 0

Key : Hypervisor.Flags.Epf

Value: 0

Key : Hypervisor.Flags.ExtendedProcessorMasks

Value: 1

Key : Hypervisor.Flags.HardwareMbecAvailable

Value: 0

Key : Hypervisor.Flags.MaxBankNumber

Value: 0

Key : Hypervisor.Flags.MemoryZeroingControl

Value: 0

Key : Hypervisor.Flags.NoExtendedRangeFlush

Value: 1

Key : Hypervisor.Flags.NoNonArchCoreSharing

Value: 0

Key : Hypervisor.Flags.Phase0InitDone

Value: 1

Key : Hypervisor.Flags.PowerSchedulerQos

Value: 0

Key : Hypervisor.Flags.RootScheduler

Value: 0

Key : Hypervisor.Flags.SynicAvailable

Value: 1

Key : Hypervisor.Flags.UseQpcBias

Value: 0

Key : Hypervisor.Flags.Value

Value: 536745

Key : Hypervisor.Flags.ValueHex

Value: 830a9

Key : Hypervisor.Flags.VpAssistPage

Value: 1

Key : Hypervisor.Flags.VsmAvailable

Value: 0

Key : Hypervisor.RootFlags.AccessStats

Value: 0

Key : Hypervisor.RootFlags.CrashdumpEnlightened

Value: 0

Key : Hypervisor.RootFlags.CreateVirtualProcessor

Value: 0

Key : Hypervisor.RootFlags.DisableHyperthreading

Value: 0

Key : Hypervisor.RootFlags.HostTimelineSync

Value: 0

Key : Hypervisor.RootFlags.HypervisorDebuggingEnabled

Value: 0

Key : Hypervisor.RootFlags.IsHyperV

Value: 0

Key : Hypervisor.RootFlags.LivedumpEnlightened

Value: 0

Key : Hypervisor.RootFlags.MapDeviceInterrupt

Value: 0

Key : Hypervisor.RootFlags.MceEnlightened

Value: 0

Key : Hypervisor.RootFlags.Nested

Value: 0

Key : Hypervisor.RootFlags.StartLogicalProcessor

Value: 0

Key : Hypervisor.RootFlags.Value

Value: 0

Key : Hypervisor.RootFlags.ValueHex

Value: 0

Key : SecureKernel.HalpHvciEnabled

Value: 0

Key : WER.DumpDriver

Value: DUMP_VIOSCSI

Key : WER.OS.Branch

Value: fe_release_svc_prod2

Key : WER.OS.Version

Value: 10.0.20348.859

BUGCHECK_CODE: 7a

BUGCHECK_P1: fffff3eb066f3340

BUGCHECK_P2: ffffffffc0000185

BUGCHECK_P3: 200001e91be0

BUGCHECK_P4: ffffd60cde668000

FILE_IN_CAB: MEMORY.DMP

FAULTING_THREAD: ffffdb08d0144040

ERROR_CODE: (NTSTATUS) 0xc0000185 - The I/O device reported an I/O error.

IMAGE_NAME: vioscsi.sys

MODULE_NAME: vioscsi

FAULTING_MODULE: fffff80133220000 vioscsi

DISK_HARDWARE_ERROR: There was error with disk hardware

BLACKBOXBSD: 1 (!blackboxbsd)

BLACKBOXNTFS: 1 (!blackboxntfs)

BLACKBOXPNP: 1 (!blackboxpnp)

BLACKBOXWINLOGON: 1

PROCESS_NAME: System

STACK_TEXT:

ffffd60c`db9626d8 fffff801`308be399 : 00000000`0000007a fffff3eb`066f3340 ffffffff`c0000185 00002000`01e91be0 : nt!KeBugCheckEx

ffffd60c`db9626e0 fffff801`306f87e5 : ffffd60c`00000000 ffffd60c`db962800 ffffd60c`db962838 fffff3f9`00000000 : nt!MiWaitForInPageComplete+0x1c5039

ffffd60c`db9627e0 fffff801`306e9a9d : 00000000`c0033333 00000000`00000000 ffffd60c`de668000 ffffd60c`de668000 : nt!MiIssueHardFault+0x1d5

ffffd60c`db962890 fffff801`307508a1 : 00000000`00000000 00000000`00000000 ffffdb08`e1050080 00000000`00000000 : nt!MmAccessFault+0x35d

ffffd60c`db962a30 fffff801`30751ed2 : ffffd60c`00000000 00000000`00000000 ffffdb08`e1050080 00000000`00000000 : nt!MiInPageSingleKernelStack+0x28d

ffffd60c`db962c80 fffff801`307c59ea : 00000000`00000000 00000000`00000000 fffff801`307c5900 fffff801`3105ed00 : nt!KiInSwapKernelStacks+0x4e

ffffd60c`db962cf0 fffff801`306757d5 : ffffdb08`d0144040 fffff801`307c5970 fffff801`3105ed00 a350a348`a340a338 : nt!KeSwapProcessOrStack+0x7a

ffffd60c`db962d30 fffff801`30825548 : ffff9180`893c5180 ffffdb08`d0144040 fffff801`30675780 a4b0a4a8`a4a0a498 : nt!PspSystemThreadStartup+0x55

ffffd60c`db962d80 00000000`00000000 : ffffd60c`db963000 ffffd60c`db95d000 00000000`00000000 00000000`00000000 : nt!KiStartSystemThread+0x28

STACK_COMMAND: .process /r /p 0xffffdb08d00c8080; .thread 0xffffdb08d0144040 ; kb

FAILURE_BUCKET_ID: 0x7a_c0000185_DUMP_VIOSCSI

OS_VERSION: 10.0.20348.859

BUILDLAB_STR: fe_release_svc_prod2

OSPLATFORM_TYPE: x64

OSNAME: Windows 10

FAILURE_ID_HASH: {f5096b16-2043-7702-792c-bfca7413f754}

Followup: MachineOwner

---------
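For reference, Arg2 in the dump above is the NTSTATUS value sign-extended to 64 bits. Below is a minimal sketch for decoding it, assuming only the five status codes that the bugcheck description itself lists; the symbolic names are the ones from `ntstatus.h`, and the helper name `decode_arg2` is made up for this example.

```python
# NTSTATUS values named in the bugcheck 0x7A description, mapped to their
# symbolic names from ntstatus.h.
DISK_STATUS = {
    0xC000000E: "STATUS_NO_SUCH_DEVICE",
    0xC000009A: "STATUS_INSUFFICIENT_RESOURCES",
    0xC000009C: "STATUS_DEVICE_DATA_ERROR",
    0xC000009D: "STATUS_DEVICE_NOT_CONNECTED",
    0xC0000185: "STATUS_IO_DEVICE_ERROR",
}


def decode_arg2(arg2_hex: str) -> tuple[int, str]:
    """Return (32-bit NTSTATUS, symbolic name) for a 64-bit Arg2 hex string."""
    status = int(arg2_hex, 16) & 0xFFFFFFFF  # drop the sign extension
    return status, DISK_STATUS.get(status, "unknown")


status, name = decode_arg2("ffffffffc0000185")
print(f"0x{status:08X} {name}")
```

For the value in this dump, that gives `STATUS_IO_DEVICE_ERROR`, which is one of the codes the description ties to a failure in the disk subsystem rather than in the filesystem.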