I just want to describe my experience running Docker in LXC containers under Proxmox. Maybe someone has ideas for improvement, or maybe my description will be helpful to others.

Goals:

1. Use the ease of Docker to install and maintain applications.

2. Centrally monitor and manage all running applications.

3. Separate data from application code, thus simplifying data backup.

4. Provide basic fallback functionality when some nodes are down for maintenance.

Systems:

- Most services run in a private home environment consisting of one HP Microserver Gen 8 and a fallback machine. In addition, some services run on small ARM-based nodes (RPis, NanoPis or RockPis).

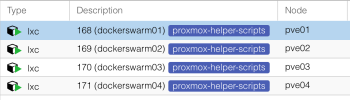

- The Microserver and the fallback machine run Proxmox 6.3 on ZFS. They mostly host classic LXC CTs (about 10), which will be migrated to Docker containers; the Docker hosts themselves will run in a few separate LXC CTs.

- The small ARM-based edge nodes run Docker directly on lightweight OSs, without an additional LXC layer.

Implementation on Proxmox:

- All LXC CTs run a stripped-down Debian Buster. Docker CE is installed via get-docker.sh. All Docker hosts run as root, but use userns-remap for their containers.

- The Proxmox host loads two additional kernel modules: aufs and ip_vs.

- Unprivileged LXC CT with nesting=1, keyctl=1: unsuccessful. There are two main issues I couldn't resolve:

- a.) The only available storage driver is VFS (overlay is not possible on ZFS, and aufs is not possible in a non-init namespace). After downloading any Docker image, the storage driver reports a permission error while storing the image layers. I have no idea why. Completely deleting the contents of /var/lib/docker didn't help.

- b.) I haven't managed to get the /proc/sys/net/ipv4/vs/ folder populated in the LXC container; it is always empty. I tried keeping all capabilities, using an unconfined AppArmor profile, mounting /proc as rw, adding /.dockerenv, and everything else I found on the net. No luck. Without this folder, no ingress network is possible.

- Privileged LXC CT with nesting=1: works perfectly so far, except for an issue setting up the iptables entries for the ingress network.

- Working fix: add "lxc.mount.auto: proc:rw" to the CT's LXC config file. I haven't found any other way yet.
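The userns-remap setting mentioned above lives in Docker's daemon configuration. Here is a minimal Python sketch that merges it into an existing config instead of overwriting it. It assumes the default config path /etc/docker/daemon.json and the value "default", which makes dockerd create and use a "dockremap" user. The helper name `enable_userns_remap` is hypothetical.

```python
import json
from pathlib import Path

# Assumed default location of the Docker daemon config on Debian.
DAEMON_JSON = Path("/etc/docker/daemon.json")


def enable_userns_remap(path: Path = DAEMON_JSON, remap: str = "default") -> dict:
    """Set "userns-remap" in daemon.json while keeping any other keys.

    With "default", dockerd creates and uses the "dockremap" user/group.
    The daemon has to be restarted for the change to take effect.
    """
    config = {}
    # Load the existing config, if there is a non-empty one.
    if path.exists() and path.read_text().strip():
        config = json.loads(path.read_text())
    config["userns-remap"] = remap
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

Run it as root inside each Docker CT, for example `enable_userns_remap()`, and then restart the daemon (e.g. `systemctl restart docker`). Merging rather than overwriting keeps settings such as a storage driver or log driver that may already be in the file.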
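LXC containers share the host kernel, so modules such as aufs and ip_vs from the list above have to be loaded on the Proxmox host, not inside the CT. The following small Python sketch checks /proc/modules text for those two modules. The helper names are hypothetical. A module compiled directly into the kernel does not show up in /proc/modules, so it would be reported as missing even though it is available.

```python
from __future__ import annotations

# Modules the post loads on the Proxmox host.
REQUIRED_MODULES = ("aufs", "ip_vs")


def loaded_modules(proc_modules: str) -> set[str]:
    """Return module names from /proc/modules-formatted text (first field per line)."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


def missing_modules(proc_modules: str, required=REQUIRED_MODULES) -> list[str]:
    """List the required modules that do not appear in the given /proc/modules text."""
    loaded = loaded_modules(proc_modules)
    return [name for name in required if name not in loaded]
```

On the host, `missing_modules(open("/proc/modules").read())` should return an empty list. To load the modules at every boot on the Debian-based host, their names can be listed in /etc/modules.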
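Docker's ingress network (the swarm routing mesh) relies on IPVS, which is why the empty /proc/sys/net/ipv4/vs/ folder from issue b.) blocks it. One plausible explanation, worth verifying against your kernel source: IPVS does not register its sysctls in network namespaces owned by a non-initial user namespace. That would match the unprivileged vs. privileged difference described above. The Python sketch below is a quick check to run inside a CT. The helper name `ipvs_sysctls` is hypothetical.

```python
from pathlib import Path

# Directory the post needs populated for the ingress network.
IPVS_SYSCTL_DIR = Path("/proc/sys/net/ipv4/vs")


def ipvs_sysctls(path: Path = IPVS_SYSCTL_DIR) -> list:
    """Return the sorted entry names of the IPVS sysctl directory.

    An empty list means the directory is missing, empty, or unreadable.
    """
    if not path.is_dir():
        return []
    try:
        return sorted(entry.name for entry in path.iterdir())
    except PermissionError:
        return []
```

In a CT where ingress works, `ipvs_sysctls()` should return a non-empty list. In the unprivileged CT described above it would return `[]`.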
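The working fix above adds a raw LXC key to the CT's config. On Proxmox that file is /etc/pve/lxc/<CTID>.conf, and snapshots are stored in "[name]" sections after the main section. Simply appending the line could therefore place it inside a snapshot section. The Python sketch below inserts the line before the first section header and skips the change if the line is already there. The helper names are hypothetical. Restart the CT afterwards.

```python
from pathlib import Path

MOUNT_LINE = "lxc.mount.auto: proc:rw"


def add_raw_lxc_line(conf_text: str, line: str = MOUNT_LINE) -> str:
    """Return conf_text with `line` in the main section (unchanged if already there)."""
    lines = conf_text.splitlines()
    # The main section ends at the first "[...]" header (snapshots, pending changes).
    end_main = next((i for i, ln in enumerate(lines) if ln.startswith("[")), len(lines))
    if line in (ln.strip() for ln in lines[:end_main]):
        return conf_text
    # Step back over blank lines so the new key sits with the other main-section keys.
    insert_at = end_main
    while insert_at > 0 and not lines[insert_at - 1].strip():
        insert_at -= 1
    lines.insert(insert_at, line)
    return "\n".join(lines) + "\n"


def patch_ct_config(ctid: int, conf_dir: Path = Path("/etc/pve/lxc")) -> bool:
    """Apply add_raw_lxc_line to <conf_dir>/<ctid>.conf; return True if the file changed."""
    path = conf_dir / f"{ctid}.conf"
    old = path.read_text()
    new = add_raw_lxc_line(old)
    if new != old:
        path.write_text(new)
    return new != old
```

For example, `patch_ct_config(101)` run as root on the host would patch the CT with ID 101 (a placeholder ID). Calling it a second time leaves the file unchanged.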

Well, so far I am happy that it works. If anyone has a better working config, I would be interested to hear about it.

Michael