
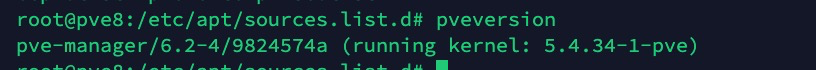

After reinstalling PVE (OSDs reused), Ceph OSDs can't start

- Thread starter hrghope



My OSD metadata; maybe something is missing:

root@pve8:/usr/lib/systemd/system/ceph-osd@.service.d# ceph osd metadata

[

{

"id": 0

},

{

"id": 1

},

{

"id": 2

},

{

"id": 3

},

{

"id": 4

}

]

ceph-volume raw list seems OK:

root@pve8:/usr/lib/systemd/system/ceph-osd@.service.d# ceph-volume raw list

{

"0": {

"ceph_fsid": "856cb359-a991-46b3-9468-a057d3e78d7c",

"device": "/dev/mapper/ceph--006b38e4--3351--4c04--91e6--edf1740516d2-osd--block--f22a1e72--b34d--4c97--b289--e878b4b09b91",

"osd_id": 0,

"osd_uuid": "f22a1e72-b34d-4c97-b289-e878b4b09b91",

"type": "bluestore"

},

"1": {

"ceph_fsid": "856cb359-a991-46b3-9468-a057d3e78d7c",

"device": "/dev/mapper/ceph--f5f20107--fa7f--4fef--9306--fa2b2ce664b8-osd--block--26375834--a615--4a77--8ad6--8accd4e92010",

"osd_id": 1,

"osd_uuid": "26375834-a615-4a77-8ad6-8accd4e92010",

"type": "bluestore"

}

}

Here is something that may help:

mon.pve (mon.0) 79 : cluster [WRN] Health check update: 5 slow ops, oldest one blocked for 125 sec, daemons [mon.pve,mon.pve8] have slow ops. (SLOW_OPS)

{

"description": "osd_boot(osd.0 booted 0 features 4611087854035861503 v3268)",

"initiated_at": "2021-01-23 09:56:51.649375",

"age": 1229.1620785550001,

"duration": 1229.1624290279999,

"type_data": {

"events": [

{

"time": "2021-01-23 09:56:51.649375",

"event": "initiated"

},

{

"time": "2021-01-23 09:56:51.649375",

"event": "header_read"

},

{

"time": "2021-01-23 09:56:51.649372",

"event": "throttled"

},

{

"time": "2021-01-23 09:56:51.649419",

"event": "all_read"

},

{

"time": "2021-01-23 09:56:51.649458",

"event": "dispatched"

},

{

"time": "2021-01-23 09:56:51.649459",

"event": "mon:_ms_dispatch"

},

{

"time": "2021-01-23 09:56:51.649460",

"event": "mon:dispatch_op"

},

{

"time": "2021-01-23 09:56:51.649460",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:51.649460",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:51.649460",

"event": "osdmap:wait_for_finished_proposal"

},

{

"time": "2021-01-23 09:56:52.136063",

"event": "callback retry"

},

{

"time": "2021-01-23 09:56:52.136063",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:52.136064",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:52.136064",

"event": "osdmap:wait_for_finished_proposal"

},

{

"time": "2021-01-23 09:56:52.136120",

"event": "callback retry"

},

{

"time": "2021-01-23 09:56:52.136120",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:52.136120",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:52.136120",

"event": "osdmap:wait_for_finished_proposal"

},

{

"time": "2021-01-23 09:56:52.136873",

"event": "callback retry"

},

{

"time": "2021-01-23 09:56:52.136873",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:52.136873",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:52.136880",

"event": "osdmap:wait_for_finished_proposal"

},

{

"time": "2021-01-23 09:56:52.138946",

"event": "callback retry"

},

{

"time": "2021-01-23 09:56:52.138946",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:52.138946",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:52.138946",

"event": "osdmap:wait_for_finished_proposal"

},

{

"time": "2021-01-23 09:56:52.139483",

"event": "callback retry"

},

{

"time": "2021-01-23 09:56:52.139484",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:52.139484",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:52.139484",

"event": "osdmap:wait_for_finished_proposal"

},

{

"time": "2021-01-23 09:56:52.143906",

"event": "callback finished"

},

{

"time": "2021-01-23 09:56:52.143906",

"event": "psvc:dispatch"

},

{

"time": "2021-01-23 09:56:52.143906",

"event": "osdmap:wait_for_readable"

},

{

"time": "2021-01-23 09:56:52.143906",

"event": "osdmap:wait_for_finished_proposal"

}

],

"info": {

"seq": 123,

"src_is_mon": false,

"source": "osd.0 v2:192.168.3.8:6808/13167",

"forwarded_to_leader": false

}

}

}

],

"num_ops": 5

}

root@pve:/etc/pve# ceph daemon osd.3 status

{

"cluster_fsid": "856cb359-a991-46b3-9468-a057d3e78d7c",

"osd_fsid": "7bd4adc8-e750-49f3-b729-16376edebcc6",

"whoami": 3,

"state": "booting",

"oldest_map": 2652,

"newest_map": 3263,

"num_pgs": 120

}
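Both the MON ops dump and the admin-socket status show OSDs stuck in "booting". To collect that status from every OSD on a node in one go, a small loop over the admin sockets can help. This is a sketch: it assumes the default socket directory /var/run/ceph and the usual ceph-osd.<id>.asok naming, and `run` is a hypothetical helper that only prints the commands unless DRY_RUN=0 is set.

```bash
#!/usr/bin/env bash
# Sketch: query "status" on every OSD admin socket of this node.
# Assumption: sockets live in /var/run/ceph as ceph-osd.<id>.asok.

# Hypothetical helper: print the command (default) or, with DRY_RUN=0, execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# osd_status_all [SOCK_DIR]
osd_status_all() {
    local dir=${1:-/var/run/ceph} sock
    for sock in "$dir"/ceph-osd.*.asok; do
        # skip the unexpanded pattern when no socket exists
        [ -e "$sock" ] || continue
        # "ceph daemon" accepts a socket path instead of a daemon name
        run ceph daemon "$sock" status
    done
}
```

Running `DRY_RUN=0 osd_status_all` on pve8 prints one status object per OSD; the newest_map values can then be compared with the current osdmap epoch shown in the first line of `ceph osd dump`.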


By re-installation, do you mean all nodes at once? If so, then the MONs are new and have no knowledge about the PGs or OSDs. You will need to recover the maps from the OSDs. And if I am not mistaken, the auth keys need to be replaced as well.
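The Ceph monitor troubleshooting docs describe this as "recovery using OSDs". Below is a minimal sketch of the two parts mentioned here, modelled on that procedure: feeding the maps of every stopped OSD into one MON store, then rebuilding store.db with an admin keyring that carries full caps. The store path, keyring path and MON ids are placeholders, and `run` is a hypothetical print-or-execute helper (dry run unless DRY_RUN=0). Check `ceph-monstore-tool --help` on your release before relying on `--mon-ids`.

```bash
#!/usr/bin/env bash
# Sketch of "recovery using OSDs"; paths and MON ids are placeholders.

# Hypothetical helper: print the command (default) or, with DRY_RUN=0, execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# collect_maps STORE OSD_DIR...
# Add the osdmaps of every (stopped!) OSD to one MON store.
collect_maps() {
    local store=$1
    shift
    run mkdir -p "$store"
    local osd
    for osd in "$@"; do
        run ceph-objectstore-tool --data-path "$osd" --no-mon-config \
            --op update-mon-db --mon-store-path "$store"
    done
}

# rebuild_store STORE KEYRING MON_ID...
# Give mon. and client.admin full caps, then rebuild store.db from STORE.
rebuild_store() {
    local store=$1 keyring=$2
    shift 2
    run ceph-authtool "$keyring" -n mon. --cap mon 'allow *'
    run ceph-authtool "$keyring" -n client.admin \
        --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *'
    # --mon-ids: the docs ask for it when MON ids are not single letters
    run ceph-monstore-tool "$store" rebuild -- --keyring "$keyring" --mon-ids "$@"
}
```

With OSDs on several hosts, the same store directory has to travel from host to host (the docs use rsync for this) so that it ends up holding the maps of all OSDs. Only then would something like `rebuild_store /root/mon-store /etc/pve/priv/ceph.client.admin.keyring pve11 pve8 pve` run once, with the ids here assumed to follow the order of the old `ceph mon dump`.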

Well, if the old installation is still there, then you can get the MON DB directly. You will need to mount the old LV and copy the MON DB under /var/lib/ceph/mon/ to the new installation. This has to be done on each node separately.

1. Restart the old node? But how can I fix this?

2. Or, how can I "recover the maps from the OSDs", and how do I replace the auth keys?

First, I ran "pveceph purge" and "pveceph init".

1. When I copied the MON DB and ran "pveceph mon create", I got:

"Could not connect to ceph cluster despite configured monitors"

2. When I ran "pveceph mon create" first and then copied the MON DB, the MON couldn't start.

About the keyring, I tried:

1. Copying my old DB, and copying the key from "/var/lib/ceph/mgr/ceph-pve/keyring" as the mon. key into "/etc/pve/priv/ceph.mon.keyring"

2. Copying the newly installed MON's key to /var/lib/ceph/mgr/ceph-pve/keyring

Neither works.

Another question: are all MON DBs the same?


Thanks, I recovered the MON on host 1 from the OSDs, following:

https://docs.ceph.com/en/latest/rados/troubleshooting/troubleshooting-mon/

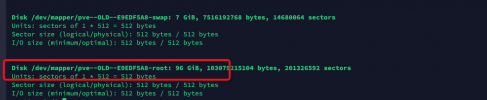

But on another host, I got this:

Is the OSD corrupt?


It is better to copy the whole output as text instead of a screenshot. This makes it easier to get a better picture and to look up messages.

The OSD needs to be mapped, so that /var/lib/ceph/osd/ceph-0 is populated. But it shouldn't be running. This will need the maps from all OSDs, though; at least the script is doing that.
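One way to get /var/lib/ceph/osd/ceph-<id> populated without a running daemon is to stop the service and let ceph-volume activate the OSD with `--no-systemd`. A sketch, assuming LVM-based OSDs as in the ceph-volume output earlier in the thread; the id and OSD fsid come from that output, and `run` is a hypothetical print-or-execute helper (dry run unless DRY_RUN=0).

```bash
#!/usr/bin/env bash
# Sketch: prepare an OSD directory for offline tools such as
# ceph-objectstore-tool without leaving the OSD daemon running.
# Assumption: the OSD was created by "ceph-volume lvm".

# Hypothetical helper: print the command (default) or, with DRY_RUN=0, execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# prime_osd_dir OSD_ID OSD_FSID
prime_osd_dir() {
    local id=$1 fsid=$2
    # the daemon must not hold the OSD while offline tools use it
    run systemctl stop "ceph-osd@$id"
    # --no-systemd: set up /var/lib/ceph/osd/ceph-$id but do not enable or start the unit
    run ceph-volume lvm activate --no-systemd "$id" "$fsid"
    run ls -l "/var/lib/ceph/osd/ceph-$id/"
}
```

For osd.0 on pve8 that would be `prime_osd_dir 0 f22a1e72-b34d-4c97-b289-e878b4b09b91`.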

-------

root@pve8:~/recovery# ls -l /var/lib/ceph/osd/ceph-0/

total 24

lrwxrwxrwx 1 ceph ceph 93 Jan 25 01:26 block -> /dev/ceph-006b38e4-3351-4c04-91e6-edf1740516d2/osd-block-f22a1e72-b34d-4c97-b289-e878b4b09b91

-rw------- 1 ceph ceph 37 Jan 25 01:26 ceph_fsid

-rw------- 1 ceph ceph 37 Jan 25 01:26 fsid

-rw------- 1 ceph ceph 55 Jan 25 01:26 keyring

-rw------- 1 ceph ceph 6 Jan 25 01:26 ready

-rw------- 1 ceph ceph 10 Jan 25 01:26 type

-rw------- 1 ceph ceph 2 Jan 25 01:26 whoami

---------

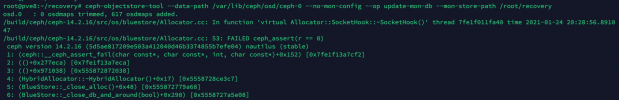

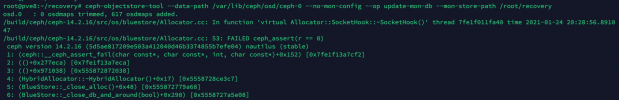

root@pve8:~/recovery# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-0 --no-mon-config --op update-mon-db --mon-store-path /root/recovery

osd.0 : 0 osdmaps trimmed, 783 osdmaps added.

/build/ceph/ceph-14.2.16/src/os/bluestore/Allocator.cc: In function 'virtual Allocator::SocketHook::~SocketHook()' thread 7fda45fe7a40 time 2021-01-25 01:25:39.136322

/build/ceph/ceph-14.2.16/src/os/bluestore/Allocator.cc: 53: FAILED ceph_assert(r == 0)

ceph version 14.2.16 (5d5ae817209e503a412040d46b3374855b7efe04) nautilus (stable)

1: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x152) [0x7fda4726fcf2]

2: (()+0x277eca) [0x7fda4726feca]

3: (()+0x971038) [0x562ec40ba038]

4: (HybridAllocator::~HybridAllocator()+0x17) [0x562ec41163c7]

5: (BlueStore::_close_alloc()+0x48) [0x562ec3fc1a68]

6: (BlueStore::_close_db_and_around(bool)+0x298) [0x562ec3fede08]

7: (BlueStore::umount()+0x299) [0x562ec404a419]

8: (main()+0x32ef) [0x562ec3aeb33f]

9: (__libc_start_main()+0xeb) [0x7fda464fe09b]

10: (_start()+0x2a) [0x562ec3b17f0a]

*** Caught signal (Aborted) **

in thread 7fda45fe7a40 thread_name:ceph-objectstor

ceph version 14.2.16 (5d5ae817209e503a412040d46b3374855b7efe04) nautilus (stable)

1: (()+0x12730) [0x7fda46a52730]

2: (gsignal()+0x10b) [0x7fda465117bb]

3: (abort()+0x121) [0x7fda464fc535]

4: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x1a3) [0x7fda4726fd43]

5: (()+0x277eca) [0x7fda4726feca]

6: (()+0x971038) [0x562ec40ba038]

7: (HybridAllocator::~HybridAllocator()+0x17) [0x562ec41163c7]

8: (BlueStore::_close_alloc()+0x48) [0x562ec3fc1a68]

9: (BlueStore::_close_db_and_around(bool)+0x298) [0x562ec3fede08]

10: (BlueStore::umount()+0x299) [0x562ec404a419]

11: (main()+0x32ef) [0x562ec3aeb33f]

12: (__libc_start_main()+0xeb) [0x7fda464fe09b]

13: (_start()+0x2a) [0x562ec3b17f0a]

Aborted


If it is this issue, then the fix will land in 14.2.17.

https://lists.ceph.io/hyperkitty/li...d/TMKGRSLSLC5CQQXLSE5AHYPBURUU4WVS/?sort=date
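Before running ceph-objectstore-tool again, a quick check of whether the installed release already contains that fix can avoid another crash. A sketch that takes the version from `ceph --version` output and compares versions with `sort -V` (GNU coreutils); the 14.2.17 threshold only matters if this really is the linked issue, and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: is the installed Ceph at least a given version?

# ceph_version_of "ceph version 14.2.16 (<sha1>) nautilus (stable)" prints 14.2.16
ceph_version_of() {
    printf '%s\n' "$1" | awk '{ print $3 }'
}

# version_ge A B: succeeds if version A >= version B (relies on sort -V)
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}
```

For example, `version_ge "$(ceph_version_of "$(ceph --version)")" 14.2.17` fails on the 14.2.16 shown in the backtrace above and succeeds on 14.2.17 or newer.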

But I suppose if you try the following, then you might get around it completely.

* copy keyrings (/etc/pve/priv/, /var/lib/ceph/bootstrap/) from the old installation

* create MONs (pveceph mon create)

* stop MONs

* remove directory /var/lib/ceph/mon/ceph-<id>

* copy directory /var/lib/ceph/mon/ceph-<id>/ from the old installation

* start MONs
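For a single node, the last four steps above could look roughly like this. It is a sketch under several assumptions: the MON id equals the host name (as pveceph sets it up), the keyrings have already been copied and `pveceph mon create` has already run, the old root LV (the device passed in, hypothetical) is still present with the MON directory at the default path on it, and `run` is a hypothetical print-or-execute helper (dry run unless DRY_RUN=0). Instead of deleting the freshly created MON directory, it moves it aside to `<dir>.bak` so it can be put back.

```bash
#!/usr/bin/env bash
# Sketch: replace a freshly created MON directory with the one from the old install.

# Hypothetical helper: print the command (default) or, with DRY_RUN=0, execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# stage_old_mon OLD_LV MON_ID STAGE: copy the old MON directory off the old root LV
stage_old_mon() {
    local old_lv=$1 id=$2 stage=$3 mnt=/mnt/oldroot
    run mkdir -p "$mnt" "$stage"
    run mount -o ro "$old_lv" "$mnt"
    # -a keeps ownership and permissions of the MON files
    run cp -a "$mnt/var/lib/ceph/mon/ceph-$id" "$stage/"
    run umount "$mnt"
}

# replace_mon_dir MON_ID STAGED_DIR: stop the MON, swap its directory, start it again
replace_mon_dir() {
    local id=$1 staged=$2 dir=/var/lib/ceph/mon/ceph-$1
    run systemctl stop "ceph-mon@$id"
    run mv "$dir" "$dir.bak"
    run cp -a "$staged" "$dir"
    run chown -R ceph:ceph "$dir"
    run systemctl start "ceph-mon@$id"
}
```

On node pve this would be `stage_old_mon /dev/oldvg/root pve /root/old_ceph` followed by `replace_mon_dir pve /root/old_ceph/ceph-pve`, repeated on each node with its own id.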


I had tried this, and I got an error. The error log of mon.pve shows "unable to read magic from mon data":

Code:

Jan 25 07:51:03 pve ceph-mon[1947]: 2021-01-25 07:51:03.333 7f2345c23400 -1 unable to read magic from mon data

Jan 25 07:51:03 pve systemd[1]: ceph-mon@pve.service: Main process exited, code=exited, status=1/FAILURE

Jan 25 07:51:03 pve systemd[1]: ceph-mon@pve.service: Failed with result 'exit-code'.

Jan 25 07:51:13 pve systemd[1]: ceph-mon@pve.service: Service RestartSec=10s expired, scheduling restart.

Jan 25 07:51:13 pve systemd[1]: ceph-mon@pve.service: Scheduled restart job, restart counter is at 6.

Jan 25 07:51:13 pve systemd[1]: Stopped Ceph cluster monitor daemon.

Jan 25 07:51:13 pve systemd[1]: ceph-mon@pve.service: Start request repeated too quickly.

Jan 25 07:51:13 pve systemd[1]: ceph-mon@pve.service: Failed with result 'exit-code'.

Jan 25 07:51:13 pve systemd[1]: Failed to start Ceph cluster monitor daemon.

Jan 25 08:42:07 pve systemd[1]: Started Ceph cluster monitor daemon.

Jan 25 08:44:17 pve ceph-mon[17615]: 2021-01-25 08:44:17.542 7f831f824700 -1 mon.pve@2(peon) e3 get_health_metrics reporting 1 slow ops, oldest is mon_command({"prefix":"pg dump","format":"json","dumpcontents":["osds"]} v 0)

Jan 25 08:44:22 pve ceph-mon[17615]: 2021-01-25 08:44:22.542 7f831f824700 -1 mon.pve@2(peon) e3 get_health_metrics reporting 2 slow ops, oldest is mon_command({"prefix":"pg dump","format":"json","dumpcontents":["osds"]} v 0)

mon.pve8 seems OK, but it reports "3 slow ops, oldest one blocked for 294 sec, mon.pve8 has slow ops":

Code:

root@pve8:~# ceph daemon mon.pve8 ops

{

"ops": [

{

"description": "osd_boot(osd.3 booted 0 features 4611087854035861503 v3437)",

"initiated_at": "2021-01-25 08:59:04.492338",

"age": 210.02295253899999,

"duration": 210.02296655399999,

"type_data": {

"events": [

{

"time": "2021-01-25 08:59:04.492338",

"event": "initiated"

},

{

"time": "2021-01-25 08:59:04.492338",

"event": "header_read"

},

{

"time": "2021-01-25 08:59:04.492336",

"event": "throttled"

},

{

"time": "2021-01-25 08:59:04.492349",

"event": "all_read"

},

{

"time": "2021-01-25 08:59:04.492379",

"event": "dispatched"

osd.0 and osd.1 look OK in the PVE UI, but they seem to hang. (osd.0, osd.1 and mon.pve8 are on the same host.)

Code:

root@pve8:~# ceph daemon osd.0 status

{

"cluster_fsid": "856cb359-a991-46b3-9468-a057d3e78d7c",

"osd_fsid": "f22a1e72-b34d-4c97-b289-e878b4b09b91",

"whoami": 0,

"state": "booting",

"oldest_map": 2652,

"newest_map": 3434,

"num_pgs": 274

}

Did you replace the DB on each new MON with its old one?

I prepared a script of the process, to make it easier to discuss:

Code:

#!/usr/bin/env bash
hosts=(pve pve8 pve11)
for host in "${hosts[@]}"; do
    echo "pveceph stop in $host"
    ssh "root@$host" pveceph stop
done
for host in "${hosts[@]}"; do
    echo "pveceph purge in $host"
    ssh "root@$host" pveceph purge
done
# /etc/pve/ceph.conf has no mon config, so pveceph purge will remove all mon config
ssh root@pve cp /etc/pve/ceph.conf.bk /etc/pve/ceph.conf
ssh root@pve pveceph purge
echo "restore old ceph config and mon config"
ssh root@pve cp /etc/pve/ceph.conf.bk /etc/pve/ceph.conf
ssh root@pve cp /root/old_ceph/ceph.client.admin.keyring /etc/ceph/
ssh root@pve cp /root/old_ceph/ceph.client.admin.keyring /etc/pve/priv
ssh root@pve cp /root/old_ceph/ceph.mon.keyring /etc/pve/priv
for host in "${hosts[@]}"; do
    echo "pveceph init & pveceph mon create in $host"
    ssh "root@$host" pveceph init
    ssh "root@$host" pveceph mon create
done
echo "ceph mon stat:"
ssh root@pve ceph mon stat
for host in "${hosts[@]}"; do
    echo "systemctl stop ceph-mon* in $host"
    # quote the pattern so neither the local nor the remote shell expands it
    ssh "root@$host" "systemctl stop 'ceph-mon*'"
done
# replace each MON's store.db with the recovered one (MON id == host name)
for host in "${hosts[@]}"; do
    mon=/var/lib/ceph/mon/ceph-$host
    ssh "root@$host" "rm -rf $mon/store.db && cp -R /root/recovery2/ceph-$host/store.db $mon/ && chown -R ceph:ceph $mon/store.db"
done
for host in "${hosts[@]}"; do
    echo "systemctl start ceph-mon* in $host"
    ssh "root@$host" "systemctl start 'ceph-mon*'"
done

The output:

Code:

pveceph stop in pve

pveceph stop in pve8

pveceph stop in pve11

pveceph purge in pve

Error gathering ceph info, already purged? Message: got timeout

Removed /etc/systemd/system/ceph-mon.target.wants/ceph-mon@pve.service.

Removed /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@pve.service.

Foreign MON address in ceph.conf. Keeping config & keyrings

pveceph purge in pve8

Error gathering ceph info, already purged? Message: got timeout

Removed /etc/systemd/system/ceph-mon.target.wants/ceph-mon@pve8.service.

Foreign MON address in ceph.conf. Keeping config & keyrings

pveceph purge in pve11

Error gathering ceph info, already purged? Message: got timeout

Removed /etc/systemd/system/ceph-mon.target.wants/ceph-mon@pve11.service.

Foreign MON address in ceph.conf. Keeping config & keyrings

unable to get monitor info from DNS SRV with service name: ceph-mon

Error gathering ceph info, already purged? Message: rados_connect failed - No such file or directory

Error gathering ceph info, already purged? Message: rados_connect failed - No such file or directory

Removing config & keyring files

restore old ceph config and mon config

pveceph init & pveceph mon create in pve

unable to get monitor info from DNS SRV with service name: ceph-mon

monmaptool: monmap file /tmp/monmap

monmaptool: generated fsid 1b468090-d9df-468c-8e61-24c5a20b8d41

epoch 0

fsid 1b468090-d9df-468c-8e61-24c5a20b8d41

last_changed 2021-01-26 22:18:23.531667

created 2021-01-26 22:18:23.531667

min_mon_release 0 (unknown)

0: [v2:192.168.3.5:3300/0,v1:192.168.3.5:6789/0] mon.pve

monmaptool: writing epoch 0 to /tmp/monmap (1 monitors)

Created symlink /etc/systemd/system/ceph-mon.target.wants/ceph-mon@pve.service -> /lib/systemd/system/ceph-mon@.service.

creating manager directory '/var/lib/ceph/mgr/ceph-pve'

creating keys for 'mgr.pve'

setting owner for directory

enabling service 'ceph-mgr@pve.service'

Created symlink /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@pve.service -> /lib/systemd/system/ceph-mgr@.service.

starting service 'ceph-mgr@pve.service'

pveceph init & pveceph mon create in pve8

Created symlink /etc/systemd/system/ceph-mon.target.wants/ceph-mon@pve8.service -> /lib/systemd/system/ceph-mon@.service.

pveceph init & pveceph mon create in pve11

Created symlink /etc/systemd/system/ceph-mon.target.wants/ceph-mon@pve11.service -> /lib/systemd/system/ceph-mon@.service.

ceph mon stat:

e3: 3 mons at {pve=[v2:192.168.3.5:3300/0,v1:192.168.3.5:6789/0],pve11=[v2:192.168.3.11:3300/0,v1:192.168.3.11:6789/0],pve8=[v2:192.168.3.8:3300/0,v1:192.168.3.8:6789/0]}, election epoch 10, leader 0 pve, quorum 0,1 pve,pve8

systemctl stop ceph-mon* in pve

systemctl stop ceph-mon* in pve8

systemctl stop ceph-mon* in pve11

systemctl start ceph-mon* in pve

systemctl start ceph-mon* in pve8

systemctl start ceph-mon* in pve11

ceph -s shows:

Code:

root@pve8:/etc/pve/priv# ceph -s

cluster:

id: 856cb359-a991-46b3-9468-a057d3e78d7c

health: HEALTH_WARN

1 osds down

1 host (3 osds) down

5 pool(s) have no replicas configured

Reduced data availability: 236 pgs inactive

Degraded data redundancy: 334547/2964667 objects degraded (11.284%), 288 pgs degraded, 288 pgs undersized

3 pools have too many placement groups

4 daemons have recently crashed

30 slow ops, oldest one blocked for 26833 sec, osd.1 has slow ops

too many PGs per OSD (256 > max 250)

1/3 mons down, quorum pve11,pve8

services:

mon: 3 daemons, quorum pve11,pve8 (age 73s), out of quorum: pve

mgr: pve8(active, starting, since 1.51716s)

osd: 5 osds: 2 up (since 5d), 3 in (since 5d)

data:

pools: 10 pools, 768 pgs

objects: 2.63M objects, 10 TiB

usage: 9.5 TiB used, 20 TiB / 29 TiB avail

pgs: 30.729% pgs unknown

334547/2964667 objects degraded (11.284%)

288 active+undersized+degraded

243 active+clean

236 unknown

1 active+clean+scrubbing+deep

io:

client: 0 B/s rd, 1.7 MiB/s wr, 0 op/s rd, 70 op/s wr

mon.pve fails. The systemd log:

Code:

Jan 26 22:18:21 pve systemd-logind[730]: New session 228 of user root.

Jan 26 22:18:21 pve systemd[1]: Started Session 228 of user root.

Jan 26 22:18:23 pve pvestatd[1390]: rados_connect failed - No such file or directory

Jan 26 22:18:23 pve pveceph[999548]: <root@pam> starting task UPID:pve:000F4091:00CC3A2F:601024AF:cephcreatemon:mon.pve:root@pam:

Jan 26 22:18:23 pve systemd[1]: Started Ceph cluster monitor daemon.

Jan 26 22:18:23 pve systemd[1]: Starting Ceph object storage daemon osd.2...

Jan 26 22:18:23 pve systemd[1]: Reached target ceph target allowing to start/stop all ceph-fuse@.service instances at once.

Jan 26 22:18:23 pve systemd[1]: Reached target ceph target allowing to start/stop all ceph-mon@.service instances at once.

Jan 26 22:18:23 pve systemd[1]: Reached target ceph target allowing to start/stop all ceph-mgr@.service instances at once.

Jan 26 22:18:23 pve systemd[1]: Reached target ceph target allowing to start/stop all ceph-mds@.service instances at once.

Jan 26 22:18:23 pve systemd[1]: Starting Ceph object storage daemon osd.3...

Jan 26 22:18:23 pve systemd[1]: Starting Ceph object storage daemon osd.4...

Jan 26 22:18:23 pve systemd[1]: Reloading.

Jan 26 22:18:23 pve pvestatd[1390]: status update time (19.276 seconds)

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $free in addition (+) at /usr/share/perl5/PVE/Storage/RBDPlugin.pm line 561.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $used in addition (+) at /usr/share/perl5/PVE/Storage/RBDPlugin.pm line 561.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $avail in int at /usr/share/perl5/PVE/Storage.pm line 1223.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $used in int at /usr/share/perl5/PVE/Storage.pm line 1224.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $free in addition (+) at /usr/share/perl5/PVE/Storage/RBDPlugin.pm line 561.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $used in addition (+) at /usr/share/perl5/PVE/Storage/RBDPlugin.pm line 561.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $avail in int at /usr/share/perl5/PVE/Storage.pm line 1223.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $used in int at /usr/share/perl5/PVE/Storage.pm line 1224.

Jan 26 22:18:23 pve systemd[1]: Started Ceph object storage daemon osd.2.

Jan 26 22:18:23 pve systemd[1]: Started Ceph object storage daemon osd.3.

Jan 26 22:18:23 pve systemd[1]: Started Ceph object storage daemon osd.4.

Jan 26 22:18:23 pve systemd[1]: Reached target ceph target allowing to start/stop all ceph-osd@.service instances at once.

Jan 26 22:18:23 pve systemd[1]: Reached target ceph target allowing to start/stop all ceph*@.service instances at once.

Jan 26 22:18:23 pve pveceph[999569]: <root@pam> starting task UPID:pve:000F412E:00CC3A46:601024AF:cephcreatemgr:mgr.pve:root@pam:

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $free in addition (+) at /usr/share/perl5/PVE/Storage/RBDPlugin.pm line 561.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $used in addition (+) at /usr/share/perl5/PVE/Storage/RBDPlugin.pm line 561.

Jan 26 22:18:23 pve ceph-osd[999724]: 2021-01-26 22:18:23.751 7f144f54a700 -1 monclient(hunting): handle_auth_bad_method server allowed_methods [2] but i only suppo

Jan 26 22:18:23 pve ceph-osd[999724]: failed to fetch mon config (--no-mon-config to skip)

Jan 26 22:18:23 pve ceph-osd[999723]: 2021-01-26 22:18:23.755 7f43d14bb700 -1 monclient(hunting): handle_auth_bad_method server allowed_methods [2] but i only suppo

Jan 26 22:18:23 pve ceph-osd[999723]: failed to fetch mon config (--no-mon-config to skip)

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $avail in int at /usr/share/perl5/PVE/Storage.pm line 1223.

Jan 26 22:18:23 pve pvestatd[1390]: Use of uninitialized value $used in int at /usr/share/perl5/PVE/Storage.pm line 1224.

Jan 26 22:18:23 pve systemd[1]: ceph-osd@3.service: Main process exited, code=exited, status=1/FAILURE

Jan 26 22:18:23 pve systemd[1]: ceph-osd@3.service: Failed with result 'exit-code'.

Jan 26 22:18:23 pve systemd[1]: ceph-osd@2.service: Main process exited, code=exited, status=1/FAILURE

Jan 26 22:18:23 pve systemd[1]: ceph-osd@2.service: Failed with result 'exit-code'.

root@pve8:/etc/pve/priv# ceph mon dump

dumped monmap epoch 9

epoch 9

fsid 856cb359-a991-46b3-9468-a057d3e78d7c

last_changed 2021-01-16 23:06:10.132860

created 2020-09-30 14:45:03.339127

min_mon_release 14 (nautilus)

0: [v2:192.168.3.11:3300/0,v1:192.168.3.11:6789/0] mon.pve11

1: [v2:192.168.3.8:3300/0,v1:192.168.3.8:6789/0] mon.pve8

2: [v2:192.168.3.5:3300/0,v1:192.168.3.5:6789/0] mon.pve
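The OSD log lines "handle_auth_bad_method ... failed to fetch mon config" come from the cephx handshake with the MONs. Besides the MON addresses, one thing worth ruling out is that the key in an OSD's local keyring differs from the one held by the recovered MON store. A sketch, assuming the default OSD directory layout; `ceph auth get-key` is a real command, while `keyring_key` and `osd_key_matches` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: compare an OSD's on-disk cephx key with the key stored in the MONs.

# keyring_key FILE: print the "key = ..." value of the first entry in a keyring file
keyring_key() {
    sed -n 's/^[[:space:]]*key[[:space:]]*=[[:space:]]*//p' "$1" | head -n1
}

# osd_key_matches ID [OSD_ROOT]: succeed if both keys exist and are identical
osd_key_matches() {
    local id=$1 root=${2:-/var/lib/ceph/osd} disk mon
    disk=$(keyring_key "$root/ceph-$id/keyring")
    mon=$(ceph auth get-key "osd.$id")
    [ -n "$disk" ] && [ "$disk" = "$mon" ]
}
```

On node pve, `for id in 2 3 4; do osd_key_matches "$id" || echo "osd.$id: key differs or is missing"; done` would list the OSDs whose keys do not match.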


Make sure the ceph.conf has the MON addresses; the OSDs seem to complain about it. And leave the MON services in place once created. Only the /var/lib/ceph/mon/ directory needs to be copied. This makes sure that there isn't a conflict with the ceph.conf.
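Whether ceph.conf lists every MON can be checked with a small helper. This sketch only handles a plain, space- or comma-separated address list on one mon_host (or "mon host") line, as used in this thread; the function name is hypothetical, and the addresses to check would come from `ceph mon dump`.

```bash
#!/usr/bin/env bash
# Sketch: does the mon_host line of a ceph.conf list all expected MON addresses?

# mon_host_has CONF IP...: report each IP missing from mon_host; fail if any is missing
mon_host_has() {
    local conf=$1 line ip rc=0
    shift
    # first "mon_host =" or "mon host =" line, e.g. from the [global] section
    line=$(grep -E '^[[:space:]]*mon[ _]host[[:space:]]*=' "$conf" | head -n1)
    for ip in "$@"; do
        # pad with spaces so every address is delimited on both sides
        case " ${line#*=} " in
            *[[:space:],]"$ip"[[:space:],]*) ;;
            *) echo "mon_host lacks $ip"; rc=1 ;;
        esac
    done
    return "$rc"
}
```

For this cluster: `mon_host_has /etc/pve/ceph.conf 192.168.3.5 192.168.3.8 192.168.3.11`.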


Code:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 172.16.1.0/24

fsid = 856cb359-a991-46b3-9468-a057d3e78d7c

mon_allow_pool_delete = true

mon_host = 192.168.3.5 192.168.3.8 192.168.3.11

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 192.168.3.5/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.pve]

public_addr = 192.168.3.5

[mon.pve11]

public_addr = 192.168.3.11

[mon.pve8]

public_addr = 192.168.3.8

ceph.conf is OK.

In my script:

1. "ssh root@$host pveceph mon create" creates a MON on each of my 3 hosts

2. stop all MONs

3. delete /var/lib/ceph/mon/ceph-pve*/store.db/

4. copy the old DB

5. chown the DB to ceph

6. start the MONs

mon.pve11 and mon.pve8 seem OK, but mon.pve fails.
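When one MON refuses to start after this procedure, comparing its data directory with a working one is a cheap first check. A MON directory created by pveceph (ceph-mon --mkfs) normally contains keyring, kv_backend and store.db, owned by the ceph user. The sketch below checks exactly that; the function name is hypothetical. As far as I know, "unable to read magic from mon data" is printed when the magic key cannot be read from inside the store, so a passing check does not prove that the copied store.db itself is complete.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the layout of a MON data directory before starting it.

# check_mon_dir DIR [OWNER]: print every problem found; fail if there is any
check_mon_dir() {
    local dir=$1 owner=${2:-ceph} rc=0 f
    for f in keyring kv_backend store.db; do
        if [ ! -e "$dir/$f" ]; then
            echo "missing: $dir/$f"
            rc=1
        fi
    done
    # ceph-mon runs as the ceph user and must be able to open every store file
    if [ -d "$dir/store.db" ] &&
       [ -n "$(find "$dir/store.db" ! -user "$owner" -print -quit)" ]; then
        echo "not owned by $owner: files under $dir/store.db"
        rc=1
    fi
    return "$rc"
}
```

Running `check_mon_dir /var/lib/ceph/mon/ceph-pve` on pve and `check_mon_dir /var/lib/ceph/mon/ceph-pve8` on pve8 shows whether the failing MON differs in layout or ownership from a working one.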


"If it is this issue, then the fix will land in 14.2.17." Any release plan?

That's an upstream decision, so I don't know.

Well, if it's only the one node, then you can re-create it later.

PGs are 100% unknown; maybe I will have to say goodbye to my data.

Will the recovery process modify the data on the OSDs?

I have 5 OSDs on 2 hosts. Last week I recovered the monmap from the 3 OSDs on one host, and I could read some data. Now I can't.

Thanks a lot, I learned much from your replies.