Hello,

We currently have a 6-node Ceph cluster, 3 of which are dedicated Ceph nodes, running Proxmox version 7.2-4.

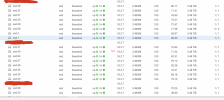

There are 8 x 3.84 TiB drives in each Ceph node (24 in total across the three nodes). We are running out of space in the Ceph pool, which is at 86-87% usage.

We currently don't have any spare 3.84 TiB drives, so we are considering temporarily adding 3 x 1.92 TiB drives to each Ceph node (9 drives in total) to expand the Ceph pool.
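To get a rough idea of what the extra drives would buy us, here is the raw-capacity arithmetic before and after the change. It is only a sketch: it assumes the 86-87% figure tracks raw utilization, that the stated TiB sizes are exact, and that the amount of stored data stays the same. `projected_usage` is a made-up helper for illustration.

```python
# Rough capacity projection for the planned expansion.
# Assumptions: usage % tracks raw utilization, drive sizes are exact TiB,
# and the amount of stored data does not change.

def projected_usage(existing_drives, existing_size, new_drives, new_size, current_usage):
    """Return (old_raw, new_raw, new_usage) after adding drives."""
    old_raw = existing_drives * existing_size      # current raw capacity (TiB)
    new_raw = old_raw + new_drives * new_size      # raw capacity after expansion (TiB)
    used = old_raw * current_usage                 # data currently stored (TiB)
    return old_raw, new_raw, used / new_raw

for usage in (0.86, 0.87):
    old_raw, new_raw, new_usage = projected_usage(24, 3.84, 9, 1.92, usage)
    print(f"{usage:.0%} now -> {new_usage:.1%} after "
          f"({old_raw:.2f} -> {new_raw:.2f} TiB raw)")
```

With these inputs raw capacity goes from 92.16 to 109.44 TiB, so usage would drop to roughly 72-73%, assuming data rebalances evenly.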

My questions are:

1) Do we need to manually configure or tweak the drive weights?

2) Could anything go wrong if we add smaller OSD drives?

3) Or is it as straightforward as creating the OSDs via the GUI and letting Ceph set the correct weights and other parameters for the new 1.92 TiB drives?
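Our (unconfirmed) understanding is that Ceph by default sets a new OSD's CRUSH weight to the drive's capacity in TiB, so a 1.92 TiB OSD would get about half the weight of a 3.84 TiB one. The sketch below shows the expected data share under that assumption, with all OSDs on the three dedicated nodes and the host as the failure domain. The host names `ceph1` to `ceph3` and the helpers `host_weights` / `share_within_host` are hypothetical, made up for illustration.

```python
from collections import defaultdict

# (host, drive size in TiB) for the planned layout; host names are hypothetical.
osds = []
for host in ("ceph1", "ceph2", "ceph3"):
    osds += [(host, 3.84)] * 8 + [(host, 1.92)] * 3

def host_weights(osds):
    """Sum OSD weights per host, assuming CRUSH weight == drive size in TiB."""
    weights = defaultdict(float)
    for host, size in osds:
        weights[host] += size
    return dict(weights)

def share_within_host(osds, host, size):
    """Expected fraction of a host's data held by one OSD of the given size."""
    return size / host_weights(osds)[host]

print(host_weights(osds))
print(share_within_host(osds, "ceph1", 1.92), share_within_host(osds, "ceph1", 3.84))
```

If that assumption holds, each host gains the same 5.76 TiB of weight, so the hosts stay balanced, and a 1.92 TiB OSD should hold about half as much data as a 3.84 TiB one, i.e. fill to roughly the same percentage. Actual placement is probabilistic, so individual OSDs will deviate somewhat.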

