Dear All,

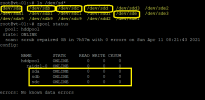

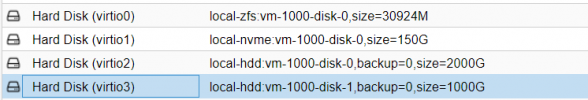

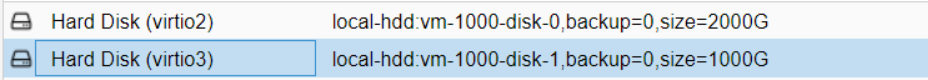

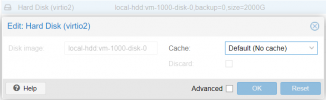

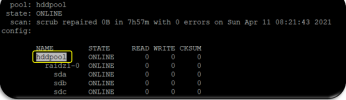

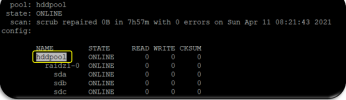

We are planning to increase the size of the disk array on our Proxmox server, in other words to add more disks. We already know which pool we are going to expand: it is called "hddpool".

Could you please tell me how this can be done and what the steps are? Also, what precautions should we take before starting the process to avoid problems?

Thank you in advance for any help you can provide.

Best regards,

Yaseen KAMALA