If you want to encrypt your data, the best way is usually to start at the lowest layer possible to get as much data encrypted as possible. If you run all your VMs on top of Proxmox, then adding encryption to all disks in Proxmox is the natural solution, but there doesn't seem to be an official way. In addition, I haven't found a full guide, but lots of people that wanted to do it and didn't know how. This tutorial is for those people: If you want to add full disk encryption to Proxmox, follow along.

Warning: Here be dragons. This is not officially supported and could break on update (though I've tried my best to avoid it). Make sure you have backups. I will also provide further links in case you get stuck, sometimes you will have to debug things for yourself. If you are a beginner with Linux, be prepared for a steep learning curve. Don't blame me if you lose data or your system ends up broken. I'll try to help, but this is very much at your own risk.

Background

If you already know Linux & Debian quite well and are familiar with full disk encryption, you can skip this section. If you get stuck later you can always return here.

Is there a TL;DR?

Yes: apt install cryptsetup-initramfs dropbear-initramfs. If you need more detailed instructions, you probably want this whole guide, because there are a lot of steps, but those two packages are what power the ability to decrypt the root partition remotely.

What is full disk encryption?

This is the ability to encrypt your entire disk (with some exceptions, see below), meaning that if the system is turned off, nobody can easily read your data by just taking your disk. For me the main benefit is that I can just sell or throw away drives I no longer need. There's no need to wipe them if the data is encrypted. There are many articles out there with more in-depth explanation, for example here.

Is my CPU fast enough for my NVMe drive? What about self-encrypting drives?

If running cryptsetup benchmark shows that the fastest algorithm can't keep up with the speed of your drive (or you are worried about the CPU load caused by encryption), you might want to look into self-encrypting drives. They're beyond the scope of this tutorial and you might have a hard time doing remote unlock, but if they suit your use case well, they're probably simpler than this tutorial.

How do I create a backup?

This is a complex question, but I'll give you a very inefficient solution. Warning! I didn't test this method. I had a spare drive, so my "backup" was that I didn't modify the old installation. I also had all important data backed up on a different device, so in the worst case I could rebuild everything from backups. The simplest and least efficient solution is simply dd if=/dev/sda of=/mnt/backup/proxmox.img. This will create a bit-for-bit image of your disk (and will naturally be the size of the whole disk). To restore, you run the reverse: dd if=/mnt/backup/proxmox.img of=/dev/sda (this overwrites all data on /dev/sda).

How do I add encryption during Proxmox installation?

This tutorial deals with encryption of an existing installation. If you are starting fresh, my recommendation would be to install Debian with full disk encryption and then add Proxmox to it. This is also an advanced method, but is at least documented officially. You can also just install Proxmox unencrypted and then use this guide. It's a bit cumbersome, but should work.

Why not use reencrypt?

Because I didn't know about it when I set out to do this. If you have backups you can try this; it might be much faster, esp. for large installations. But do so at your own risk.

Can I test this somehow?

Yes, actually. Proxmox can run inside Proxmox, so before I did everything on the live system, I installed Proxmox inside a VM, gave it two disks, installed it on one and then ran through the steps on the other one. Make sure your boot process reflects your host system: Choose UEFI if your host is also using UEFI (OVMF), BIOS otherwise. If you're not sure, check in the console of the host: ls /sys/firmware/efi/efivars/. If that returns a bunch of values, you're running a UEFI system. One other note: Even with an EFI disk attached, I could not get it to remember boot entries, so if you reboot and notice the boot step wasn't saved properly, press "Esc" during startup of the VM, go into the Boot Maintenance Manager and Boot From File, find the right disk and then EFI/proxmox/grubx64.efi or whatever file is there and boot that. This is just an issue inside the VM and shouldn't affect anything when you're doing it on your host for real.

Where do I go if I get stuck?

Personally, I highly recommend the Arch Linux Wiki. It's a phenomenal resource full of high quality documentation. Beware though, it is a different distribution and sometimes that matters (esp. around boot processes). Unfortunately, Debian's documentation is nowhere near the same quality, but there's lots of resources out there if you search. Using "Ubuntu" as a search term can help as Ubuntu is based on Debian and there's a lot of similarities. I'll try to link resources in each section that provide more detailed explanation and there is a whole references section at the end, too.

Goal

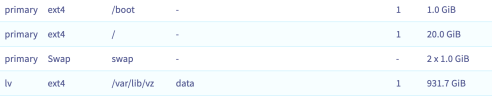

Our goal after this tutorial is the following:

- Our root (/) partition is an encrypted LVM volume

- The rest of our LVM storage is available for VMs (data)

- Our boot (/boot) and EFI (/boot/efi) partitions are not encrypted

- We can decrypt our system remotely using SSH

- Any extra physical drives are also encrypted and upon boot we decrypt them automatically

Assumptions

- You are using Grub. I will provide some extra explanation for those that aren't using Grub, but this is all theoretical as my installation used Grub.

- You boot using UEFI. The instructions below are written with that in mind. Doing a BIOS boot will change quite a few things.

- Your root partition is on LVM. It's not an issue if it isn't, but you might need to adjust some instructions. If you use ZFS you're on your own, I don't know how that works.

- You have access to a screen & keyboard attached to your Proxmox server. You will need it.

- You have enough spare storage to at least create a full backup of your Proxmox installation. Ideally, you have a spare disk that is at least as large as your Proxmox disk, but at the very least create a backup first.

- Your Proxmox is running on Debian bookworm. If not, that's fine, but some steps might be different.

- You have a USB stick available large enough for a Debian Live ISO (or some other way of booting a live distro).

- You have internet access from your Proxmox server, ideally via DHCP or you know how to get extra packages installed for both the Debian live ISO and the Proxmox installation.

- You are running a 64 bit system. If you are on 32 bit, pay attention during Grub install. Otherwise, I can make no promises anything here will work.
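Several of the assumptions above can be checked from a shell on the running Proxmox host before you start. The following is only a rough sketch: the function names are made up for this example, and it assumes bash plus the findmnt and lsblk tools from util-linux are available.

```shell
#!/usr/bin/env bash
# Rough pre-flight checks for the assumptions above.
# All function names here are hypothetical helpers, not part of Proxmox.

# UEFI boots expose /sys/firmware/efi; BIOS boots do not.
boot_mode() {
    if [ -d "${1:-/sys/firmware/efi}" ]; then echo "UEFI"; else echo "BIOS"; fi
}

# Print VERSION_CODENAME from an os-release style file (e.g. "bookworm").
debian_codename() {
    local file="${1:-/etc/os-release}"
    # Source in a subshell so the file's variables don't leak into our shell.
    ( . "$file" 2>/dev/null && echo "${VERSION_CODENAME:-unknown}" ) || echo "unknown"
}

# True (exit 0) if the machine architecture is 64-bit x86.
is_64bit() {
    [ "${1:-$(uname -m)}" = "x86_64" ]
}

# Print the device / is mounted from and its lsblk type ("lvm" for LVM).
root_device_type() {
    local src
    src="$(findmnt -no SOURCE /)" || return 1
    echo "$src $(lsblk -no TYPE "$src")"
}

# Print a short summary of all checks.
preflight_report() {
    echo "Boot mode:   $(boot_mode)"
    echo "Debian:      $(debian_codename)"
    if is_64bit; then echo "64-bit:      yes"; else echo "64-bit:      no"; fi
    echo "Root device: $(root_device_type 2>/dev/null || echo unknown)"
}
```

Source the file and run preflight_report on the host. If anything other than UEFI, bookworm, 64-bit and an lvm root shows up, expect to adapt some of the later steps.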

Let's Go!

You've read the background and are happy to take the risk? Let's get into it then. We will perform the following steps to (hopefully) end up with an encrypted system.

- Boot Debian live ISO

- Create correct partition layout

- Encrypt future root partition & set up LVM

- Copy old installation into new encrypted partition

- Make new installation bootable

- Decrypt other drives

- Add SSH unlock

- Boot

- Troubleshoot

- References

Boot Debian live ISO

To begin we need to get ourselves a live Linux distro to perform all our tasks on. Technically you can accomplish a lot of this from your running Proxmox server if you have a separate drive that you want to encrypt, but do so at your own risk. If you get to the step where we copy data from the old to the new installation, it's probably safest to do that from a live disk instead of the running system.

Because Proxmox is based on Debian, I highly recommend choosing Debian as your live system just to be safe. It probably works with others, but why risk it? Find out what Debian version you are on:

Bash:

$ grep VERSION= /etc/os-release

VERSION="12 (bookworm)"

In my case we need to download Debian bookworm from https://www.debian.org/CD/live/. I chose the image with the -standard suffix, which comes without a GUI. You can definitely choose something else, but everything will be in the terminal and we'll only spend very little time on the screen the server is attached to, if possible.

Copy that onto a USB stick, e.g. using dd or a tool like Rufus or Balena Etcher - the last one is my favourite for Windows. For Linux, plain old dd will do just fine. For more ways see here.

Before you shut off your system, it is also a good idea to update it. I would highly recommend only running this on currently supported versions of Debian & Proxmox. If you are on older versions, do an upgrade and make sure everything works. You want to make sure that any issues you're facing later on are due to problems with what we're doing here, not other parts.
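For the dd route mentioned above, a minimal sketch could look like the following. write_image is a hypothetical helper name, and the image path and /dev/sdY in the usage line are placeholders you must replace. Double-check the target with lsblk first, because dd overwrites whatever it is pointed at.

```shell
#!/usr/bin/env bash
# Write a disk image to a target with dd.
# "write_image" is a hypothetical helper name for this sketch.
write_image() {
    local image="$1" target="$2"
    if [ ! -r "$image" ]; then
        echo "Cannot read image: $image" >&2
        return 1
    fi
    # conv=fsync makes dd flush the data to the target before it exits,
    # so the stick is safe to remove once the command returns.
    dd if="$image" of="$target" bs=4M conv=fsync
}

# Usage (placeholders, run as root):
#   write_image /path/to/debian-live.iso /dev/sdY
```

GNU dd also accepts status=progress if you want to watch the copy.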

If everything is up-to-date, working and your USB stick is ready, reboot your system into the Debian live distro. Make sure to shut it down properly to avoid any disk corruption messing up our steps later on.

Once you've booted up Debian you're ready to make changes. However, depending on where your server is, this might not be the most comfortable machine to be working on, so I like to enable SSH and then return to my normal computer and do everything else from there. It also makes it much easier to copy & paste the commands in this guide. To set up SSH:

Bash:

# Become root

sudo -i

# Updating apt is important as live ISOs are behind on packages

apt update

# Install SSH

apt install -y ssh

# Start SSH daemon

systemctl start ssh

# Set a password for the regular user (we use this to connect in the next step)

passwd user

# Find out the IP address

ip addr

Remember the password you set and note down the IP address assigned to Debian via DHCP. If you weren't automatically connected to the internet, you might have to set that up manually. Go back to your preferred computer and use SSH to connect:

Bash:

# Enter the user's password

ssh user@x.x.x.x

sudo -i

If you reboot later on, but need to return to the live disk for troubleshooting, come back here and follow these steps again. The live disk does not persist your changes.

Note: If you find during debugging that you repeatedly run into SSH warnings after each reboot, it is because the host key changes on each reboot. SSH throws a very angry warning which is usually very important, but here it's not super helpful. If you are on a network you trust enough, you can add -o "UserKnownHostsFile=/dev/null" -o "StrictHostKeyChecking=no" to the ssh command to disable the warnings. However, do so at your own risk and have a look at man 5 ssh_config to understand what these options do. If you're not sure, you can just follow the instructions in SSH's warning and remove the old key and then connect and trust the new fingerprint. The extra paranoid can even compare the fingerprint by getting it from the server's attached screen, but that's beyond scope here.

Now that we're connected, let's install the three packages we need during the installation. Remember, these are installed on the live disk, so if you reboot, you need to install them again:

Bash:

apt install dosfstools cryptsetup efibootmgr

Create correct partition layout

Finally, it's time to make some changes. I hope you have backups, because many of the operations that follow are destructive and will wipe data. First, have a look at the partition layout of your existing installation. For example, if your existing installation is on /dev/sda, run fdisk -l /dev/sda. Mine looked something like this:

Code:

Device Start End Sectors Size Type

/dev/sda1 34 2047 2014 1007K BIOS boot

/dev/sda2 2048 2099199 2097152 1G EFI System

/dev/sda3 2099200 1953525134 1951425935 930.5G Linux LVM

Because we want to encrypt our root partition (which lives somewhere in /dev/sda3) but not /boot, we need to create a separate partition for /boot. We will have both an EFI & boot partition. There's probably better ways, but I didn't want to stray too far from Proxmox' expected setup to minimise future issues, so all we'll do is create one new partition.

If you notice that your original installation has more partitions (for example, root and VM data are separate and LVM is not in use), then you might need to adjust your steps below. Try to replicate what is there as closely as you can and pretty much just insert a separate partition for /boot as described below.

We'll do this on a fresh drive /dev/sdX (always replace with the correct name), but if you're trying to resize a disk with existing data instead, make sure to shrink the partition first. This is out of scope for this guide, but have a look at these instructions if you want to do this.

I prefer cfdisk, so open your new drive with cfdisk /dev/sdX and create the following setup (make sure to set the disklabel type to gpt!):

Code:

Device Start End Sectors Size Type

/dev/sdX1 34 2047 2014 1007K BIOS boot

/dev/sdX2 2048 1050623 1048576 512M EFI System

/dev/sdX3 1050624 2074624 1024001 500M Linux filesystem

/dev/sdX4 2074625 1953523711 1951449087 930.5G Linux filesystem

Note: When I attempted to do this, I noticed that the first partition already started at sector 2048. If your experience is the same, you can skip what's labeled as /dev/sdX1 above and start with the EFI System.
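If your disk has a different size, the Sectors and Size columns in the layout above are easy to recompute. A small sketch of the arithmetic, assuming 512-byte logical sectors (check the "Sector size" line of fdisk -l for your drive); both helper names are made up for this example:

```shell
#!/usr/bin/env bash
# Hypothetical helpers; assumes 512-byte logical sectors unless told otherwise.

# Number of sectors in a partition. Start and End are both inclusive,
# hence the "+ 1".
sector_count() {
    echo $(( $2 - $1 + 1 ))
}

# Size in whole MiB (rounded down) for a given sector count.
sectors_to_mib() {
    local sectors="$1" sector_size="${2:-512}"
    echo $(( sectors * sector_size / 1048576 ))
}

sector_count 2048 1050623    # EFI System row, prints 1048576
sectors_to_mib 1048576       # prints 512
```

The same functions reproduce the other rows, e.g. sector_count 1050624 2074624 gives 1024001 sectors, which is the 500M boot partition.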

While Linux doesn't care too much about the partition types set above, it is important that the EFI System is set to EFI System (C12A7328-F81F-11D2-BA4B-00A0C93EC93B) so that the UEFI bootloader can find it.

If everything looks good, select "Write", type "yes" and then quit. Check it all looks good with fdisk -l /dev/sdX. Most important here:

- There's 2048 sectors free at the start for the bootloader

- There's an EFI partition with the correct type. 512M is generously sized. Mine currently only uses about 500K (less than 1%!). I just copied what Proxmox did, you can probably go much smaller.

- There's a separate boot partition. I suggest 500M at least. Mine is currently using 103M so any smaller and it might get crowded here.
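The first two points of the checklist above can also be verified from the shell. This is a sketch under assumptions: the helper names are hypothetical, and it relies on lsblk (util-linux) being able to print the partition type GUID through its PARTTYPE column.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for double-checking the new layout.

# GPT partition type GUID for "EFI System", lower-cased.
EFI_SYSTEM_GUID="c12a7328-f81f-11d2-ba4b-00a0c93ec93b"

# True if the given partition type GUID is "EFI System" (case-insensitive).
is_efi_system_type() {
    local guid
    guid="$(printf '%s' "$1" | tr 'A-Z' 'a-z')"
    [ "$guid" = "$EFI_SYSTEM_GUID" ]
}

# True if a start sector leaves the first 2048 sectors of the disk free.
leaves_boot_gap() {
    [ "$1" -ge 2048 ]
}

# Usage (replace /dev/sdX2 with your EFI partition, run as root):
#   is_efi_system_type "$(lsblk -no PARTTYPE /dev/sdX2)" && echo "EFI type ok"
#   leaves_boot_gap 2048 && echo "gap ok"
```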

Let's prepare the two unencrypted partitions now. First, EFI should be FAT, e.g. FAT32 via

Bash:

mkfs.fat -F 32 /dev/sdX2

If this command fails because mkfs.fat doesn't exist, you may have forgotten to install dosfstools.

Then we create the /boot partition:

Bash:

mkfs.ext4 /dev/sdX3

Next, we will set up our encrypted root partition.
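Before moving on, it doesn't hurt to confirm that the two filesystems just created are what we expect. A minimal sketch using blkid from util-linux; expect_fs is a hypothetical helper name, and /dev/sdX2 and /dev/sdX3 are the same placeholders as above:

```shell
#!/usr/bin/env bash
# "expect_fs" is a hypothetical helper; blkid ships with util-linux.

# Succeeds if blkid reports the expected filesystem type on a device.
expect_fs() {
    local device="$1" expected="$2" actual
    actual="$(blkid -o value -s TYPE "$device")"
    if [ "$actual" = "$expected" ]; then
        echo "$device: $actual (ok)"
    else
        echo "$device: expected $expected, found '$actual'" >&2
        return 1
    fi
}

# Usage (run as root on the live system):
#   expect_fs /dev/sdX2 vfat    # FAT32 is reported as "vfat"
#   expect_fs /dev/sdX3 ext4
```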
