Hi,

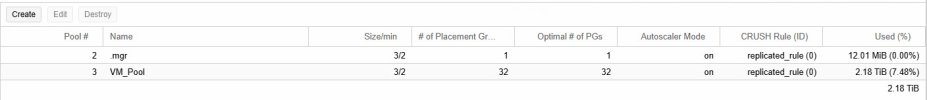

During a near disaster, we opted to restore some VMs that had been backed up from VMware vSphere by Acronis to local SAN storage.

The restore was performed from bootable media supplied by Acronis.

However, this process was unable to finish.

Restoring a small VM with about 50 GB of data took more than 7 hours!

The picture was the same when performing the restore from the cloud, so neither the local storage nor the internet connection can be the culprit.

Performing the restore with the same bootable media from within a new VMware ESXi VM finished in less than 9 minutes.

We did not see any spikes in network, CPU, etc. during the restore process in Proxmox.

Are there any performance tweaks we could apply to the restore VM or the bootable media to speed up the restore?

Do you have any input on how this restore VM should be configured?
