https://forum.proxmox.com/threads/4-node-ceph-cluster-no-switch.131248/

Shout out to all the peeps who have helped me thus far on this quick journey.

I'm fairly new to all of this and have just dipped my toes in the water with this setup, so please keep that in mind.

I've made some changes based on lessons learned in my first thread, so a new thread felt better suited, but please merge if that's not appropriate.

As a reminder, this is my homelab setup and not even my production stack: I'm running a few bare-metal servers that I want to decommission once this is running well, so I'm still focusing on the "infra" build. Some parts are new and were ordered for this build, but many are old stock pieced together, especially the disks, so pardon some of the variance.

My two main concerns (where to put the SAS drives, and how to benchmark) are listed at the end of this post.

Summary of Cluster:

I have three identical nodes that form the core of the cluster and will run most of the heavy VMs; the other two nodes are for quorum and extra storage, which is mostly the topic of this thread.

(3) Dell 5040 SFFs - i5-6500, 32 GB non-ECC
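
For reference on the quorum side: corosync (votequorum) needs a strict majority of votes, assuming one vote per node (the Proxmox default). A minimal Python sketch of that arithmetic, which shows why the fifth node, not the fourth, is what raises failure tolerance:

```python
def quorum_votes(nodes: int) -> int:
    """Votes needed for a strict majority, assuming one vote per node."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many nodes can go down while the rest still hold quorum."""
    return nodes - quorum_votes(nodes)


for n in (3, 4, 5):
    print(f"{n} nodes: quorum = {quorum_votes(n)}, tolerates {tolerated_failures(n)} down")
```

With 3 or 4 nodes the cluster survives one node down; with 5 it survives two, which is why an odd node count is the usual advice.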

Storage:

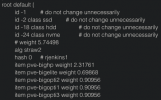

- (1) 500 GB Samsung EVO NVMe (device class shows up as SSD in the GUI; should I be worried?)

- (2) 250 GB Crucial MX500 SSDs (scored a lot of 10, so I spread them around the nodes)
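
On the "NVMe shows as SSD" question: Ceph groups OSDs by CRUSH device class, and a class only affects placement if a CRUSH rule selects on it (the default replicated rule does not). If you later want the NVMe OSDs in their own class, the standard commands are `ceph osd crush rm-device-class` followed by `ceph osd crush set-device-class`. A minimal Python sketch that just builds those command lines; the OSD IDs are hypothetical, so check yours with `ceph osd tree` first:

```python
import shlex


def reclass_commands(osd_ids: list[int], new_class: str) -> list[str]:
    """Build the ceph CLI calls that move OSDs into a different device class.

    An OSD's existing class has to be removed before a new one can be set,
    so each OSD gets an rm-device-class call followed by set-device-class.
    """
    cmds = []
    for osd_id in osd_ids:
        osd = f"osd.{osd_id}"
        cmds.append(shlex.join(["ceph", "osd", "crush", "rm-device-class", osd]))
        cmds.append(shlex.join(["ceph", "osd", "crush", "set-device-class", new_class, osd]))
    return cmds


# Hypothetical OSD IDs for the three NVMe drives; replace with your own.
for cmd in reclass_commands([0, 3, 6], "nvme"):
    print(cmd)
```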

Network:

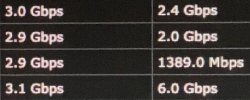

- 10G Mellanox for Ceph (public network as well)

- Dual-port 1G Broadcom in LACP for LAN/management

- Onboard Intel 217 for corosync Ring 1

- USB 1G Realtek for corosync Ring 2

(1) HP EliteDesk SFF - i5-4950 @ 3.3 GHz - 32 GB non-ECC (mostly to be the odd man out for quorum, but I had it lying around, so why not)

Storage:

- (1) 256 GB Synix NVMe (device class shows up as SSD in the GUI; should I be worried?)

- (1) 256 GB Samsung SSD

- (1) 128 GB Kingston SSD (pending install if not needed for WAL; see below)

Network:

- 10G Mellanox for Ceph (public network as well)

- Dual 1G Intel 1000 in LACP for LAN/management (this computer refused to boot with ANY dual-port 1G card I tried)

- Onboard Intel 217 for corosync Ring 1

- USB 1G Realtek for corosync Ring 2

For these three, since they are all flash storage, I don't think much "tuning" needs to be done, but please let me know if that's wrong.

These are all set up using default settings via the GUI.

Now for the odd man out with the odd storage, where I think some advice might benefit me, but again, I don't really know, so I'm looking for feedback.

(1) HP ProLiant ML360 G6 - dual 2.9 GHz Xeons - 48 GB ECC memory

Storage:

- (1) 256 GB Synix NVMe (device class shows up as SSD in the GUI; should I be worried?)

- (1) 256 GB Synix SSD

- (4x) 256 GB Crucial MX500 SSDs

- (8x) 146 GB HP SAS drives (six 10K and two 15K RPM)

- 128 GB Kingston SSD for DB
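
Since this node holds far more raw capacity than the others, it is worth estimating how much of it a replicated pool can actually use. Assuming the Proxmox default of size 3 with `host` as the failure domain, each object needs three replicas on three different hosts, so no host can hold more than one copy of any object. The usable data D is then bounded by the largest value with sum over hosts of min(capacity, D) >= 3 x D. A minimal Python sketch of that bound, using raw sizes only (ignoring BlueStore overhead and the nearfull/full ratios) and assuming every listed drive except the 128 GB Kingston drives is an OSD:

```python
def usable_capacity(host_caps_gb: list[float], size: int = 3) -> float:
    """Upper bound on data storable when each object needs `size` replicas
    on `size` distinct hosts (failure domain = host).

    A host holds at most one replica of each object, so for D GB of data
    host h stores at most min(cap_h, D); we need sum(min(cap_h, D)) >= size * D.
    """
    def fits(d: float) -> bool:
        return sum(min(c, d) for c in host_caps_gb) >= size * d

    lo, hi = 0.0, sum(host_caps_gb) / size  # total/size is the naive ceiling
    for _ in range(100):  # bisection for the largest D that still fits
        mid = (lo + hi) / 2
        if fits(mid):
            lo = mid
        else:
            hi = mid
    return lo


# Raw GB per host from this post (assumption: all listed drives are OSDs
# except the 128 GB Kingstons; adjust if one of them is the boot disk).
dell = 500 + 2 * 250          # each of the three 5040s
elitedesk = 256 + 256
proliant = 256 + 256 + 4 * 256 + 8 * 146
hosts = [dell, dell, dell, elitedesk, proliant]

print(f"naive raw/3: {sum(hosts) / 3:.0f} GB")
print(f"host-constrained: {usable_capacity(hosts):.0f} GB")
```

With these numbers the naive raw/3 figure is about 2072 GB, but the host constraint caps it near 1756 GB, because the ProLiant can never hold more than one copy of any object.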

Network (currently running a single-port RSTP ring with this machine serving as the hub; a MikroTik is in the mail):

- Dual-port Intel X520 for Ceph (public network as well)

- 10G Mellanox for Ceph (public network as well)

- Dual onboard 1G Broadcom in LACP for LAN/management

- 1G Intel 1000 for corosync Ring 1

- USB 1G Realtek for corosync Ring 2

- SAS drives:

  - Should they just be part of the main pool, or should I group them into a separate "slower" pool?

  - I'm under the impression that Ceph understands the data and will "sort" it accordingly to make the best use of disk capacity.

- Benchmarking: how do I test this stack to see what works well? It seems to work great right now, but you know what they say about telemetry versus the good old butt dyno, lol.
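
On the SAS question: CRUSH places data according to OSD weight (by default derived from capacity), not drive speed, and the default replicated rule ignores device classes, so adding the SAS drives to the main pool would put some replicas of ordinary data on them rather than tiering hot and cold data. A separate "slower" pool is normally built with a device-class rule (`ceph osd crush rule create-replicated <name> <root> <failure-domain> <class>`) and then assigned with `ceph osd pool set <pool> crush_rule <rule>`. One catch: all eight SAS drives sit in the ProLiant, so a rule with `host` as the failure domain finds only one host with `hdd` OSDs and cannot place three replicas. A minimal Python sketch of that check, assuming the SAS drives are detected as class `hdd` and that `ceph osd tree -f json` exposes `nodes`, `type`, `children` and `device_class` fields (verify against your version); the rule and pool names are hypothetical and the pool must already exist:

```python
import json
import shlex


def hosts_with_class(tree_json: str, device_class: str) -> set[str]:
    """Names of hosts owning at least one OSD of the given device class.

    Assumes `ceph osd tree -f json` output: a "nodes" list where host entries
    have type "host" plus a "children" list of OSD ids, and OSD entries carry
    a "device_class" field.
    """
    nodes = json.loads(tree_json)["nodes"]
    osd_class = {n["id"]: n.get("device_class") for n in nodes if n["type"] == "osd"}
    return {
        n["name"]
        for n in nodes
        if n["type"] == "host"
        and any(osd_class.get(child) == device_class for child in n.get("children", []))
    }


def choose_failure_domain(num_hosts: int, size: int = 3) -> str:
    """'host' if enough hosts exist for `size` replicas, else 'osd'.

    'osd' keeps every replica inside one server, so it protects against a
    disk failure but not against losing that host.
    """
    return "host" if num_hosts >= size else "osd"


def slow_pool_commands(rule: str, pool: str, device_class: str, failure_domain: str) -> list[str]:
    """ceph CLI calls that create a class-restricted rule and point a pool at it."""
    return [
        shlex.join(["ceph", "osd", "crush", "rule", "create-replicated",
                    rule, "default", failure_domain, device_class]),
        shlex.join(["ceph", "osd", "pool", "set", pool, "crush_rule", rule]),
    ]
```

Feed `hosts_with_class` the saved output of `ceph osd tree -f json`, pass the host count to `choose_failure_domain`, and print `slow_pool_commands("sas-slow", "sas-pool", "hdd", domain)` to review before running anything.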
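
For benchmarking, Ceph ships `rados bench`, which writes objects to a pool and reports throughput and latency. A common sequence is a `write` run with `--no-cleanup`, then `seq` and `rand` read runs over the same objects, then `rados -p <pool> cleanup`; running it against a dedicated test pool keeps benchmark objects away from VM data. A minimal Python sketch that runs that sequence and collects the numeric "Key: value" summary lines into a dict; the pool name `bench-test` is hypothetical, and the parser assumes the summary format (lines such as "Bandwidth (MB/sec): ..."), so check it against your own output:

```python
import subprocess


def parse_rados_bench(output: str) -> dict[str, float]:
    """Collect numeric 'Key: value' summary lines from rados bench output."""
    stats: dict[str, float] = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # progress-table rows have no colon
        try:
            stats[key.strip()] = float(value.strip())
        except ValueError:
            pass  # skip non-numeric fields such as the object prefix
    return stats


def run_bench(pool: str, seconds: int = 30) -> dict[str, dict[str, float]]:
    """Write, then sequential and random reads, then remove the bench objects."""
    results: dict[str, dict[str, float]] = {}
    for mode in ("write", "seq", "rand"):
        cmd = ["rados", "bench", "-p", pool, str(seconds), mode]
        if mode == "write":
            cmd.append("--no-cleanup")  # keep objects so the read runs have data
        out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
        results[mode] = parse_rados_bench(out)
    subprocess.run(["rados", "-p", pool, "cleanup"], check=True)
    return results
```

For example, `run_bench("bench-test")["write"].get("Bandwidth (MB/sec)")` gives the write throughput; repeating the run against an SSD-only pool and a SAS-only pool would give a like-for-like comparison instead of the butt dyno.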
