Hi,

Here is the story: a three-node cluster made of three Mac Minis, each with an internal disk and an external disk. The cluster is configured, Ceph is configured with replication, and HA is configured.

After a few hours of running, I discover that the local-lvm storage on node2 and node3 is offline. A short investigation reveals that the volume group ‘pve’ has been renamed ‘vgroup’ on these two nodes. I rename the volume group back, but I forget to update fstab, grub and the initramfs (I didn’t know I had to). Whatever… node2 and node3 get their local-lvm volumes back.

Strangely, node1 begins to show the same behaviour, so I do the same renaming. In the end, all my nodes have a volume group named ‘pve’.

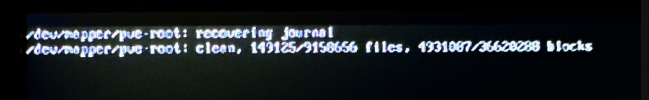

I don’t know why, or perhaps now I do: because I didn’t update fstab, grub and the initramfs, node1 goes totally offline after a simple apt upgrade and reboot.

Again the volume group is named ‘vgroup’, while grub, fstab and so on still reference it as ‘pve’! So no volume is mounted and the node is down.

I don’t notice it for a few days, because HA migrated the node1 VM (a Home Assistant instance) to node2.

I don’t remember why, but I ask my friend who is on site to reboot the Home Assistant machine; he misunderstands and reboots the whole of node2.

The result is that the migrated VM no longer boots, saying that there is no bootable volume.

I tried moving the VM disk to the internal disk as raw: no luck. I tried moving it as qcow2: no luck either.

I’m stuck, and I don’t know whether removing node1 from the cluster and doing a fresh install would get my VM disk back. I only need some data from the VM disk, so if I could mount it I would back up the data I need, but I haven’t succeeded.

I tried to fire up a new VM and mount the /dev/sdb disk, but lsblk and fdisk -l tell me there is no partition, so I can’t mount anything.

Oh, and I forgot to say: as it was a safe cluster... I don't have any backup :-(

If ever someone has an idea…

Thanks for your help

V.

Code:

root@pvemini2:~# pveversion -v
proxmox-ve: 7.3-1 (running kernel: 5.15.74-1-pve)
pve-manager: 7.3-3 (running version: 7.3-3/c3928077)
pve-kernel-5.15: 7.2-14
pve-kernel-helper: 7.2-14
pve-kernel-5.15.74-1-pve: 5.15.74-1
ceph: 17.2.5-pve1
ceph-fuse: 17.2.5-pve1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.2
libproxmox-backup-qemu0: 1.3.1-1
libpve-access-control: 7.2-5
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.2-8
libpve-guest-common-perl: 4.2-3
libpve-http-server-perl: 4.1-5
libpve-storage-perl: 7.2-12
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.0-3
lxcfs: 4.0.12-pve1
novnc-pve: 1.3.0-3
proxmox-backup-client: 2.2.7-1
proxmox-backup-file-restore: 2.2.7-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.5.3
pve-cluster: 7.3-1
pve-container: 4.4-2
pve-docs: 7.3-1
pve-edk2-firmware: 3.20220526-1
pve-firewall: 4.2-7
pve-firmware: 3.5-6
pve-ha-manager: 3.5.1
pve-i18n: 2.8-1
pve-qemu-kvm: 7.1.0-4
pve-xtermjs: 4.16.0-1
qemu-server: 7.3-1
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+2
vncterm: 1.7-1
zfsutils-linux: 2.1.6-pve1

Code:

cat /etc/pve/storage.cfg
dir: local
        path /var/lib/vz
        content iso,images,vztmpl,rootdir,snippets,backup
        prune-backups keep-all=1

lvmthin: local-lvm
        thinpool data
        vgname pve
        content images,rootdir

rbd: ceph-replicate
        content rootdir,images
        krbd 0
        pool ceph-replicate

Code:

  VG                                        #PV #LV #SN Attr   VSize    VFree
  ceph-46a69907-e338-47a4-abb1-3b3a9214cd48   1   1   0 wz--n-  465.73g     0
  pve                                         1   4   0 wz--n- <931.01g 15.99g
root@pvemini2:~# pvs
  PV         VG                                        Fmt  Attr PSize    PFree
  /dev/sda3  pve                                       lvm2 a--  <931.01g 15.99g
  /dev/sdb   ceph-46a69907-e338-47a4-abb1-3b3a9214cd48 lvm2 a--   465.73g     0
