We recently completed the upgrade to Proxmox 7.

The issue occurs on two different kernels. Output of `pveversion`:

pve-manager/7.2-7/d0dd0e85 (running kernel: 5.15.39-1-pve)

and

pve-manager/7.2-7/d0dd0e85 (running kernel: 5.15.35-2-pve)

Since the upgrade, IO wait has increased dramatically during vzdump backups.

I also noticed that vzdump seems to complete backups faster than before the upgrade. That's good, but the trade-off seems to be overloading the IO subsystem to the point where it's causing issues.

vzdump writes to a spinning-rust SATA disk encrypted with LUKS.

VM storage is a mix of ZFS zvols and LVM over DRBD.

The backing storage for DRBD ranges from Areca RAID arrays (either spinning rust or SSD) to a few PCIe SSDs.

Most of our nodes have only fast SSDs for VM storage. On those, IO wait during backups averaged around 4% before the upgrade and is now around 8%, which does not seem to cause any issues.

On nodes with both SSD and spinning rust, IO wait averaged around 8% before the upgrade and around 35% after it, and we are seeing IO stalls in VMs, which was not a problem in Proxmox 6.
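For anyone wanting to reproduce these percentages on a node without Zabbix, a minimal sketch, assuming a Linux host: IO wait % over an interval is the growth of the `iowait` counter in the aggregate `cpu` line of `/proc/stat`, divided by the growth of all CPU time counters. The function names are hypothetical, and it assumes `user` through `steal` (the first 8 counters) make up total CPU time; Zabbix may compute its value slightly differently.

```python
from typing import List


def parse_cpu_line(line: str) -> List[int]:
    """Return the first 8 counters (user..steal) from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    # guest and guest_nice (fields 9 and 10) are already included in user/nice, so skip them
    return [int(v) for v in fields[1:9]]


def iowait_percent(before: str, after: str) -> float:
    """Share of CPU time spent in iowait between two samples, in percent."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    total = sum(deltas)
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total  # index 4 is the iowait counter


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat, which is the aggregate 'cpu' line."""
    with open(path) as f:
        return f.readline()
```

Usage: call `read_cpu_line()`, wait a few seconds during a vzdump run, call it again, and pass both lines to `iowait_percent`.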

Nearly all VMs are set up to use 'VirtIO SCSI' (a few use 'VirtIO'), with the default cache, IO Threads disabled, and the default Async IO setting.

I see that the Async IO default changed to io_uring in Proxmox 7, and I believe this is the source of the problem.

https://bugzilla.kernel.org/show_bug.cgi?id=199727

That bug report seems to indicate I should use 'VirtIO SCSI Single', enable IO Threads, and set Async IO to threads to alleviate this issue.
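To make the proposed change concrete, here is a minimal sketch of what it means for a VM config in `/etc/pve/qemu-server/<vmid>.conf`: `scsihw` becomes `virtio-scsi-single`, and each SCSI disk gets `iothread=1` and `aio=threads`. The helper functions are hypothetical, not a Proxmox tool (in practice `qm set` or the GUI is the supported way to change these). The sketch assumes `key: value` lines, leaves bracketed snapshot/pending sections untouched, skips CD-ROM drives, and only handles `scsiN` disks, so the few 'VirtIO' (`virtioN`) disks are not covered.

```python
import re

# Disk keys attached to the SCSI controller (scsi0, scsi1, ...)
SCSI_DISK = re.compile(r"^scsi\d+$")


def set_disk_options(spec: str, **opts: str) -> str:
    """Set key=value options on a disk spec such as 'local-zfs:vm-100-disk-0,size=32G'."""
    volume, *rest = spec.split(",")
    options = dict(item.split("=", 1) for item in rest if item)
    options.update(opts)  # overrides existing values, e.g. aio=native -> aio=threads
    return ",".join([volume] + [f"{k}={v}" for k, v in options.items()])


def apply_suggested_settings(config: str) -> str:
    """Switch to VirtIO SCSI Single and enable iothread + aio=threads on every SCSI disk."""
    out = []
    in_section = False
    for line in config.splitlines():
        if line.startswith("["):
            in_section = True  # snapshot/pending sections follow the live config; copy as-is
        key, sep, value = line.partition(": ")
        if not in_section and sep:
            if key == "scsihw":
                line = "scsihw: virtio-scsi-single"
            elif SCSI_DISK.match(key) and "media=cdrom" not in value:
                line = f"{key}: {set_disk_options(value, iothread='1', aio='threads')}"
        out.append(line)
    return "\n".join(out)
```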

Any other suggestions?

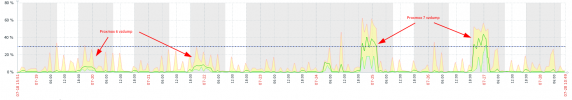

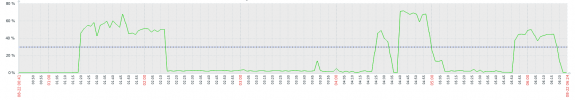

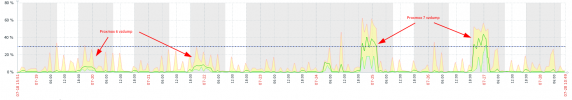

Here is a zabbix graph of IO wait from one of the nodes: